Exploratory Data Analysis

What is EDA?

EDA is the unstructured process of probing the data we haven’t seen before to understand more about it with a view to thinking about how we can use the data, and to discover what it reveals as insights at first glance.

At other times, we need to analyze some data with no particular objective in mind except to find out if it could be useful for anything at all.

Consider a situation where your manager points you to some data and asks you to do some analysis on it. The data could be in a Google Drive, or a Github repo, or on a thumb drive. It may have been received from a client, a customer or a vendor. You may have a high level pointer to what the data is, for example you may know there is order history data, or invoice data, or web log data. The ask may not be very specific, nor the goal clarified, but we would like to check the data out to see if there is something useful we can do with it.

In other situations, we are looking for something specific, and are looking for the right data to analyze. For example, we may be trying to to identify zip codes where to market our product. We may be able to get data that provides us information on income, consumption, population characteristics etc that could help us with our task. When we receive such data, we would like to find out if it is fit for purpose.

Inquiries to conduct

So when you get data that you do not know much about in advance, you start with exploratory data analysis, or EDA. Possible inquiries you might like to conduct are:

- How much data do we have - number of rows in the data?

- How many columns, or fields do we have in the dataset?

- Data types - which of the columns appear to be numeric, dates or strings?

- Names of the columns, and do they tell us anything?

- A visual review of a sample of the dataset

- Completeness of the dataset, are missing values obvious? Columns that are largely empty?

- Unique values for columns that appear to be categorical, and how many observations of each category?

- For numeric columns, the range of values (calculated from min and max values)

- Distributions for the different columns, possibly graphed

- Correlations between the different columns

Exploratory Data Analysis (EDA) is generally the first activity performed to get a high level understanding of new data. It employs a variety of graphical and summarization techniques to get a ‘sense of the data’.

The purpose of Exploratory Data Analysis is to interrogate the data in an open-minded way with a view to understanding the structure of the data, uncover any prominent themes, identify important variables, detect obvious anomalies, consider missing values, review data types, obtain a visual understanding of the distribution of the data, understand correlations between variables, etc. Not all these things can be discovered during EDA, but these are generally the things we look for when performing EDA.

EDA is unstructured exploration, there is not a defined set of activities you must perform. Generally, you probe the data, and depending upon what you discover, you ask more questions.

Introduction to Arrays

Arrays, or collection of numbers, are fundamental to analytics at scale. We will cover arrays from a NumPy lens exclusively, given how much NumPy dominates all array based manipulation.

NumPy is the underlying library for manipulating arrays in Python. And arrays are really important for analytics. The reason arrays are important is because many analytical algorithms will only accept arrays as input. Deep learning networks will exclusively accept only arrays as input, though arrays are called tensors in the deep learning world. In addition to this practical issue, data is much easier to manipulate, transform and perform mathematical operations on if it is expressed as an array.

NumPy underpins pandas as well as many other libraries. So we may not be using it a great deal, but there will be situations where numpy is unavoidable.

Below is a high level overview of what arrays are, and some basic array operations.

Multi-dimensional data

Arrays have structure in the form of dimensions, and numbers sit at the intersection of these dimensions. In a spreadsheet, you see two dimensions - one being the rows, represented as 1, 2, 3..., and the other the columns, repesented as A, B, C. Numpy arrays can have any number of dimensions, even though dimensions beyond the third are humanly impossible to visualize.

A numpy array when printed in Python encloses data for a dimension in square brackets. The fundamental unit of an array of any size is a single one-dimensional row where numbers are separated by commas and enclosed in a set of square brackets, for example, [1, 2, 3, 1]. Several of these will then be arranged within additional nested square brackets to make up the complete array. To understand the idea of an array, mentally visualize a 2-dimensional array similar to a spreadsheet. Every number within the array exists at the intersection of all of its dimensions. In Python, each position along a dimension, more commonly called an axis, is represented by numbers starting with the first element being 0. These positions are called indexes.

The number of square brackets [ gives the number of dimensions in the array. Two are represented on screen, the rows and columns, like a 2D matrix. But the screen is two-dimensional, and cannot display additional dimensions. Therefore all other dimensions appear as repeats of rows and columns - look at the example next. The last two dimensions, eg here 3, 4 represent rows and columns. The 2, the first one, means there are two sets of these rows and columns in the array!

Creating arrays with Numpy

Everything that Numpy touches ends as an array, just like everything from a pandas function is a dataframe. Easiest way to generate a random array is np.random.randn(2,3) which will give an array with dimensions 2,3. You can pick any other dimensions too. randn gives random normal numbers.

# import some libraries

import pandas as pd

import os

import random

import numpy as np

import scipy

import math

import joblib

# Create a one dimensional array

np.random.randn(4)

array([ 1.1736499 , 1.54772703, -0.21693701, 0.31459622])

# Create a 2-dimensional array with random normal variables

# np.random.seed(123)

np.random.randn(2,3)

array([[-0.4905774 , -1.47052507, -1.04379812],

[-0.20386335, 0.56686123, 1.16730192]])

# Create a 3-dimensional array with random integers

x = np.random.randint(low = 1, high = 5, size = (2,3,4))

print('Shape: ', x.shape)

x

Shape: (2, 3, 4)

array([[[3, 3, 2, 1],

[2, 1, 1, 2],

[1, 1, 1, 3]],

[[1, 4, 1, 1],

[3, 2, 1, 1],

[2, 4, 2, 3]]])

Numpy axes numbers run from left to right, starting with the index 0. So x.shape gives me 2, 3, 4 which means 2 is the 0th axis, 3 rows are the 1st axis and 4 columns are the 2nd axis.

The shape of the above array is (2, 3, 4)

axis = 0 means : (2, 3, 4)

axis = 1 means : (2, 3, 4)

axis = 2 means : (2, 3, 4)

# Create a 3-dimensional array

data = np.random.randn(2, 3, 4)

print('The shape of the array is:', data.shape)

data

The shape of the array is: (2, 3, 4)

array([[[-0.94207619, 0.85819949, 0.85937811, 0.03423557],

[ 0.43471567, -0.3977568 , -0.38560239, 1.37103135],

[-0.8236544 , -0.75445943, 0.34979668, 0.46855885]],

[[ 1.12936861, -0.44238069, 0.96649123, -1.36034059],

[ 0.64099078, 1.41112827, -0.58302938, 0.0526134 ],

[ 1.6253795 , 0.47798241, 0.53996765, -0.77834533]]])

The number of

[gives the number of dimensions in the array.

Two are represented on screen, the rows and columns. All others appear afterwards. The last two dimensions, eg here 3, 4 represent rows and columns. The 2, the first one, means there are two sets of these rows and columns in the array.

np.random.randn(4, 3, 2)

array([[[-1.84207847, -0.22688959],

[ 1.40275113, 0.74415778],

[-0.15042182, -0.75451819]],

[[ 0.58001497, 0.9170983 ],

[ 0.253829 , 1.08733683],

[-0.6430149 , 2.01905416]],

[[ 0.15379162, -0.07853098],

[-0.85224692, 0.25954211],

[ 0.0392591 , 0.29043794]],

[[-0.85687338, -0.90593571],

[-1.28917985, 0.25920641],

[ 1.48624977, -0.27429377]]])

# Now let us add another dimension. But this time random integers than random normal.

# The random integer function (randint) requires specifying low and high for the uniform distribution.

data = np.random.randint(low = 1, high = 100, size = (2,3,2,4))

data

array([[[[50, 35, 31, 23],

[67, 69, 34, 61]],

[[70, 83, 55, 30],

[47, 76, 54, 61]],

[[73, 14, 87, 13],

[ 8, 95, 6, 13]]],

[[[73, 33, 25, 83],

[48, 73, 44, 83]],

[[54, 24, 72, 3],

[ 1, 91, 30, 60]],

[[62, 39, 74, 77],

[14, 33, 8, 83]]]])

So there will be a collection of 2 rows x 4 columns matrices, repeated 3 times, and that entire set another 2 times.

And the 4 occurrences of [[[[ means there are 4 dimensions to the array.

type(data)

numpy.ndarray

# Converting a list to an array

list1 = list(range(12))

list1

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]

array1 = np.array(list1)

array1

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11])

# This array1 is one dimensional, let us convert to a 3x4 array.

array1.shape = (3,4)

array1

array([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]])

# Create arrays of zeros

array1 = np.zeros((2,3)) # The dimensions must be a tuple inside the brackets

array1

array([[0., 0., 0.],

[0., 0., 0.]])

# Create arrays from a range

array1 = np.arange((12))

array1

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11])

#You can reshape the dimensions of an array

array1.reshape(3,4)

array([[ 0, 1, 2, 3],

[ 4, 5, 6, 7],

[ 8, 9, 10, 11]])

array1.reshape(3,2,2)

array([[[ 0, 1],

[ 2, 3]],

[[ 4, 5],

[ 6, 7]],

[[ 8, 9],

[10, 11]]])

# Create an array of 1's

array1 = np.ones((3,5))

array1

array([[1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1.],

[1., 1., 1., 1., 1.]])

# Creates the identity matrix

array1 = np.eye(4)

array1

array([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]])

# Create an empty array - useful if you need a place to keep data that will be generated later in the code.

# It shows zeros but is actually empty

np.empty([2,3])

array([[6.23042070e-307, 4.67296746e-307, 1.69121096e-306],

[9.34609111e-307, 1.42413555e-306, 1.78019082e-306]])

Summarizing data along an axis

Putting the axis = n argument with a summarization function (eg, sum) makes the axis n disappear, having been summarized into the function's results, leaving only the rest of the dimensions. So np.sum(array_name, axis = n), similarly mean(), min(), median(), std() etc will calculate the aggregation function by collapsing all the elements of the selected axis number into one and performing that operation. See below using the sum function.

x = data = np.random.randint(low = 1, high = 100, size = (2,3))

x

array([[17, 58, 47],

[63, 98, 94]])

# So with axis = 0, the very first dimension, ie the 2 rows, will collapse leaving an array of shape (3,)

x.sum(axis = 0)

array([ 80, 156, 141])

# So with axis = 0, the very first dimension, ie the 2 rows, will collapse leaving an array of shape (2,)

x.sum(axis = 1)

array([122, 255])

Subsetting arrays ('slices')

Python starts numbering things starting with zero, which means the first item is the 0th item.

The portion of the dimension you wish to select is given in the form start:finish where the start element is included, but the finish is excluded. So 1:3 means include 1 and 2 but not 3.

: means include everything

array1 = np.random.randint(0, 100, (3,5))

array1

array([[46, 19, 51, 42, 76],

[80, 27, 40, 28, 81],

[34, 37, 87, 93, 97]])

array1[0:2, 0:2]

array([[46, 19],

[80, 27]])

array1[:,0:2] # ':' means include everything

array([[46, 19],

[80, 27],

[34, 37]])

array1[0:2]

array([[46, 19, 51, 42, 76],

[80, 27, 40, 28, 81]])

#Slices are references to the original array. So you if you need a copy, use the below:

array1[0:2].copy()

array([[46, 19, 51, 42, 76],

[80, 27, 40, 28, 81]])

Generally, use the above 'Long Form' way for slicing where you specify the indices for each dimension. Where everything is to be included, use :. There are other short-cut methods of slicing, but can leave those as is.

Imagine an array a1 with dimensions (3, 5, 2, 4). This means: - This array has 3 arrays in it that have the dimensions (5, 2, 4) - Each of these 3 arrays have 5 additional arrays each in them of the dimension (2,4). (So there are 3*5=15 of these 2x4 arrays) - Each of these (2,4) arrays has 2 one-dimensional arrays with 4 columns.

If in the slice notation only a portion of what to include is specified, eg a1[0], then it means we are asking for the first one of these axes, ie the dimension parameters are specifying from the left of (3, 5, 2, 4). It means give me the first of the 3 arrays with size (5,2,4).

If the slice notation says a1[0,1], then it means 0th element of the first dim, and 1st element of the second dim.

Check it out using the following code:

a1 = np.random.randint(0, 100, (3,4,2,5))

a1

array([[[[59, 41, 61, 8, 39],

[73, 32, 61, 51, 6]],

[[69, 3, 25, 8, 46],

[67, 65, 13, 83, 88]],

[[79, 17, 61, 24, 86],

[97, 47, 49, 53, 55]],

[[77, 52, 43, 40, 74],

[51, 39, 97, 66, 19]]],

[[[54, 88, 81, 40, 95],

[74, 61, 27, 53, 92]],

[[ 9, 57, 21, 87, 73],

[99, 6, 77, 63, 76]],

[[73, 31, 94, 85, 65],

[95, 78, 27, 83, 44]],

[[75, 63, 71, 49, 43],

[54, 4, 93, 75, 70]]],

[[[87, 0, 13, 69, 0],

[81, 13, 88, 24, 36]],

[[21, 19, 30, 32, 55],

[40, 21, 74, 89, 68]],

[[80, 34, 75, 13, 9],

[63, 19, 73, 12, 47]],

[[27, 29, 45, 65, 43],

[83, 21, 11, 45, 6]]]])

a1[0].shape

(4, 2, 5)

a1[0]

array([[[59, 41, 61, 8, 39],

[73, 32, 61, 51, 6]],

[[69, 3, 25, 8, 46],

[67, 65, 13, 83, 88]],

[[79, 17, 61, 24, 86],

[97, 47, 49, 53, 55]],

[[77, 52, 43, 40, 74],

[51, 39, 97, 66, 19]]])

a1[0,1]

array([[69, 3, 25, 8, 46],

[67, 65, 13, 83, 88]])

More slicing: Picking selected rows or columns

a1 = np.random.randint(0, 100, (8,9))

a1

array([[44, 78, 29, 91, 82, 86, 76, 3, 90],

[69, 96, 29, 79, 25, 47, 95, 87, 85],

[79, 42, 99, 88, 14, 38, 47, 62, 41],

[39, 98, 27, 95, 65, 2, 59, 72, 16],

[46, 44, 55, 65, 32, 5, 79, 40, 65],

[38, 76, 78, 8, 76, 35, 27, 32, 51],

[58, 75, 3, 99, 23, 73, 77, 12, 39],

[66, 43, 58, 35, 33, 85, 75, 8, 10]])

# Select the first row

a1[0]

array([44, 78, 29, 91, 82, 86, 76, 3, 90])

# Select the fourth row

a1[3]

array([39, 98, 27, 95, 65, 2, 59, 72, 16])

# Select the first and the fourth row together

a1[[0,3]]

array([[44, 78, 29, 91, 82, 86, 76, 3, 90],

[39, 98, 27, 95, 65, 2, 59, 72, 16]])

# Select the first and the fourth column

a1[:,[0,3]]

array([[44, 91],

[69, 79],

[79, 88],

[39, 95],

[46, 65],

[38, 8],

[58, 99],

[66, 35]])

# Select subset of named rows and columns

a1[[0, 3]][:,[0, 1]] # Named rows and columns.

# Note that a1[[0, 3],[0, 1]] does not work as expected, it selects two points (0,0)and (3,1).

# Really crazy but it is what it is.

array([[44, 78],

[39, 98]])

### Operations on arrays All math on arrays is element wise, and scalars are multiplied/added with each element.

array1 + 4

array([[68, 82, 45, 28, 15],

[86, 33, 19, 37, 21],

[29, 72, 94, 70, 62]])

array1 > np.random.randint(0, 2, (3,5))

array([[ True, True, True, True, True],

[ True, True, True, True, True],

[ True, True, True, True, True]])

array1 + 2

array([[66, 80, 43, 26, 13],

[84, 31, 17, 35, 19],

[27, 70, 92, 68, 60]])

np.sum(array1) # adds all the elements of an array

701

np.sum(array1, axis = 0) # adds all elements of the array along a particular axis

array([171, 175, 146, 123, 86])

Matrix math

Numpy has arrays as well as matrices. Matrices are 2D, arrays can have any number of dimensions. The only real difference between a matrix (type = numpy.matrix) and an array (type = numpy.ndarray) is that all array operations are element wise, ie the special R x C matrix multiplication does not apply to arrays. However, for an array that is 2 x 2 in shape you can use the @ operator to do matrix math.

So that leaves matrices and arrays interchangeable in a practical sense. Except that you can't do an inverse of an array using .I which you can for a matrix.

# Create a matrix 'm' and an array 'a' that are identical

m = np.matrix(np.random.randint(0,10,(3,3)))

a = np.array(m)

m

matrix([[4, 2, 7],

[7, 0, 2],

[9, 3, 4]])

a

array([[4, 2, 7],

[7, 0, 2],

[9, 3, 4]])

Transpose with a .T

m.T

matrix([[4, 7, 9],

[2, 0, 3],

[7, 2, 4]])

a.T

array([[4, 7, 9],

[2, 0, 3],

[7, 2, 4]])

Inverse with a .I

Does not work for arrays

m.I

matrix([[-0.05825243, 0.12621359, 0.03883495],

[-0.09708738, -0.45631068, 0.39805825],

[ 0.2038835 , 0.05825243, -0.13592233]])

Matrix multiplication

For matrices, just a * suffices for matrix multiplication. If using arrays, use @ for matrix multiplication, which also works for matrices. So just to be safe, just use @.

Dot-product is the same as row-by-column matrix multiplication, and is not elementwise.

a=np.matrix([[4, 3], [2, 1]])

b=np.mat([[1, 2], [3, 4]])

a

matrix([[4, 3],

[2, 1]])

b

matrix([[1, 2],

[3, 4]])

a*b

matrix([[13, 20],

[ 5, 8]])

a@b

matrix([[13, 20],

[ 5, 8]])

# Now check with arrays

a=np.array([[4, 3], [2, 1]])

b=np.array([[1, 2], [3, 4]])

a@b # does matrix multiplication.

array([[13, 20],

[ 5, 8]])

a

array([[4, 3],

[2, 1]])

b

array([[1, 2],

[3, 4]])

a*b # element-wise multiplication as a and b are arrays

array([[4, 6],

[6, 4]])

@ is the same as np.dot(a, b), which is just a longer fully spelled out function.

np.dot(a,b)

array([[13, 20],

[ 5, 8]])

Exponents with matrices and arrays **.

a = np.array([[4, 3], [2, 1]])

m = np.matrix(a)

m

matrix([[4, 3],

[2, 1]])

a**2 # Because a is an array, this will square each element of a.

array([[16, 9],

[ 4, 1]], dtype=int32)

m**2 # Because m is a matrix, this will be read as m*m, and dot product of the matrix with itself will result.

matrix([[22, 15],

[10, 7]])

which is same as a@a

a@a

array([[22, 15],

[10, 7]])

Modulus, or size

The modulus is just sqrt(a^2 + b^2 + ....n^2), where a, b...n are elements of the vector, matrix or array. Can be calculated using np.linalg.norm(a)

a = np.array([4,3,2,1])

np.linalg.norm(a)

5.477225575051661

# Same as calculating manually

(4**2 + 3**2 + 2**2 + 1**2) ** 0.5

5.477225575051661

b

array([[1, 2],

[3, 4]])

np.linalg.norm(b)

5.477225575051661

m

matrix([[4, 3],

[2, 1]])

np.linalg.norm(m)

5.477225575051661

m = np.matrix(np.random.randint(0,10,(3,3)))

m

matrix([[1, 4, 5],

[2, 3, 6],

[4, 6, 6]])

np.linalg.norm(m)

13.379088160259652

print(np.ravel(m))

print(type(np.ravel(m)))

print('Manual calculation for norm')

((np.ravel(m)**2).sum())**.5

[1 4 5 2 3 6 4 6 6]

<class 'numpy.ndarray'>

Manual calculation for norm

13.379088160259652

Determinant of a matrix np.linalg.det(a)

Used for calculating the inverse of a matrix, and only applies to square matrices.

np.linalg.det(m)

30.000000000000014

Converting from matrix to array and vice-versa

np.asmatrix and np.asarray allow you to convert one to the other. Though above we have just used np.array and np.matrix without any issue.

The above references: https://stackoverflow.com/questions/4151128/what-are-the-differences-between-numpy-arrays-and-matrices-which-one-should-i-u

Distances and angles between vectors

Size of a vector, angle between vectors, distance between vectors

# We set up two vectors a and b

a = np.array([1,2,3]); b = np.array([5,4,3])

print('a =',a)

print('b =',b)

a = [1 2 3]

b = [5 4 3]

# Size of the vector, computed as the root of the squares of each of the elements

np.linalg.norm(a)

3.7416573867739413

# Distance between two vectors

np.linalg.norm(a - b)

4.47213595499958

# Which is the same as

print(np.sqrt(np.dot(a, a) - 2 * np.dot(a, b) + np.dot(b, b)))

(a@a + b@b - 2*a@b)**.5

4.47213595499958

4.47213595499958

# Combine the two vectors

X = np.concatenate((a,b)).reshape(2,3)

X

array([[1, 2, 3],

[5, 4, 3]])

# Euclidean distance is the default metric for this function

# from sklearn

from sklearn.metrics import pairwise_distances

pairwise_distances(X)

array([[0. , 4.47213595],

[4.47213595, 0. ]])

# Angle in radians between two vectors. To get the

# answer in degrees, multiply by 180/pi, or 180/math.pi (after import math). Also there is a function in math called

# math.radians to get radians from degrees, or math.degrees(x) to convert angle x from radians to degrees.

import math

angle_in_radians = np.arccos(np.dot(a,b) / (np.linalg.norm(a) * np.linalg.norm(b)))

angle_in_degrees = math.degrees(angle_in_radians)

print('Angle in degrees =', angle_in_degrees)

print('Angle in radians =', angle_in_radians)

Angle in degrees = 33.74461333141198

Angle in radians = 0.5889546074455115

# Same as above using math.acos instead of np.arccos

math.acos(np.dot(a,b) / (np.linalg.norm(a) * np.linalg.norm(b)))

0.5889546074455115

Sorting with argsort

Which is the same as sort, but shows index numbers instead of the values

# We set up an array

a = np.array([20,10,30,0])

# Sorted indices

np.argsort(a)

array([3, 1, 0, 2], dtype=int64)

# Using the indices to get the sorted values

a[np.argsort(a)]

array([ 0, 10, 20, 30])

# Descending sort indices

np.argsort(a)[::-1]

array([2, 0, 1, 3], dtype=int64)

# Descending sort values

a[np.argsort(a)[::-1]]

array([30, 20, 10, 0])

Understanding DataFrames

As we discussed in the prior section, understanding and manipulating arrays of numbers is fundamental to the data science process. This is because nearly all ML and AI algorithms insist on being provided data arrays as inputs, and the NumPy library underpins almost all of data science.

As we discussed, a NumPy array is essentially a collection of numbers. This collection is organized along ‘dimensions’. So NumPy objects are n-dimensional array objects, or ndarray, a fast and efficient container for large datasets in Python.

But arrays have several limitations. One huge limitation is that they are raw containers with numbers, they don't have 'headers', or labels that describe the columns, rows, or the additional dimensions. This means we need to track separately somewhere what each of the dimensions mean. Another limitation is that after 3 dimensions, the additional dimensions are impossible toto visualize in the human mind. For most practical purposes, humans like to think of data in the tabular form, with just rows and columns. If there are more dimensions, one can have multiple tables.

This is where pandas steps in. Pandas use dataframes, or a spreadsheet like construct where there are rows and columns, and these rows and columns can have names or headings. Pandas dataframes are easily converted to NumPy arrays, and algorithms will mostly accept a dataframe as an input just as they would an array.

Exploring Tabular Data with Pandas

Tabular data is often the most common data type that is encountered, though ‘unstructured’ data is increasingly becoming common. Tabular data is two dimensional data – with rows and columns. The columns are defined and understood, and we generally understand what they contain.

- Data is laid out as a 2-dimensional matrix, whether in a spreadsheet, or R/Python dataframes, or in a database table.

- Rows generally represent individual observations, while columns are the fields/variables.

- Variables can be numeric, or categorical.

- Numerical variables can be integers, floats etc, and are continuous.

- Categorical variables may be cardinal (eg, species, gender), or ordinal (eg, low, medium, high), and belong to a discrete set.

- Categorical variables are also called factors, and levels.

- Algorithms often require categorical variables to be converted to numerical variables.

Unstructured data includes audio, video and other kinds of data that is useful for problems of perception. Unstructured data will almost invariably need to be converted into structured arrays with defined dimensions, but for the moment we will skip that.

Reading data with Pandas

Pandas offer several different functions for reading different types of data.

read_csv: Load comma separated files

read_table: Load tab separated files

read_fwf: Read data in fixed-width column format (i.e., no delimiters)

read_clipboardRead data from the clipboard; useful for converting tables from web pages

read_excel: Read Excel files

read_html: Read all tables found in the given HTML document

read_json: Read data from a JSON (JavaScript Object Notation) file

read_pickle: Read a pickle file

read_sql: Read results of an SQL query

read_sas: Read SAS files

Other data types in Python

- Lists are represented as

[]. Lists are a changeable collection of elements, and the elements can be any Python data, eg strings, numbers, dictionaries, or even other lists. - Dictionaries are enclosed in

{}. These are 'key:value' pairs, where 'key' is almost like a name given to a 'value'. - Sets are also enclosed in

{}, except they don't have the colons separating the key:value pairs. These are collections of items, and they are unordered. - Tuples are collections of variables, and enclosed in

(). They are different from sets in that they are unchangeable.

# Example - creating a list

empty_list = []

list1 = ['a', 2,4, 'python']

list1

['a', 2, 4, 'python']

# Example - creating a dictionary

dict1 = {'first': ['John', 'Jane'], 'something_else': (1,2,3)}

dict1

{'first': ['John', 'Jane'], 'something_else': (1, 2, 3)}

dict1['first']

['John', 'Jane']

dict1['something_else']

(1, 2, 3)

# Checking the data type of the new variable we created

type(dict1)

dict

# Checking the data type

type(list1)

list

# Set operations

set1 = {1,2,4,5} # Sets can do intersect, union and difference

# Tuple example

tuple1 = 1, 3, 4 # or

tuple1 = (1, 3, 4)

tuple1

(1, 3, 4)

Loading built-in data sets in Python

Before we move forward with getting into the details with EDA, we will first take a small digressive detour to talk about data sets.

In order to experiment with EDA, we need some data. We can bring our own data, but for exploration and experimentation, it is often easy to load up one of the many in-built datasets accessible through Python. These datasets cover the spectrum - from really small datasets to those with many thousands of records, and include text data such as movie reviews and tweets.

We will leverage these built in datasets for the rest of the discussion as they provide a good path to creating reproducible examples. These datasets are great for experimenting, testing, doing tutorials and exercises.

The next few headings will cover these in-built datasets.

- The Statsmodels library provides access to several interesting inbuilt datasets in Python.

- The datasets available in R can also be accessed through statsmodels.

- The Seaborn library has several toy datasets available to explore.

- The Scikit Learn (sklearn) library also has in-built datasets.

- Scikit Learn also provides a function to generate random datasets with described characteristics (

make_blobsfunction)

In the rest of this discussion, we will use these data sets and explore the data.

Some of these are described below, together with information on how to access and use such datasets.

# Load the regular libraries

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

Loading data from Statsmodels

Statsmodels allows access to several datasets for use in examples, model testing, tutorials, testing functions etc. These can be accessed using sm.datasets.macrodata.load_pandas()['data'], where macrodata is just one example of a dataset. Pressing TAB after sm.datasets should bring up a pick-list of datasets to choose from.

The commands print(sm.datasets.macrodata.DESCRLONG) and print(sm.datasets.macrodata.NOTE) provide additional details on the datasets.

# Load macro economic data from Statsmodels

import statsmodels.api as sm

df = sm.datasets.macrodata.load_pandas()['data']

df

| year | quarter | realgdp | realcons | realinv | realgovt | realdpi | cpi | m1 | tbilrate | unemp | pop | infl | realint | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1959.0 | 1.0 | 2710.349 | 1707.4 | 286.898 | 470.045 | 1886.9 | 28.980 | 139.7 | 2.82 | 5.8 | 177.146 | 0.00 | 0.00 |

| 1 | 1959.0 | 2.0 | 2778.801 | 1733.7 | 310.859 | 481.301 | 1919.7 | 29.150 | 141.7 | 3.08 | 5.1 | 177.830 | 2.34 | 0.74 |

| 2 | 1959.0 | 3.0 | 2775.488 | 1751.8 | 289.226 | 491.260 | 1916.4 | 29.350 | 140.5 | 3.82 | 5.3 | 178.657 | 2.74 | 1.09 |

| 3 | 1959.0 | 4.0 | 2785.204 | 1753.7 | 299.356 | 484.052 | 1931.3 | 29.370 | 140.0 | 4.33 | 5.6 | 179.386 | 0.27 | 4.06 |

| 4 | 1960.0 | 1.0 | 2847.699 | 1770.5 | 331.722 | 462.199 | 1955.5 | 29.540 | 139.6 | 3.50 | 5.2 | 180.007 | 2.31 | 1.19 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 198 | 2008.0 | 3.0 | 13324.600 | 9267.7 | 1990.693 | 991.551 | 9838.3 | 216.889 | 1474.7 | 1.17 | 6.0 | 305.270 | -3.16 | 4.33 |

| 199 | 2008.0 | 4.0 | 13141.920 | 9195.3 | 1857.661 | 1007.273 | 9920.4 | 212.174 | 1576.5 | 0.12 | 6.9 | 305.952 | -8.79 | 8.91 |

| 200 | 2009.0 | 1.0 | 12925.410 | 9209.2 | 1558.494 | 996.287 | 9926.4 | 212.671 | 1592.8 | 0.22 | 8.1 | 306.547 | 0.94 | -0.71 |

| 201 | 2009.0 | 2.0 | 12901.504 | 9189.0 | 1456.678 | 1023.528 | 10077.5 | 214.469 | 1653.6 | 0.18 | 9.2 | 307.226 | 3.37 | -3.19 |

| 202 | 2009.0 | 3.0 | 12990.341 | 9256.0 | 1486.398 | 1044.088 | 10040.6 | 216.385 | 1673.9 | 0.12 | 9.6 | 308.013 | 3.56 | -3.44 |

203 rows × 14 columns

# Print the description of the data

print(sm.datasets.macrodata.DESCRLONG)

US Macroeconomic Data for 1959Q1 - 2009Q3

# Print the data-dictionary for the different columns/fields in the data

print(sm.datasets.macrodata.NOTE)

::

Number of Observations - 203

Number of Variables - 14

Variable name definitions::

year - 1959q1 - 2009q3

quarter - 1-4

realgdp - Real gross domestic product (Bil. of chained 2005 US$,

seasonally adjusted annual rate)

realcons - Real personal consumption expenditures (Bil. of chained

2005 US$, seasonally adjusted annual rate)

realinv - Real gross private domestic investment (Bil. of chained

2005 US$, seasonally adjusted annual rate)

realgovt - Real federal consumption expenditures & gross investment

(Bil. of chained 2005 US$, seasonally adjusted annual rate)

realdpi - Real private disposable income (Bil. of chained 2005

US$, seasonally adjusted annual rate)

cpi - End of the quarter consumer price index for all urban

consumers: all items (1982-84 = 100, seasonally adjusted).

m1 - End of the quarter M1 nominal money stock (Seasonally

adjusted)

tbilrate - Quarterly monthly average of the monthly 3-month

treasury bill: secondary market rate

unemp - Seasonally adjusted unemployment rate (%)

pop - End of the quarter total population: all ages incl. armed

forces over seas

infl - Inflation rate (ln(cpi_{t}/cpi_{t-1}) * 400)

realint - Real interest rate (tbilrate - infl)

Importing R datasets using Statsmodels

Datasets available in R can also be imported using the command sm.datasets.get_rdataset('mtcars').data, where mtcards can be replaced by the appropriate dataset name.

# Import the mtcars dataset which contains attributes for 32 models of cars

mtcars = sm.datasets.get_rdataset('mtcars').data

mtcars.to_excel('mtcars.xlsx')

mtcars.describe()

| mpg | cyl | disp | hp | drat | wt | qsec | vs | am | gear | carb | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.000000 | 32.0000 |

| mean | 20.090625 | 6.187500 | 230.721875 | 146.687500 | 3.596563 | 3.217250 | 17.848750 | 0.437500 | 0.406250 | 3.687500 | 2.8125 |

| std | 6.026948 | 1.785922 | 123.938694 | 68.562868 | 0.534679 | 0.978457 | 1.786943 | 0.504016 | 0.498991 | 0.737804 | 1.6152 |

| min | 10.400000 | 4.000000 | 71.100000 | 52.000000 | 2.760000 | 1.513000 | 14.500000 | 0.000000 | 0.000000 | 3.000000 | 1.0000 |

| 25% | 15.425000 | 4.000000 | 120.825000 | 96.500000 | 3.080000 | 2.581250 | 16.892500 | 0.000000 | 0.000000 | 3.000000 | 2.0000 |

| 50% | 19.200000 | 6.000000 | 196.300000 | 123.000000 | 3.695000 | 3.325000 | 17.710000 | 0.000000 | 0.000000 | 4.000000 | 2.0000 |

| 75% | 22.800000 | 8.000000 | 326.000000 | 180.000000 | 3.920000 | 3.610000 | 18.900000 | 1.000000 | 1.000000 | 4.000000 | 4.0000 |

| max | 33.900000 | 8.000000 | 472.000000 | 335.000000 | 4.930000 | 5.424000 | 22.900000 | 1.000000 | 1.000000 | 5.000000 | 8.0000 |

# Load the famous Iris dataset

iris = sm.datasets.get_rdataset('iris').data

iris

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

| ... | ... | ... | ... | ... | ... |

| 145 | 6.7 | 3.0 | 5.2 | 2.3 | virginica |

| 146 | 6.3 | 2.5 | 5.0 | 1.9 | virginica |

| 147 | 6.5 | 3.0 | 5.2 | 2.0 | virginica |

| 148 | 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 149 | 5.9 | 3.0 | 5.1 | 1.8 | virginica |

150 rows × 5 columns

Datasets in Seaborn

Several datasets are accessible through the Seaborn library

# Get the names of all the datasets that are available through Seaborn

import seaborn as sns

sns.get_dataset_names()

['anagrams',

'anscombe',

'attention',

'brain_networks',

'car_crashes',

'diamonds',

'dots',

'dowjones',

'exercise',

'flights',

'fmri',

'geyser',

'glue',

'healthexp',

'iris',

'mpg',

'penguins',

'planets',

'seaice',

'taxis',

'tips',

'titanic']

# Load the diamonds dataset

diamonds = sns.load_dataset('diamonds')

diamonds.head(20)

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | Ideal | E | SI2 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 |

| 1 | 0.21 | Premium | E | SI1 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 |

| 2 | 0.23 | Good | E | VS1 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 |

| 3 | 0.29 | Premium | I | VS2 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 |

| 4 | 0.31 | Good | J | SI2 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 |

| 5 | 0.24 | Very Good | J | VVS2 | 62.8 | 57.0 | 336 | 3.94 | 3.96 | 2.48 |

| 6 | 0.24 | Very Good | I | VVS1 | 62.3 | 57.0 | 336 | 3.95 | 3.98 | 2.47 |

| 7 | 0.26 | Very Good | H | SI1 | 61.9 | 55.0 | 337 | 4.07 | 4.11 | 2.53 |

| 8 | 0.22 | Fair | E | VS2 | 65.1 | 61.0 | 337 | 3.87 | 3.78 | 2.49 |

| 9 | 0.23 | Very Good | H | VS1 | 59.4 | 61.0 | 338 | 4.00 | 4.05 | 2.39 |

| 10 | 0.30 | Good | J | SI1 | 64.0 | 55.0 | 339 | 4.25 | 4.28 | 2.73 |

| 11 | 0.23 | Ideal | J | VS1 | 62.8 | 56.0 | 340 | 3.93 | 3.90 | 2.46 |

| 12 | 0.22 | Premium | F | SI1 | 60.4 | 61.0 | 342 | 3.88 | 3.84 | 2.33 |

| 13 | 0.31 | Ideal | J | SI2 | 62.2 | 54.0 | 344 | 4.35 | 4.37 | 2.71 |

| 14 | 0.20 | Premium | E | SI2 | 60.2 | 62.0 | 345 | 3.79 | 3.75 | 2.27 |

| 15 | 0.32 | Premium | E | I1 | 60.9 | 58.0 | 345 | 4.38 | 4.42 | 2.68 |

| 16 | 0.30 | Ideal | I | SI2 | 62.0 | 54.0 | 348 | 4.31 | 4.34 | 2.68 |

| 17 | 0.30 | Good | J | SI1 | 63.4 | 54.0 | 351 | 4.23 | 4.29 | 2.70 |

| 18 | 0.30 | Good | J | SI1 | 63.8 | 56.0 | 351 | 4.23 | 4.26 | 2.71 |

| 19 | 0.30 | Very Good | J | SI1 | 62.7 | 59.0 | 351 | 4.21 | 4.27 | 2.66 |

# Load the mpg dataset from Seaborn. This is similar to the mtcars dataset,

# but has a higher count of observations.

sns.load_dataset('mpg')

| mpg | cylinders | displacement | horsepower | weight | acceleration | model_year | origin | name | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 18.0 | 8 | 307.0 | 130.0 | 3504 | 12.0 | 70 | usa | chevrolet chevelle malibu |

| 1 | 15.0 | 8 | 350.0 | 165.0 | 3693 | 11.5 | 70 | usa | buick skylark 320 |

| 2 | 18.0 | 8 | 318.0 | 150.0 | 3436 | 11.0 | 70 | usa | plymouth satellite |

| 3 | 16.0 | 8 | 304.0 | 150.0 | 3433 | 12.0 | 70 | usa | amc rebel sst |

| 4 | 17.0 | 8 | 302.0 | 140.0 | 3449 | 10.5 | 70 | usa | ford torino |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 393 | 27.0 | 4 | 140.0 | 86.0 | 2790 | 15.6 | 82 | usa | ford mustang gl |

| 394 | 44.0 | 4 | 97.0 | 52.0 | 2130 | 24.6 | 82 | europe | vw pickup |

| 395 | 32.0 | 4 | 135.0 | 84.0 | 2295 | 11.6 | 82 | usa | dodge rampage |

| 396 | 28.0 | 4 | 120.0 | 79.0 | 2625 | 18.6 | 82 | usa | ford ranger |

| 397 | 31.0 | 4 | 119.0 | 82.0 | 2720 | 19.4 | 82 | usa | chevy s-10 |

398 rows × 9 columns

# Look at how many cars from each country in the mpg dataset

sns.load_dataset('mpg').origin.value_counts()

usa 249

japan 79

europe 70

Name: origin, dtype: int64

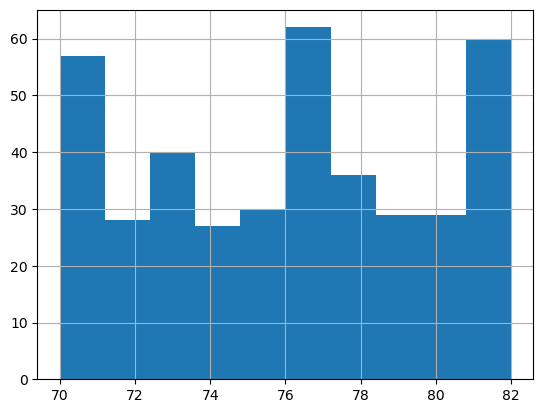

# Build a histogram of the model year

sns.load_dataset('mpg').model_year.astype('category').hist();

# Create a random dataframe with random data

n = 25

df = pd.DataFrame(

{'state': list(np.random.choice(["New York", "Florida", "California"], size=(n))),

'gender': list(np.random.choice(["Male", "Female"], size=(n), p=[.4, .6])),

'education': list(np.random.choice(["High School", "Undergrad", "Grad"], size=(n))),

'housing': list(np.random.choice(["Rent", "Own"], size=(n))),

'height': list(np.random.randint(140,200,n)),

'weight': list(np.random.randint(100,150,n)),

'income': list(np.random.randint(50,250,n)),

'computers': list(np.random.randint(0,6,n))

})

df

| state | gender | education | housing | height | weight | income | computers | |

|---|---|---|---|---|---|---|---|---|

| 0 | California | Female | High School | Own | 190 | 119 | 111 | 0 |

| 1 | California | Female | High School | Own | 140 | 126 | 232 | 2 |

| 2 | New York | Female | High School | Rent | 169 | 123 | 111 | 1 |

| 3 | California | Female | High School | Own | 152 | 147 | 123 | 1 |

| 4 | New York | Female | Undergrad | Own | 197 | 111 | 206 | 4 |

| 5 | New York | Male | Grad | Own | 187 | 144 | 87 | 4 |

| 6 | California | Female | High School | Own | 189 | 115 | 75 | 5 |

| 7 | New York | Female | Undergrad | Own | 197 | 117 | 195 | 0 |

| 8 | Florida | Female | Grad | Own | 146 | 127 | 244 | 5 |

| 9 | New York | Female | Undergrad | Rent | 194 | 106 | 138 | 3 |

| 10 | New York | Female | Undergrad | Rent | 181 | 101 | 206 | 2 |

| 11 | California | Female | Undergrad | Rent | 156 | 121 | 243 | 3 |

| 12 | Florida | Male | Grad | Own | 184 | 143 | 129 | 0 |

| 13 | New York | Male | Grad | Own | 168 | 106 | 176 | 3 |

| 14 | New York | Female | Undergrad | Own | 141 | 112 | 225 | 4 |

| 15 | New York | Female | Undergrad | Rent | 171 | 105 | 66 | 5 |

| 16 | Florida | Female | Grad | Rent | 155 | 126 | 233 | 5 |

| 17 | California | Female | Undergrad | Rent | 193 | 106 | 162 | 4 |

| 18 | New York | Male | High School | Rent | 179 | 107 | 187 | 5 |

| 19 | California | Female | Undergrad | Own | 186 | 125 | 79 | 1 |

| 20 | California | Female | Grad | Own | 157 | 102 | 183 | 4 |

| 21 | Florida | Male | Undergrad | Rent | 174 | 109 | 94 | 5 |

| 22 | New York | Female | Grad | Own | 162 | 107 | 140 | 1 |

| 23 | New York | Female | Grad | Rent | 198 | 142 | 193 | 4 |

| 24 | Florida | Male | High School | Rent | 174 | 115 | 55 | 1 |

# Load the 'Old Faithful' eruption data

sns.load_dataset('geyser')

| duration | waiting | kind | |

|---|---|---|---|

| 0 | 3.600 | 79 | long |

| 1 | 1.800 | 54 | short |

| 2 | 3.333 | 74 | long |

| 3 | 2.283 | 62 | short |

| 4 | 4.533 | 85 | long |

| ... | ... | ... | ... |

| 267 | 4.117 | 81 | long |

| 268 | 2.150 | 46 | short |

| 269 | 4.417 | 90 | long |

| 270 | 1.817 | 46 | short |

| 271 | 4.467 | 74 | long |

272 rows × 3 columns

Datasets in sklearn

Scikit Learn has several datasets that are built-in as well that can be used to experiment with functions and algorithms. Some are listed below:

load_boston(*[, return_X_y]) Load and return the boston house-prices dataset (regression).

load_iris(*[, return_X_y, as_frame]) Load and return the iris dataset (classification).

load_diabetes(*[, return_X_y, as_frame]) Load and return the diabetes dataset (regression).

load_digits(*[, n_class, return_X_y, as_frame]) Load and return the digits dataset (classification).

load_linnerud(*[, return_X_y, as_frame]) Load and return the physical excercise linnerud dataset.

load_wine(*[, return_X_y, as_frame]) Load and return the wine dataset (classification).

load_breast_cancer(*[, return_X_y, as_frame]) Load and return the breast cancer wisconsin dataset (classification).

Let us import the wine dataset next, and the California housing datset after that.

from sklearn import datasets

X = datasets.load_wine()['data']

y = datasets.load_wine()['target']

features = datasets.load_wine()['feature_names']

DESCR = datasets.load_wine()['DESCR']

classes = datasets.load_wine()['target_names']

wine_df = pd.DataFrame(X, columns = features)

wine_df.insert(0,'WineType', y)

# X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

df = wine_df[(wine_df['WineType'] != 2)]

# Let us look at the DESCR for the dataframe we just loaded

print(DESCR)

.. _wine_dataset:

Wine recognition dataset

------------------------

**Data Set Characteristics:**

:Number of Instances: 178

:Number of Attributes: 13 numeric, predictive attributes and the class

:Attribute Information:

- Alcohol

- Malic acid

- Ash

- Alcalinity of ash

- Magnesium

- Total phenols

- Flavanoids

- Nonflavanoid phenols

- Proanthocyanins

- Color intensity

- Hue

- OD280/OD315 of diluted wines

- Proline

- class:

- class_0

- class_1

- class_2

:Summary Statistics:

============================= ==== ===== ======= =====

Min Max Mean SD

============================= ==== ===== ======= =====

Alcohol: 11.0 14.8 13.0 0.8

Malic Acid: 0.74 5.80 2.34 1.12

Ash: 1.36 3.23 2.36 0.27

Alcalinity of Ash: 10.6 30.0 19.5 3.3

Magnesium: 70.0 162.0 99.7 14.3

Total Phenols: 0.98 3.88 2.29 0.63

Flavanoids: 0.34 5.08 2.03 1.00

Nonflavanoid Phenols: 0.13 0.66 0.36 0.12

Proanthocyanins: 0.41 3.58 1.59 0.57

Colour Intensity: 1.3 13.0 5.1 2.3

Hue: 0.48 1.71 0.96 0.23

OD280/OD315 of diluted wines: 1.27 4.00 2.61 0.71

Proline: 278 1680 746 315

============================= ==== ===== ======= =====

:Missing Attribute Values: None

:Class Distribution: class_0 (59), class_1 (71), class_2 (48)

:Creator: R.A. Fisher

:Donor: Michael Marshall (MARSHALL%PLU@io.arc.nasa.gov)

:Date: July, 1988

This is a copy of UCI ML Wine recognition datasets.

https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data

The data is the results of a chemical analysis of wines grown in the same

region in Italy by three different cultivators. There are thirteen different

measurements taken for different constituents found in the three types of

wine.

Original Owners:

Forina, M. et al, PARVUS -

An Extendible Package for Data Exploration, Classification and Correlation.

Institute of Pharmaceutical and Food Analysis and Technologies,

Via Brigata Salerno, 16147 Genoa, Italy.

Citation:

Lichman, M. (2013). UCI Machine Learning Repository

[https://archive.ics.uci.edu/ml]. Irvine, CA: University of California,

School of Information and Computer Science.

.. topic:: References

(1) S. Aeberhard, D. Coomans and O. de Vel,

Comparison of Classifiers in High Dimensional Settings,

Tech. Rep. no. 92-02, (1992), Dept. of Computer Science and Dept. of

Mathematics and Statistics, James Cook University of North Queensland.

(Also submitted to Technometrics).

The data was used with many others for comparing various

classifiers. The classes are separable, though only RDA

has achieved 100% correct classification.

(RDA : 100%, QDA 99.4%, LDA 98.9%, 1NN 96.1% (z-transformed data))

(All results using the leave-one-out technique)

(2) S. Aeberhard, D. Coomans and O. de Vel,

"THE CLASSIFICATION PERFORMANCE OF RDA"

Tech. Rep. no. 92-01, (1992), Dept. of Computer Science and Dept. of

Mathematics and Statistics, James Cook University of North Queensland.

(Also submitted to Journal of Chemometrics).

# California housing dataset. medv is the median value of the homes

from sklearn import datasets

X = datasets.fetch_california_housing()['data']

y = datasets.fetch_california_housing()['target']

features = datasets.fetch_california_housing()['feature_names']

DESCR = datasets.fetch_california_housing()['DESCR']

cali_df = pd.DataFrame(X, columns = features)

cali_df.insert(0,'medv', y)

cali_df

| medv | MedInc | HouseAge | AveRooms | AveBedrms | Population | AveOccup | Latitude | Longitude | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.526 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |

| 1 | 3.585 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |

| 2 | 3.521 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |

| 3 | 3.413 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |

| 4 | 3.422 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 20635 | 0.781 | 1.5603 | 25.0 | 5.045455 | 1.133333 | 845.0 | 2.560606 | 39.48 | -121.09 |

| 20636 | 0.771 | 2.5568 | 18.0 | 6.114035 | 1.315789 | 356.0 | 3.122807 | 39.49 | -121.21 |

| 20637 | 0.923 | 1.7000 | 17.0 | 5.205543 | 1.120092 | 1007.0 | 2.325635 | 39.43 | -121.22 |

| 20638 | 0.847 | 1.8672 | 18.0 | 5.329513 | 1.171920 | 741.0 | 2.123209 | 39.43 | -121.32 |

| 20639 | 0.894 | 2.3886 | 16.0 | 5.254717 | 1.162264 | 1387.0 | 2.616981 | 39.37 | -121.24 |

20640 rows × 9 columns

# Again, we can look at what the various columns mean

print(DESCR)

.. _california_housing_dataset:

California Housing dataset

--------------------------

**Data Set Characteristics:**

:Number of Instances: 20640

:Number of Attributes: 8 numeric, predictive attributes and the target

:Attribute Information:

- MedInc median income in block group

- HouseAge median house age in block group

- AveRooms average number of rooms per household

- AveBedrms average number of bedrooms per household

- Population block group population

- AveOccup average number of household members

- Latitude block group latitude

- Longitude block group longitude

:Missing Attribute Values: None

This dataset was obtained from the StatLib repository.

https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.html

The target variable is the median house value for California districts,

expressed in hundreds of thousands of dollars ($100,000).

This dataset was derived from the 1990 U.S. census, using one row per census

block group. A block group is the smallest geographical unit for which the U.S.

Census Bureau publishes sample data (a block group typically has a population

of 600 to 3,000 people).

An household is a group of people residing within a home. Since the average

number of rooms and bedrooms in this dataset are provided per household, these

columns may take surpinsingly large values for block groups with few households

and many empty houses, such as vacation resorts.

It can be downloaded/loaded using the

:func:`sklearn.datasets.fetch_california_housing` function.

.. topic:: References

- Pace, R. Kelley and Ronald Barry, Sparse Spatial Autoregressions,

Statistics and Probability Letters, 33 (1997) 291-297

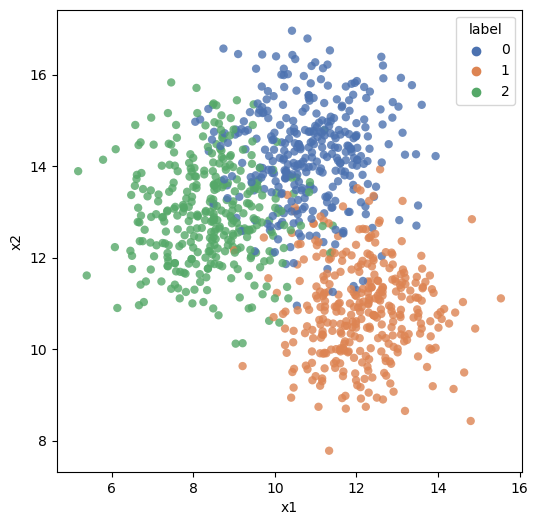

Create Artificial Data using sklearn

In addition to the built-in datasets, it is possible to create artificial data of arbitrary size to test or explain different algorithms for solving classification (both binary and multi-class) as well as regression problems.

One example using the make_blobs function is provided below, but a great deal more detail is available at https://scikit-learn.org/stable/datasets/sample_generators.html#sample-generators

make_blobs and make_classification can create multiclass datasets, and make_regression can be used for creating datasets with specified characteristics. Refer to the sklearn documentation link above to learn more.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.datasets import make_blobs

X, y, centers = make_blobs(n_samples=1000, centers=3, n_features=2,

random_state=0, return_centers=True, center_box=(0,20),

cluster_std = 1.1)

df = pd.DataFrame(dict(x1=X[:,0], x2=X[:,1], label=y))

df = round(df,ndigits=2)

df

| x1 | x2 | label | |

|---|---|---|---|

| 0 | 9.26 | 12.64 | 2 |

| 1 | 12.02 | 14.14 | 0 |

| 2 | 8.50 | 13.12 | 2 |

| 3 | 8.93 | 12.87 | 2 |

| 4 | 7.37 | 11.82 | 2 |

| ... | ... | ... | ... |

| 995 | 11.94 | 10.92 | 1 |

| 996 | 9.40 | 12.17 | 2 |

| 997 | 10.25 | 10.45 | 1 |

| 998 | 7.37 | 12.01 | 2 |

| 999 | 11.01 | 11.17 | 1 |

1000 rows × 3 columns

plt.figure(figsize=(6,6))

sns.scatterplot(data = df, x = 'x1', y = 'x2', hue = 'label',

alpha = .8, palette="deep",edgecolor = 'None');

Exploratory Data Analysis using Python

After all of this lengthy introduction, we are finally ready to get started with actually performing some EDA.

As mentioned earlier, EDA is unstructured exploration, there is not a set of set activities you must perform. Generally, you probe the data, and depending upon what you discover, you ask more questions.

Things we will do:

- Look at how to read different types of data

- Understand how to access in-built datasets in Python

- Calculate summary statistics covered in the prior class (refer list to the right)

- Perform basic graphing using Pandas to explore the data

- Understand group-by and pivoting functions (the split-apply-combine process)

- Look at pandas-profiling, a library that can perform many data exploration tasks

Pandas is a library we will be using often, and is something we will use to explore data and perform EDA. We will also use NumPy and SciPy.

# Load the regular libraries

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

A note on managing working directories

A very basic problem one runs into when trying to load datafiles is the file path - and if the file is not located in the current working directory for Python.

Generally, reading a CSV file is simple - pd.read_csv and pointing to the filename does the trick. If the file is there but pandas returns an error, that could be because the file may not be located in your working directory. In such a case, enter the complete path to the file.

Alternatively, you can bring the file to your working directory. To check and change your working directory, use the following code:

import os

# To check current working directory:

os.getcwd()

'C:\\Users\\user\\Google Drive\\jupyter'

Or, you could type pwd in a cell. Be aware that pwd should be on the first line of the cell!

pwd

'C:\\Users\\user\\Google Drive\\jupyter'

# To change working directory

os.chdir(r'C:\Users\user\Google Drive\jupyter')

EDA on the diamonds dataset

Questions we might like answered

Below is a repeat of what was said in the introduction to this chapter, just to avoid having to go back to check what we are trying to do. When performing EDA, we want to explore data in an unstructured way, and try to get a 'feel' for the data. The kinds of questions we may want to answer are:

- How much data do we have - number of rows in the data?

- How many columns, or fields do we have in the dataset?

- Data types - which of the columns appear to be numeric, dates or strings?

- Names of the columns, and do they tell us anything?

- A visual review of a sample of the dataset

- Completeness of the dataset, are missing values obvious? Columns that are largely empty?

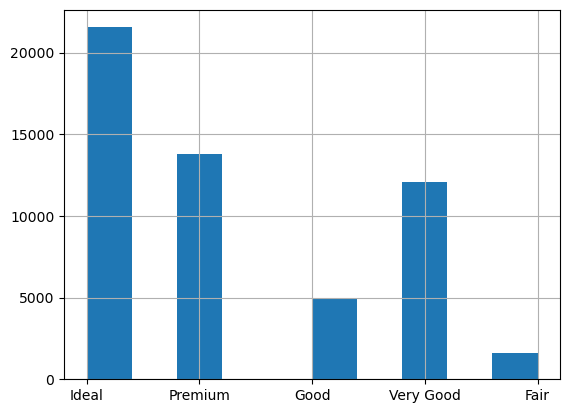

- Unique values for columns that appear to be categorical, and how many observations of each category?

- For numeric columns, the range of values (calculated from min and max values)

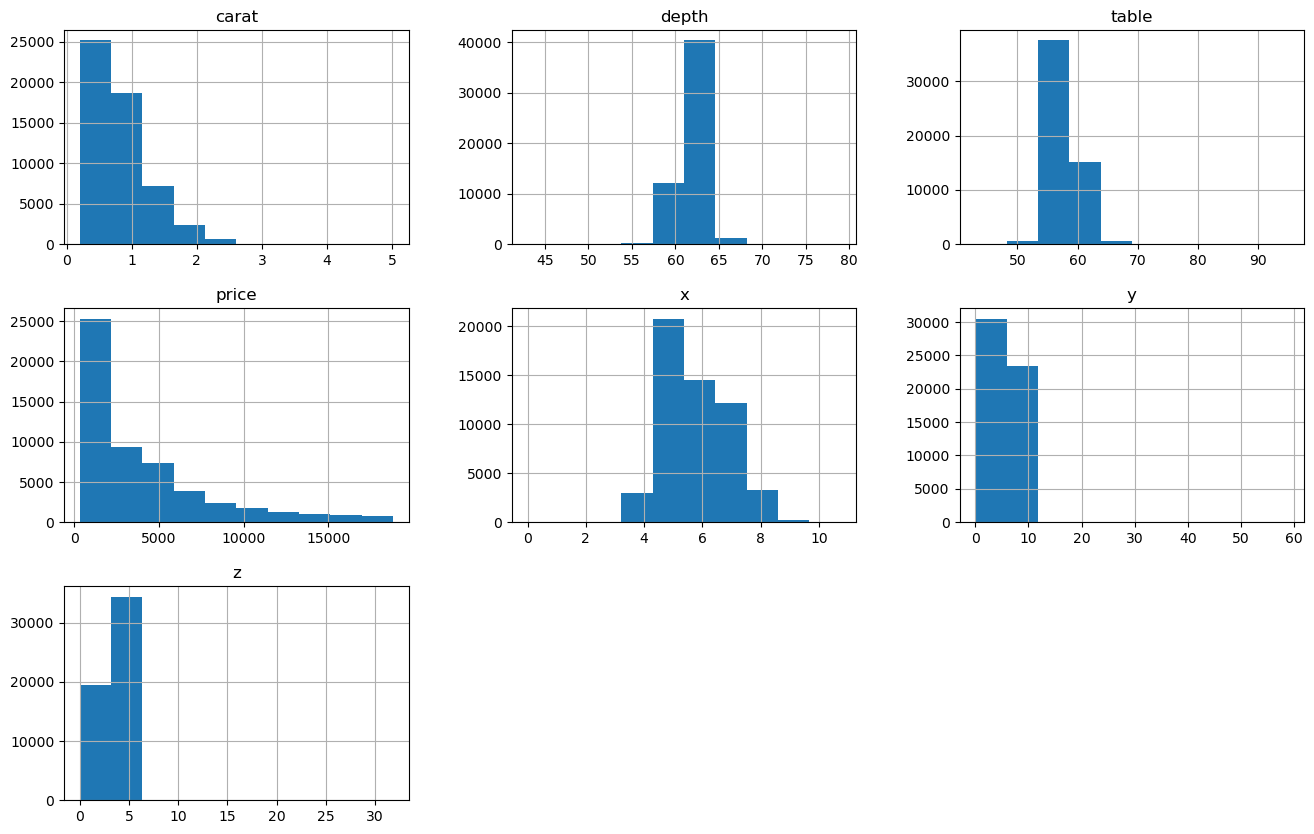

- Distributions for the different columns, possibly graphed

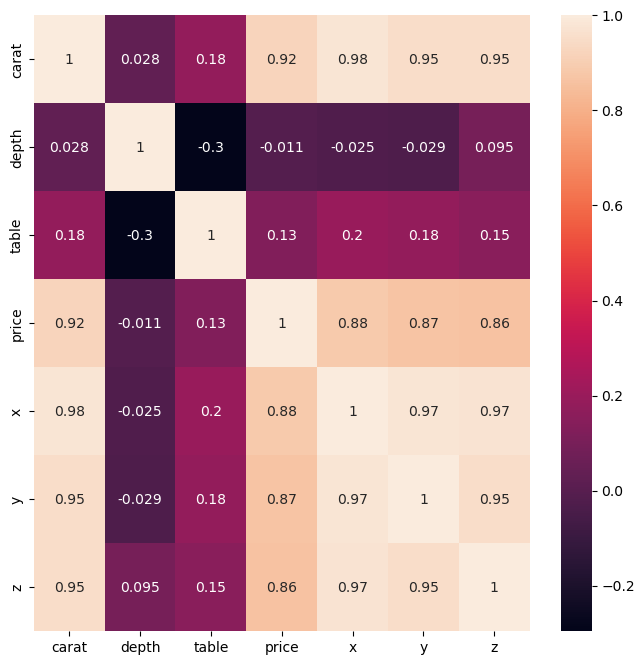

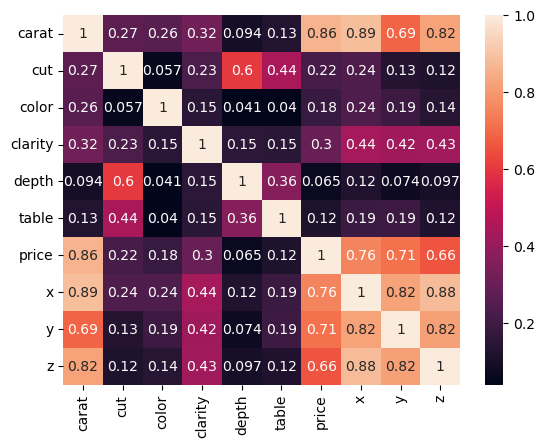

- Correlations between the different columns

Load data

We will start our exploration with the diamonds dataset.

The ‘diamonds’ has 50k+ records, each representing a single diamond. The weight and other attributes are available, and so is the price.

The dataset allows us to experiment with a variety of prediction techniques and algorithms. Below are the columns in the dataset, and their description.

| Column | Description |

|---|---|

| price | price in US dollars (\$326--\$18,823) |

| carat | weight of the diamond (0.2--5.01) |

| cut | quality of the cut (Fair, Good, Very Good, Premium, Ideal) |

| color | diamond colour, from J (worst) to D (best) |

| clarity | a measurement of how clear the diamond is (I1 (worst), SI2, SI1, VS2, VS1, VVS2, VVS1, IF (best)) |

| x | length in mm (0--10.74) |

| y | width in mm (0--58.9) |

| z | depth in mm (0--31.8) |

| depth | total depth percentage = z / mean(x, y) = 2 * z / (x + y) (43--79) |

| table | width of top of diamond relative to widest point (43--95) |

# Load data from seaborn

df = sns.load_dataset('diamonds')

df

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | Ideal | E | SI2 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 |

| 1 | 0.21 | Premium | E | SI1 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 |

| 2 | 0.23 | Good | E | VS1 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 |

| 3 | 0.29 | Premium | I | VS2 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 |

| 4 | 0.31 | Good | J | SI2 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 53935 | 0.72 | Ideal | D | SI1 | 60.8 | 57.0 | 2757 | 5.75 | 5.76 | 3.50 |

| 53936 | 0.72 | Good | D | SI1 | 63.1 | 55.0 | 2757 | 5.69 | 5.75 | 3.61 |

| 53937 | 0.70 | Very Good | D | SI1 | 62.8 | 60.0 | 2757 | 5.66 | 5.68 | 3.56 |

| 53938 | 0.86 | Premium | H | SI2 | 61.0 | 58.0 | 2757 | 6.15 | 6.12 | 3.74 |

| 53939 | 0.75 | Ideal | D | SI2 | 62.2 | 55.0 | 2757 | 5.83 | 5.87 | 3.64 |

53940 rows × 10 columns

Descriptive stats

Pandas describe() function provides a variety of summary statistics. Review the table below. Notice the categorical variables were ignored. This is because descriptive stats do not make sense for categorical variables.

# Let us look at some descriptive statistics for the numerical variables

df.describe()

| carat | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|

| count | 53940.000000 | 53940.000000 | 53940.000000 | 53940.000000 | 53940.000000 | 53940.000000 | 53940.000000 |

| mean | 0.797940 | 61.749405 | 57.457184 | 3932.799722 | 5.731157 | 5.734526 | 3.538734 |

| std | 0.474011 | 1.432621 | 2.234491 | 3989.439738 | 1.121761 | 1.142135 | 0.705699 |

| min | 0.200000 | 43.000000 | 43.000000 | 326.000000 | 0.000000 | 0.000000 | 0.000000 |

| 25% | 0.400000 | 61.000000 | 56.000000 | 950.000000 | 4.710000 | 4.720000 | 2.910000 |

| 50% | 0.700000 | 61.800000 | 57.000000 | 2401.000000 | 5.700000 | 5.710000 | 3.530000 |

| 75% | 1.040000 | 62.500000 | 59.000000 | 5324.250000 | 6.540000 | 6.540000 | 4.040000 |

| max | 5.010000 | 79.000000 | 95.000000 | 18823.000000 | 10.740000 | 58.900000 | 31.800000 |

df.info() gives you information on the dataset

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 53940 entries, 0 to 53939

Data columns (total 10 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 carat 53940 non-null float64

1 cut 53940 non-null category

2 color 53940 non-null category

3 clarity 53940 non-null category

4 depth 53940 non-null float64

5 table 53940 non-null float64

6 price 53940 non-null int64

7 x 53940 non-null float64

8 y 53940 non-null float64

9 z 53940 non-null float64

dtypes: category(3), float64(6), int64(1)

memory usage: 3.0 MB

Similarly, df.shape gives you a tuple with the counts of rows and columns.

Trivia:

- Note there is no () after df.shape, as it is a property. Properties are the 'attributes' of the object that can be set using methods.

- Methods are like functions, but are inbuilt, and apply to an object. They are part of the class definition for the object.

df.shape

(53940, 10)

df.columns gives you the names of the columns.

df.columns

Index(['carat', 'cut', 'color', 'clarity', 'depth', 'table', 'price', 'x', 'y',

'z'],

dtype='object')

Exploring individual columns

Pandas provide a large number of functions that allow us to explore several statistics relating to individual variables.

| Measures | Function (from Pandas, unless otherwise stated) |

|---|---|

| Central Tendency | |

| Mean | mean() |

| Geometric Mean | gmean() (from scipy.stats) |

| Median | median() |

| Mode | mode() |

| Measures of Variability | |

| Range | max() - min() |

| Variance | var() |

| Standard Deviation | std() |

| Coefficient of Variation | std() / mean() |

| Measures of Association | |

| Covariance | cov() |

| Correlation | corr() |

| Analyzing Distributions | |

| Percentiles | quantile() |

| Quartiles | quantile() |

| Z-Scores | zscore (from scipy) |

We examine many of these in action below.

Functions for descriptive stats

# Mean

df.mean(numeric_only=True)

carat 0.797940

depth 61.749405

table 57.457184

price 3932.799722

x 5.731157

y 5.734526

z 3.538734

dtype: float64

# Median

df.median(numeric_only=True)

carat 0.70

depth 61.80

table 57.00

price 2401.00

x 5.70

y 5.71

z 3.53

dtype: float64

# Mode

df.mode()

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.3 | Ideal | G | SI1 | 62.0 | 56.0 | 605 | 4.37 | 4.34 | 2.7 |

# Min, also max works as well

df.min(numeric_only=True)

carat 0.2

depth 43.0

table 43.0

price 326.0

x 0.0

y 0.0

z 0.0

dtype: float64

# Variance

df.var(numeric_only=True)

carat 2.246867e-01

depth 2.052404e+00

table 4.992948e+00

price 1.591563e+07

x 1.258347e+00

y 1.304472e+00

z 4.980109e-01

dtype: float64

# Standard Deviation

df.std(numeric_only=True)

carat 0.474011

depth 1.432621

table 2.234491

price 3989.439738

x 1.121761

y 1.142135

z 0.705699

dtype: float64

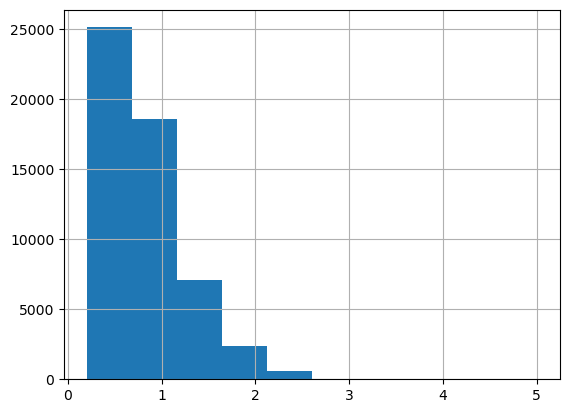

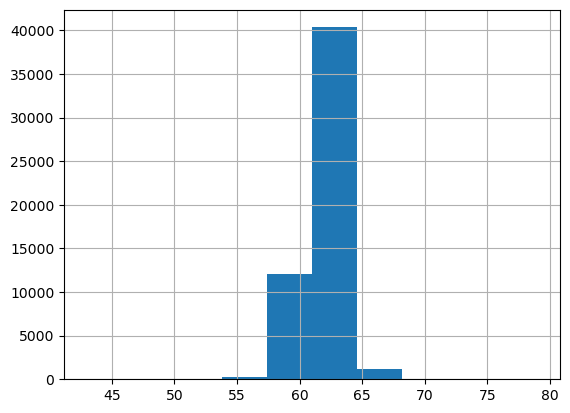

Some quick histograms

Histograms allow us to look at the distribution of the data. The df.colname.hist() function allows us to create quick histograms (or column charts in case of categorical variables).

Visualization using Matplotlib is covered in a different chapter.

# A quick histogram

df.carat.hist();

df.depth.hist();

df.cut.hist();

# All together

df.hist(figsize=(16,10));

Calculate range

# Let us calculate the range manually

df.depth.max() - df.depth.min()

36.0

Covariance and correlations

# Let us do the covariance matrix, which is a one-liner with pandas

df.cov()

| carat | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|

| carat | 0.224687 | 0.019167 | 0.192365 | 1.742765e+03 | 0.518484 | 0.515248 | 0.318917 |

| depth | 0.019167 | 2.052404 | -0.946840 | -6.085371e+01 | -0.040641 | -0.048009 | 0.095968 |

| table | 0.192365 | -0.946840 | 4.992948 | 1.133318e+03 | 0.489643 | 0.468972 | 0.237996 |

| price | 1742.765364 | -60.853712 | 1133.318064 | 1.591563e+07 | 3958.021491 | 3943.270810 | 2424.712613 |

| x | 0.518484 | -0.040641 | 0.489643 | 3.958021e+03 | 1.258347 | 1.248789 | 0.768487 |

| y | 0.515248 | -0.048009 | 0.468972 | 3.943271e+03 | 1.248789 | 1.304472 | 0.767320 |

| z | 0.318917 | 0.095968 | 0.237996 | 2.424713e+03 | 0.768487 | 0.767320 | 0.498011 |

# Now the correlation matrix - another one-liner

df.corr()

| carat | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|

| carat | 1.000000 | 0.028224 | 0.181618 | 0.921591 | 0.975094 | 0.951722 | 0.953387 |

| depth | 0.028224 | 1.000000 | -0.295779 | -0.010647 | -0.025289 | -0.029341 | 0.094924 |

| table | 0.181618 | -0.295779 | 1.000000 | 0.127134 | 0.195344 | 0.183760 | 0.150929 |

| price | 0.921591 | -0.010647 | 0.127134 | 1.000000 | 0.884435 | 0.865421 | 0.861249 |

| x | 0.975094 | -0.025289 | 0.195344 | 0.884435 | 1.000000 | 0.974701 | 0.970772 |

| y | 0.951722 | -0.029341 | 0.183760 | 0.865421 | 0.974701 | 1.000000 | 0.952006 |

| z | 0.953387 | 0.094924 | 0.150929 | 0.861249 | 0.970772 | 0.952006 | 1.000000 |

# We can also calculate the correlations individually between given variables

df[['carat', 'depth']].corr()

| carat | depth | |

|---|---|---|

| carat | 1.000000 | 0.028224 |

| depth | 0.028224 | 1.000000 |

# We can create a heatmap of correlations

plt.figure(figsize = (8,8))

sns.heatmap(df.corr(), annot=True);

plt.show()

# We can calculate phi-k correlations as well

import phik

X = df.phik_matrix()

X

interval columns not set, guessing: ['carat', 'depth', 'table', 'price', 'x', 'y', 'z']

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| carat | 1.000000 | 0.270726 | 0.261376 | 0.320729 | 0.093835 | 0.127877 | 0.860178 | 0.885596 | 0.685737 | 0.821934 |

| cut | 0.270726 | 1.000000 | 0.057308 | 0.229186 | 0.604758 | 0.441720 | 0.220674 | 0.237591 | 0.131938 | 0.115199 |

| color | 0.261376 | 0.057308 | 1.000000 | 0.146758 | 0.040634 | 0.039959 | 0.183244 | 0.238246 | 0.191040 | 0.140158 |

| clarity | 0.320729 | 0.229186 | 0.146758 | 1.000000 | 0.154796 | 0.148489 | 0.295205 | 0.435204 | 0.419662 | 0.425129 |

| depth | 0.093835 | 0.604758 | 0.040634 | 0.154796 | 1.000000 | 0.362929 | 0.064652 | 0.124055 | 0.073533 | 0.097474 |

| table | 0.127877 | 0.441720 | 0.039959 | 0.148489 | 0.362929 | 1.000000 | 0.115604 | 0.187285 | 0.190942 | 0.121229 |

| price | 0.860178 | 0.220674 | 0.183244 | 0.295205 | 0.064652 | 0.115604 | 1.000000 | 0.755270 | 0.714089 | 0.656248 |

| x | 0.885596 | 0.237591 | 0.238246 | 0.435204 | 0.124055 | 0.187285 | 0.755270 | 1.000000 | 0.822881 | 0.882911 |

| y | 0.685737 | 0.131938 | 0.191040 | 0.419662 | 0.073533 | 0.190942 | 0.714089 | 0.822881 | 1.000000 | 0.816241 |

| z | 0.821934 | 0.115199 | 0.140158 | 0.425129 | 0.097474 | 0.121229 | 0.656248 | 0.882911 | 0.816241 | 1.000000 |

sns.heatmap(X, annot=True);

Detailed Phi-k correlation report

from phik import report

phik.report.correlation_report(df)

Quantiles to analyze the distribution

# Calculating quantiles

# Here we calculate the 30th quantile

df.quantile(0.30)

carat 0.42

depth 61.20

table 56.00

price 1087.00

x 4.82

y 4.83

z 2.98

Name: 0.3, dtype: float64

# Calculating multiple quantiles

df.quantile([.1,.3,.5,.75])

| carat | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|

| 0.10 | 0.31 | 60.0 | 55.0 | 646.00 | 4.36 | 4.36 | 2.69 |

| 0.30 | 0.42 | 61.2 | 56.0 | 1087.00 | 4.82 | 4.83 | 2.98 |

| 0.50 | 0.70 | 61.8 | 57.0 | 2401.00 | 5.70 | 5.71 | 3.53 |

| 0.75 | 1.04 | 62.5 | 59.0 | 5324.25 | 6.54 | 6.54 | 4.04 |

Z-scores

# Z-scores for two of the columns (x - mean(x))/std(x)

from scipy.stats import zscore

zscores = zscore(df[['carat', 'depth']])

# Verify z-scores have mean of 0 and standard deviation of 1:

print('Z-scores: \n', zscores, '\n')

print('Mean is: ', zscores.mean(axis = 0), '\n')

print('Std Deviation is: ', zscores.std(axis = 0), '\n')

Z-scores:

carat depth

0 -1.198168 -0.174092

1 -1.240361 -1.360738

2 -1.198168 -3.385019

3 -1.071587 0.454133

4 -1.029394 1.082358

... ... ...

53935 -0.164427 -0.662711

53936 -0.164427 0.942753

53937 -0.206621 0.733344

53938 0.130927 -0.523105

53939 -0.101137 0.314528

[53940 rows x 2 columns]

Mean is: carat 2.889982e-14

depth -3.658830e-15

dtype: float64

Std Deviation is: carat 1.000009

depth 1.000009

dtype: float64

Dataframe information

# Look at some dataframe information

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 53940 entries, 0 to 53939

Data columns (total 10 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 carat 53940 non-null float64

1 cut 53940 non-null category

2 color 53940 non-null category

3 clarity 53940 non-null category

4 depth 53940 non-null float64

5 table 53940 non-null float64

6 price 53940 non-null int64

7 x 53940 non-null float64

8 y 53940 non-null float64

9 z 53940 non-null float64

dtypes: category(3), float64(6), int64(1)

memory usage: 3.0 MB

Names of columns

# Column names

df.columns

Index(['carat', 'cut', 'color', 'clarity', 'depth', 'table', 'price', 'x', 'y',

'z'],

dtype='object')

Other useful functions

Sort:

df.sort_values(['price', 'table'], ascending = [False, True]).head()

Unique values:df.cut.unique()

Count of unique values:df.cut.nunique()

Value Counts:df.cut.value_counts()

Take a sample from a dataframe:diamonds.sample(4)(or n=4)

Rename columns:df.rename(columns = {'price':'dollars'}, inplace = True)

Split-Apply-Combine

The phrase Split-Apply-Combine was made popular by Hadley Wickham, who is the author of the popular dplyr package in R. His original paper on the topic can be downloaded at https://www.jstatsoft.org/article/download/v040i01/468

Conceptually, it involves:

- Splitting the data into sub-groups based on some filtering criteria

- Applying a function to each sub-group and obtaining a result

- Combining the results into one single dataframe.

Split-Apply-Combine does not represent three separate steps in data analysis, but a way to think about solving problems by breaking them up into manageable pieces, operate on each piece independently, and put all the pieces back together.

In Python, the Split-Apply-Combine operations are implemented using different functions such as pivot, pivot_table, crosstab, groupby and possibly others.

Ref: http://www.jstatsoft.org/v40/i01/

Stack

Even though stack and unstack do not pivot data, they reshape a data in a fundamental way that deserves a reference alongside the standard split-apply-combine techniques.

What stack does is to completely flatten out a dataframe by bringing all columns down against the index. The index becomes a multi-level index, and all the columns show up against every single row.

The result is a pandas series, with as many rows as the rows times columns in the original dataset.

You can then move the index into the columns of a dataframe by doing reset_index().

Let us first consider a simpler dataframe with just a few entries.

Example 1

df = pd.DataFrame([[9, 10], [14, 30]],

index=['cat', 'dog'],

columns=['weight-lbs', 'height-in'])

df

| weight-lbs | height-in | |

|---|---|---|

| cat | 9 | 10 |

| dog | 14 | 30 |

df.stack()

cat weight-lbs 9

height-in 10

dog weight-lbs 14

height-in 30

dtype: int64

# Convert this to a dataframe

pd.DataFrame(df.stack()).reset_index().rename({'level_0': 'animal', 'level_1':'measure', 0: 'value'}, axis=1)

| animal | measure | value | |

|---|---|---|---|

| 0 | cat | weight-lbs | 9 |

| 1 | cat | height-in | 10 |

| 2 | dog | weight-lbs | 14 |

| 3 | dog | height-in | 30 |

type(df.stack())

pandas.core.series.Series

df.stack().index

MultiIndex([('cat', 'weight-lbs'),

('cat', 'height-in'),

('dog', 'weight-lbs'),

('dog', 'height-in')],

)

Example 2:

Now we look at a larger dataframe.

import statsmodels.api as sm

iris = sm.datasets.get_rdataset('iris').data

# Let us look at the original data before we stack it

iris

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

| ... | ... | ... | ... | ... | ... |

| 145 | 6.7 | 3.0 | 5.2 | 2.3 | virginica |

| 146 | 6.3 | 2.5 | 5.0 | 1.9 | virginica |

| 147 | 6.5 | 3.0 | 5.2 | 2.0 | virginica |

| 148 | 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 149 | 5.9 | 3.0 | 5.1 | 1.8 | virginica |

150 rows × 5 columns

iris.stack()

0 Sepal.Length 5.1

Sepal.Width 3.5

Petal.Length 1.4

Petal.Width 0.2

Species setosa

...

149 Sepal.Length 5.9

Sepal.Width 3.0

Petal.Length 5.1

Petal.Width 1.8

Species virginica

Length: 750, dtype: object

We had 150 rows and 5 columns in our original dataset, and we would therefore expect to have 150*5 = 750 items in our stacked series. Which we can verify.

iris.shape[0] * iris.shape[1]

750

Example 3:

We stack the mtcars dataset.

mtcars = sm.datasets.get_rdataset('mtcars').data

mtcars

| mpg | cyl | disp | hp | drat | wt | qsec | vs | am | gear | carb | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Mazda RX4 | 21.0 | 6 | 160.0 | 110 | 3.90 | 2.620 | 16.46 | 0 | 1 | 4 | 4 |

| Mazda RX4 Wag | 21.0 | 6 | 160.0 | 110 | 3.90 | 2.875 | 17.02 | 0 | 1 | 4 | 4 |

| Datsun 710 | 22.8 | 4 | 108.0 | 93 | 3.85 | 2.320 | 18.61 | 1 | 1 | 4 | 1 |

| Hornet 4 Drive | 21.4 | 6 | 258.0 | 110 | 3.08 | 3.215 | 19.44 | 1 | 0 | 3 | 1 |

| Hornet Sportabout | 18.7 | 8 | 360.0 | 175 | 3.15 | 3.440 | 17.02 | 0 | 0 | 3 | 2 |

| Valiant | 18.1 | 6 | 225.0 | 105 | 2.76 | 3.460 | 20.22 | 1 | 0 | 3 | 1 |

| Duster 360 | 14.3 | 8 | 360.0 | 245 | 3.21 | 3.570 | 15.84 | 0 | 0 | 3 | 4 |

| Merc 240D | 24.4 | 4 | 146.7 | 62 | 3.69 | 3.190 | 20.00 | 1 | 0 | 4 | 2 |

| Merc 230 | 22.8 | 4 | 140.8 | 95 | 3.92 | 3.150 | 22.90 | 1 | 0 | 4 | 2 |

| Merc 280 | 19.2 | 6 | 167.6 | 123 | 3.92 | 3.440 | 18.30 | 1 | 0 | 4 | 4 |

| Merc 280C | 17.8 | 6 | 167.6 | 123 | 3.92 | 3.440 | 18.90 | 1 | 0 | 4 | 4 |

| Merc 450SE | 16.4 | 8 | 275.8 | 180 | 3.07 | 4.070 | 17.40 | 0 | 0 | 3 | 3 |

| Merc 450SL | 17.3 | 8 | 275.8 | 180 | 3.07 | 3.730 | 17.60 | 0 | 0 | 3 | 3 |

| Merc 450SLC | 15.2 | 8 | 275.8 | 180 | 3.07 | 3.780 | 18.00 | 0 | 0 | 3 | 3 |

| Cadillac Fleetwood | 10.4 | 8 | 472.0 | 205 | 2.93 | 5.250 | 17.98 | 0 | 0 | 3 | 4 |

| Lincoln Continental | 10.4 | 8 | 460.0 | 215 | 3.00 | 5.424 | 17.82 | 0 | 0 | 3 | 4 |

| Chrysler Imperial | 14.7 | 8 | 440.0 | 230 | 3.23 | 5.345 | 17.42 | 0 | 0 | 3 | 4 |

| Fiat 128 | 32.4 | 4 | 78.7 | 66 | 4.08 | 2.200 | 19.47 | 1 | 1 | 4 | 1 |

| Honda Civic | 30.4 | 4 | 75.7 | 52 | 4.93 | 1.615 | 18.52 | 1 | 1 | 4 | 2 |

| Toyota Corolla | 33.9 | 4 | 71.1 | 65 | 4.22 | 1.835 | 19.90 | 1 | 1 | 4 | 1 |

| Toyota Corona | 21.5 | 4 | 120.1 | 97 | 3.70 | 2.465 | 20.01 | 1 | 0 | 3 | 1 |

| Dodge Challenger | 15.5 | 8 | 318.0 | 150 | 2.76 | 3.520 | 16.87 | 0 | 0 | 3 | 2 |

| AMC Javelin | 15.2 | 8 | 304.0 | 150 | 3.15 | 3.435 | 17.30 | 0 | 0 | 3 | 2 |

| Camaro Z28 | 13.3 | 8 | 350.0 | 245 | 3.73 | 3.840 | 15.41 | 0 | 0 | 3 | 4 |

| Pontiac Firebird | 19.2 | 8 | 400.0 | 175 | 3.08 | 3.845 | 17.05 | 0 | 0 | 3 | 2 |

| Fiat X1-9 | 27.3 | 4 | 79.0 | 66 | 4.08 | 1.935 | 18.90 | 1 | 1 | 4 | 1 |

| Porsche 914-2 | 26.0 | 4 | 120.3 | 91 | 4.43 | 2.140 | 16.70 | 0 | 1 | 5 | 2 |

| Lotus Europa | 30.4 | 4 | 95.1 | 113 | 3.77 | 1.513 | 16.90 | 1 | 1 | 5 | 2 |

| Ford Pantera L | 15.8 | 8 | 351.0 | 264 | 4.22 | 3.170 | 14.50 | 0 | 1 | 5 | 4 |

| Ferrari Dino | 19.7 | 6 | 145.0 | 175 | 3.62 | 2.770 | 15.50 | 0 | 1 | 5 | 6 |

| Maserati Bora | 15.0 | 8 | 301.0 | 335 | 3.54 | 3.570 | 14.60 | 0 | 1 | 5 | 8 |

| Volvo 142E | 21.4 | 4 | 121.0 | 109 | 4.11 | 2.780 | 18.60 | 1 | 1 | 4 | 2 |

mtcars.stack()

Mazda RX4 mpg 21.0

cyl 6.0

disp 160.0

hp 110.0

drat 3.9

...

Volvo 142E qsec 18.6

vs 1.0

am 1.0

gear 4.0

carb 2.0

Length: 352, dtype: float64

Unstack

Unstack is the same as the stack of the transpose of a dataframe.

So you flip the rows and columns of a database, and you then do a stack.

mtcars.transpose()

| Mazda RX4 | Mazda RX4 Wag | Datsun 710 | Hornet 4 Drive | Hornet Sportabout | Valiant | Duster 360 | Merc 240D | Merc 230 | Merc 280 | ... | AMC Javelin | Camaro Z28 | Pontiac Firebird | Fiat X1-9 | Porsche 914-2 | Lotus Europa | Ford Pantera L | Ferrari Dino | Maserati Bora | Volvo 142E | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| mpg | 21.00 | 21.000 | 22.80 | 21.400 | 18.70 | 18.10 | 14.30 | 24.40 | 22.80 | 19.20 | ... | 15.200 | 13.30 | 19.200 | 27.300 | 26.00 | 30.400 | 15.80 | 19.70 | 15.00 | 21.40 |

| cyl | 6.00 | 6.000 | 4.00 | 6.000 | 8.00 | 6.00 | 8.00 | 4.00 | 4.00 | 6.00 | ... | 8.000 | 8.00 | 8.000 | 4.000 | 4.00 | 4.000 | 8.00 | 6.00 | 8.00 | 4.00 |

| disp | 160.00 | 160.000 | 108.00 | 258.000 | 360.00 | 225.00 | 360.00 | 146.70 | 140.80 | 167.60 | ... | 304.000 | 350.00 | 400.000 | 79.000 | 120.30 | 95.100 | 351.00 | 145.00 | 301.00 | 121.00 |