Deep Learning

AI, Machine Learning and Deep Learning

Artificial Intelligence is the widest categorization of analytical methods that aim to automate intellectual tasks normally performed by humans. Machine learning can be considered to be a sub-set of the wider AI domain where a system is trained rather than explicitly programmed. It’s presented with many examples relevant to a task, and it finds statistical structure in these examples that eventually allows the system to come up with rules for automating the task.

The "deep" in deep learning is not a reference to any kind of deeper or intuitive understanding of data achieved by the approach. It simply stands for the idea of successive layers of representation of data. The number of layers in a model of the data is called the depth of the model.

Modern deep learning may involve tens or even hundreds of successive layers of representations. Each layer has parameters that have been ‘learned’ (or optimized) from the training data. These layered representations are encapsulated in models termed neural networks, quite literally layers of data arrays stacked on top of each other.

Deep Learning, ML and AI: Deep learning is a specific subfield of machine learning: learning from data that puts an emphasis on learning successive layers of increasingly meaningful representations. The deep in deep stands for this idea of successive layers of representations. Deep learning is used extensively for problems of perception – vision, speech and language.

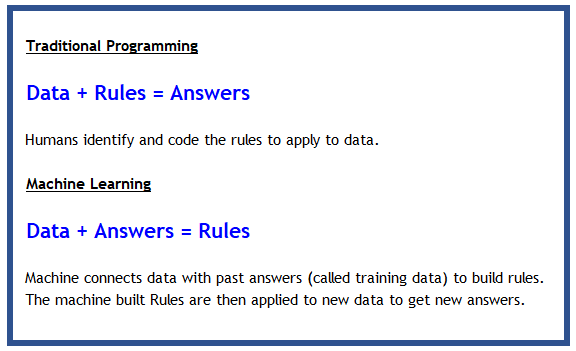

The below graphic explains the difference between traditional programming and machine learning (which includes deep learning).

A First Neural Net

Before we dive into more detail, let us build our first neural net with Tensorflow & Keras. This will help place in context the explanations that are provided later. Do not worry if not everything in the example makes sense yet, the goal is to get a high level view before we look at the more interesting stuff.

Diamond price prediction

We will use our diamonds dataset again, and try to predict the price of a diamond with the dataset. We load the data, and split it into training and test sets. In fact, we follow the regular machine learning workflow that we repeatedly followed in the prior chapter.

As always, some library imports first...

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, Dropout, Input

from tensorflow.keras import regularizers

from tensorflow.keras.utils import to_categorical

import numpy as np

import pandas as pd

import statsmodels.api as sm

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn import datasets

from sklearn.metrics import mean_absolute_error, mean_squared_error

import sklearn.preprocessing as preproc

from sklearn.metrics import confusion_matrix, accuracy_score, classification_report, ConfusionMatrixDisplay

from sklearn import metrics

from sklearn.model_selection import train_test_split

## Load data

diamonds = sns.load_dataset("diamonds")

## Let us examine the dataset

diamonds

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | Ideal | E | SI2 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 |

| 1 | 0.21 | Premium | E | SI1 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 |

| 2 | 0.23 | Good | E | VS1 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 |

| 3 | 0.29 | Premium | I | VS2 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 |

| 4 | 0.31 | Good | J | SI2 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 53935 | 0.72 | Ideal | D | SI1 | 60.8 | 57.0 | 2757 | 5.75 | 5.76 | 3.50 |

| 53936 | 0.72 | Good | D | SI1 | 63.1 | 55.0 | 2757 | 5.69 | 5.75 | 3.61 |

| 53937 | 0.70 | Very Good | D | SI1 | 62.8 | 60.0 | 2757 | 5.66 | 5.68 | 3.56 |

| 53938 | 0.86 | Premium | H | SI2 | 61.0 | 58.0 | 2757 | 6.15 | 6.12 | 3.74 |

| 53939 | 0.75 | Ideal | D | SI2 | 62.2 | 55.0 | 2757 | 5.83 | 5.87 | 3.64 |

53940 rows × 10 columns

## Get dummy variables

diamonds = pd.get_dummies(diamonds)

diamonds.head()

| carat | depth | table | price | x | y | z | cut_Ideal | cut_Premium | cut_Very Good | ... | color_I | color_J | clarity_IF | clarity_VVS1 | clarity_VVS2 | clarity_VS1 | clarity_VS2 | clarity_SI1 | clarity_SI2 | clarity_I1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 | True | False | False | ... | False | False | False | False | False | False | False | False | True | False |

| 1 | 0.21 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 | False | True | False | ... | False | False | False | False | False | False | False | True | False | False |

| 2 | 0.23 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 | False | False | False | ... | False | False | False | False | False | True | False | False | False | False |

| 3 | 0.29 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 | False | True | False | ... | True | False | False | False | False | False | True | False | False | False |

| 4 | 0.31 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 | False | False | False | ... | False | True | False | False | False | False | False | False | True | False |

5 rows × 27 columns

## Define X and y as arrays. y is the price column, X is everything else

X = diamonds.loc[:, diamonds.columns != 'price'].values

y = diamonds.price.values

## Train test split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

# A step to convert the arrays to floats

X_train = X_train.astype('float')

X_test = X_test.astype('float')

Next, we build a model

We created a model using Layers.

- A ‘Layer’ is a fundamental building block that takes an array, or a ‘tensor’ as an input, performs some calculations, and provides an output. Layers generally have weights, or parameters. Some layers are ‘stateless’, in that they do not have weights (eg, the flatten layer).

- Layers were arranged sequentially in our model. The output of a layer becomes the input for the next layer. Because layers will accept an input of only a certain shape, the layers need to be compatible.

- The arrangement of the layers defines the architecture of our model.

## Now we build our model

model = keras.Sequential() #Instantiate the model

model.add(Input(shape=(X_train.shape[1],))) ## INPUT layer

model.add(Dense(200, activation = 'relu')) ## Hidden layer 1

model.add(Dense(200, activation = 'relu')) ## Hidden layer 2

model.add(Dense(200, activation = 'relu')) ## Hidden layer 3

model.add(Dense(200, activation = 'relu')) ## Hidden layer 4

model.add(Dense(1, activation = 'linear')) ## OUTPUT layer

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 200) 5400

dense_1 (Dense) (None, 200) 40200

dense_2 (Dense) (None, 200) 40200

dense_3 (Dense) (None, 200) 40200

dense_4 (Dense) (None, 1) 201

=================================================================

Total params: 126201 (492.97 KB)

Trainable params: 126201 (492.97 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

Compile the model.

Next, we compile() the model. The compile step configures the learning process. As part of this step, we define at least three more things:

- The Loss function/Objective function,

- Optimizer, and

- Metrics.

model.compile(loss = 'mean_squared_error', optimizer = 'adam', metrics = ['mean_squared_error'])

Finally, we fit() the model.

This is where the training loop runs. It needs the data, the number of epochs, and the batch size for mini-batch gradient descent.

history = model.fit(X_train, y_train, epochs = 3, batch_size = 128, validation_split = 0.2)

Epoch 1/3

270/270 [==============================] - 1s 3ms/step - loss: 14562129.0000 - mean_squared_error: 14562129.0000 - val_loss: 3446444.2500 - val_mean_squared_error: 3446444.2500

Epoch 2/3

270/270 [==============================] - 1s 3ms/step - loss: 1964322.2500 - mean_squared_error: 1964322.2500 - val_loss: 1639353.7500 - val_mean_squared_error: 1639353.7500

Epoch 3/3

270/270 [==============================] - 1s 3ms/step - loss: 1265392.1250 - mean_squared_error: 1265392.1250 - val_loss: 1009011.9375 - val_mean_squared_error: 1009011.9375

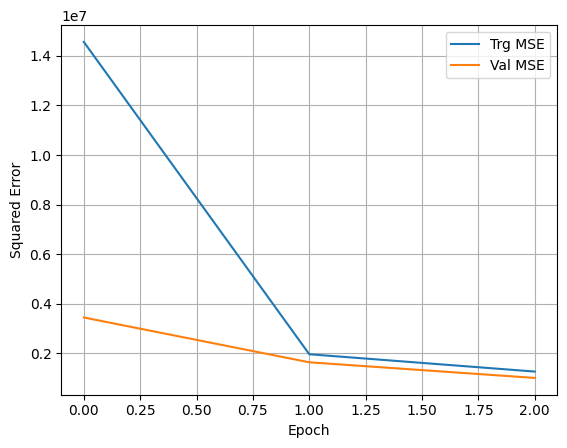

Notice that when fitting the model, we assigned the fitting process to a variable called history. This helps us capture the training metrics and plot them afterwards. We do that next.

Now we can plot the training and validation MSE

plt.plot(history.history['mean_squared_error'], label='Trg MSE')

plt.plot(history.history['val_mean_squared_error'], label='Val MSE')

plt.xlabel('Epoch')

plt.ylabel('Squared Error')

plt.legend()

plt.grid(True)

X_test

array([[ 0.51, 61. , 57. , ..., 0. , 0. , 0. ],

[ 0.24, 61.8 , 56. , ..., 0. , 0. , 0. ],

[ 0.42, 61.5 , 55. , ..., 0. , 0. , 0. ],

...,

[ 0.9 , 63.8 , 61. , ..., 1. , 0. , 0. ],

[ 2.26, 63.2 , 58. , ..., 0. , 1. , 0. ],

[ 0.5 , 61.3 , 57. , ..., 0. , 0. , 0. ]])

## Perform predictions

y_pred = model.predict(X_test)

338/338 [==============================] - 0s 951us/step

## With the predictions in hand, we can calculate RMSE and other evaluation metrics

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 874640.7129841921

RMSE = 935.2222799870585

MAE = 493.7015598142947

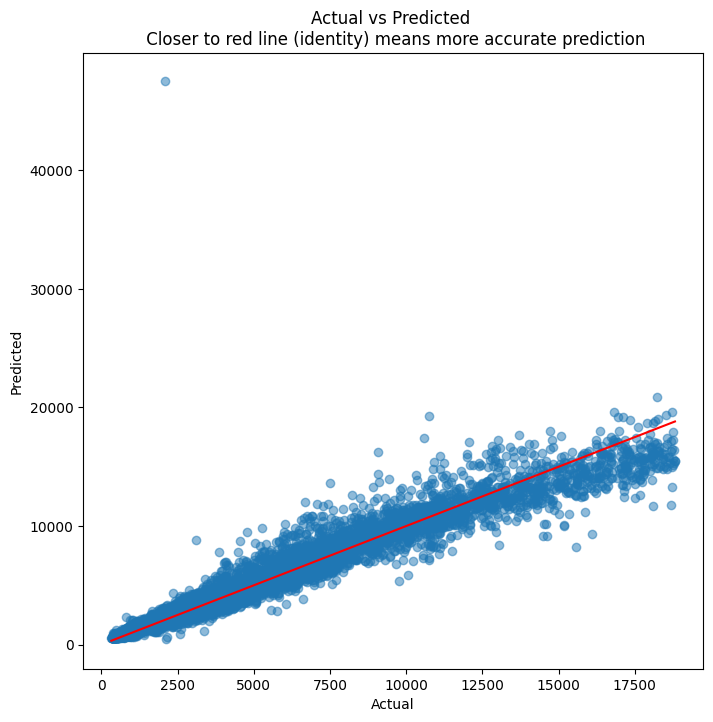

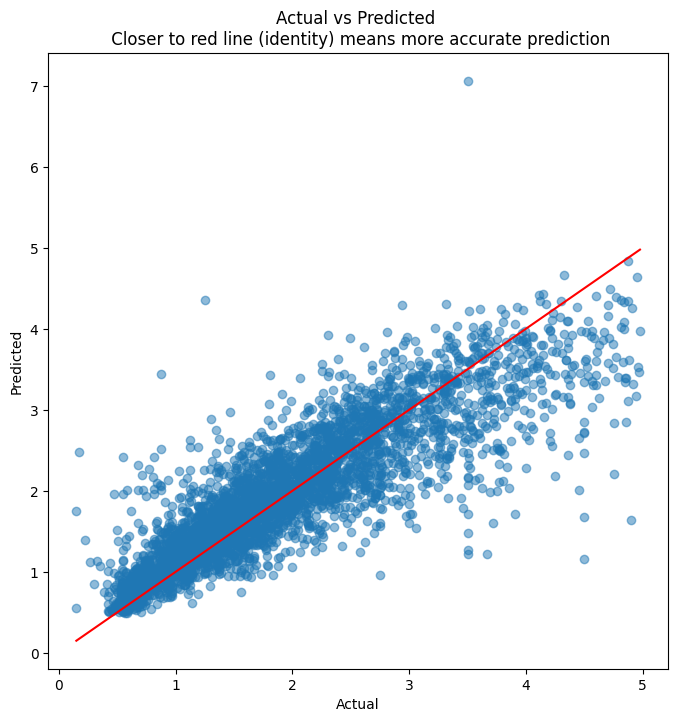

## Next, we scatterplot the actuals against the predictions

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted \n Closer to red line (identity) means more accurate prediction')

plt.plot( [y_test.min(),y_test.max()],[y_test.min(),y_test.max()], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

## R-squared calculation

pd.DataFrame({'actual':y_test, 'predicted':y_pred.ravel()}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.945001 |

| predicted | 0.945001 | 1.000000 |

Understanding Neural Networks

Understanding the structure

A neural net is akin to a mechanism that can model any type of function. So given any input, and a set of labels/outputs, we can ‘train the network’ to produce the output we desire.

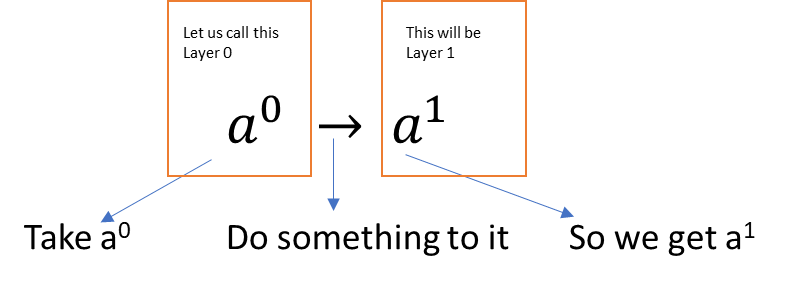

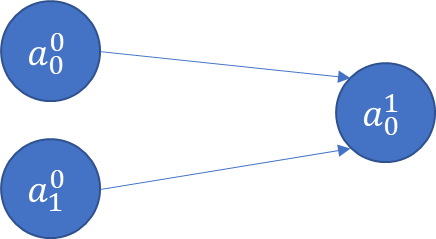

Imagine a simple case where there is an input and an output . (The superscript indicates the layer number, and is not the same as a^n!)

In other words, we have a transformation to perform. One way to do this is using a scalar weight w, and a bias term b.

- is the activation function (more on this later)

- w is the weight

- b is the bias

The question for us is: How can we derive the values of and as to get the correct value for the output?

Now imagine there are two input variables (or two features)

If we have more inputs/features, say , then generally:

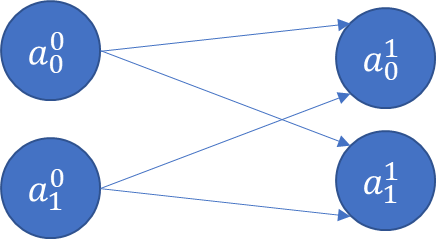

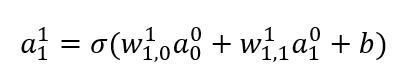

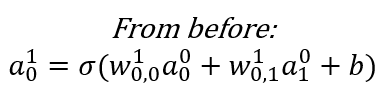

Now consider a situation where there is an extra output (eg, multiple categories).

Hidden Layers

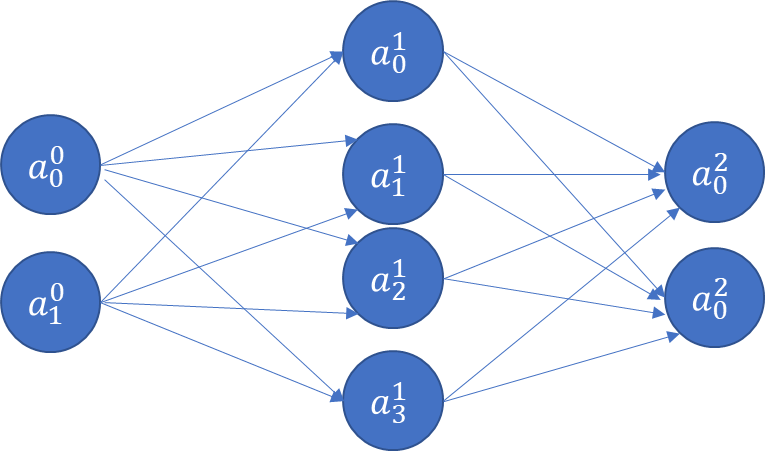

So far we have seen the input layer (called Layer 0), and the output layer (Layer 1). We started with one input, one output, and went to two inputs and two outputs.

At this point, we have 4 weights, and 2 bias terms. We also don’t know what their values should be. Next, let us think about adding a new hidden layer between the input and the output layers!

The above is a fully connected network, because each node is connected with every node in the subsequent layer. ‘Connected’ means the node contributes to the calculation of the subsequent node in the network.

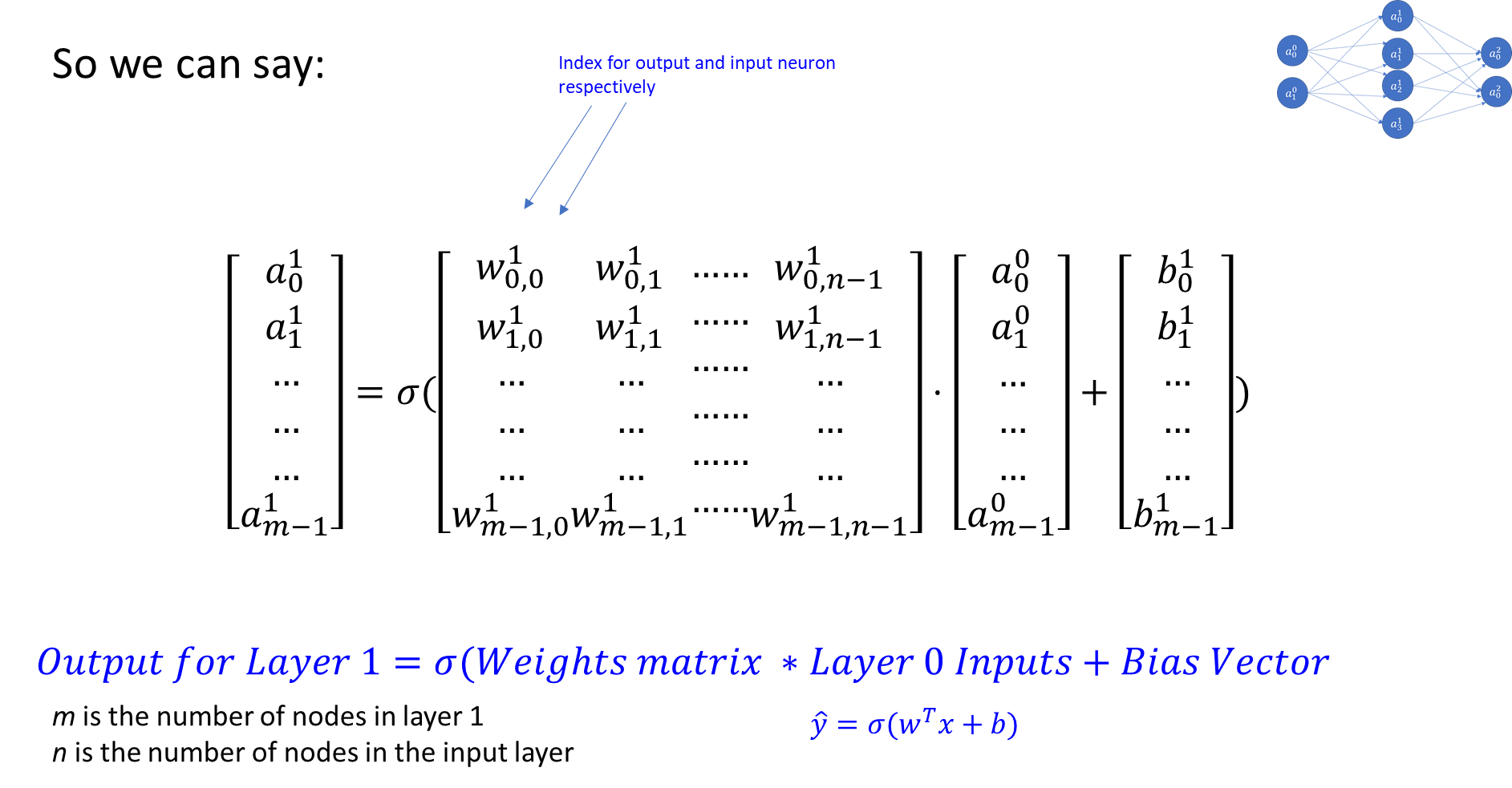

The value of each node (other than the input layer which is provided to us) can be calculated by a generalization of the formula below. Fortunately, all the weights for a Layer can be captured as a weights matrix, and all the biases can also be captured as a vector. That makes the math expression very concise.

An Example Calculation

Generalizing the Calculation

In fact, not only can we combine all the weights and bias for a single layer into a convenient matrix representation, we can also combine all the weights and biases for all the layers in a network into a single weights matrix, and a bias matrix.

The arrangement of the weights and biases matrices, that is, the manner in which the dimensions are chosen, and the weights/biases are recorded inside the matrix, is done in a way that they can simply be multiplied to get the final results.

This reduces the representation of the network’s equation to: , where w is the weights matrix, b is the bias matrix, and \sigma is the activation function. (y-hat is the prediction, and stands for transpose of the weights matrix. The transposition is generally required given the traditional way of writing the weights matrix.)

Activation Function

So far all we have covered is that in a neural net, every subsequent layer is a function of weights, bias, and something that is called an activation function.

Before the function is applied, the math is linear, and the equation similar to the one for regression (compare to ).

An activation function is applied to the linear output of a layer to obtain a non-linear output. The ability to obtain non-linear results significantly expands the nature of problems that can be solved, as decision boundaries of any shape can be modeled. There are many different choices of activation functions, and a choice is generally made based on the use case.

The below are the most commonly used activation functions.

You can find many other specialized activation functions in other textbooks, and also on Wikipedia.

The main function of activation functions is to allow non-linearity into the outputs, increasing significantly the flexibility of the patterns that can be modeled by a neural network.

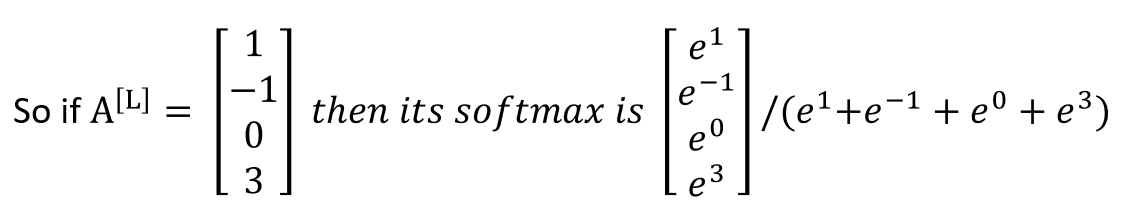

Softmax Activation

The softmax activation takes a vector and raises to the power of each of its elements. This has the effect of making everything a positive number.

If we want probabilities, then we can divide each of the elements by the sum of the elements, ie by dividing the softmax by .

A vector obtained from a softmax operations will have probabilities that add to 1.

A HARDMAX will be identical to a softmax except that all entries will be zero except one which will be equal to 1.

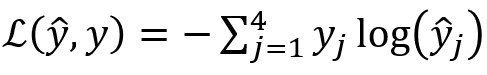

Loss Calculation for Softmax

In this case, the loss for the above will be calculated as:

Here you have only the second category, ie , the rest are zero.

So effectively the loss reduces to .

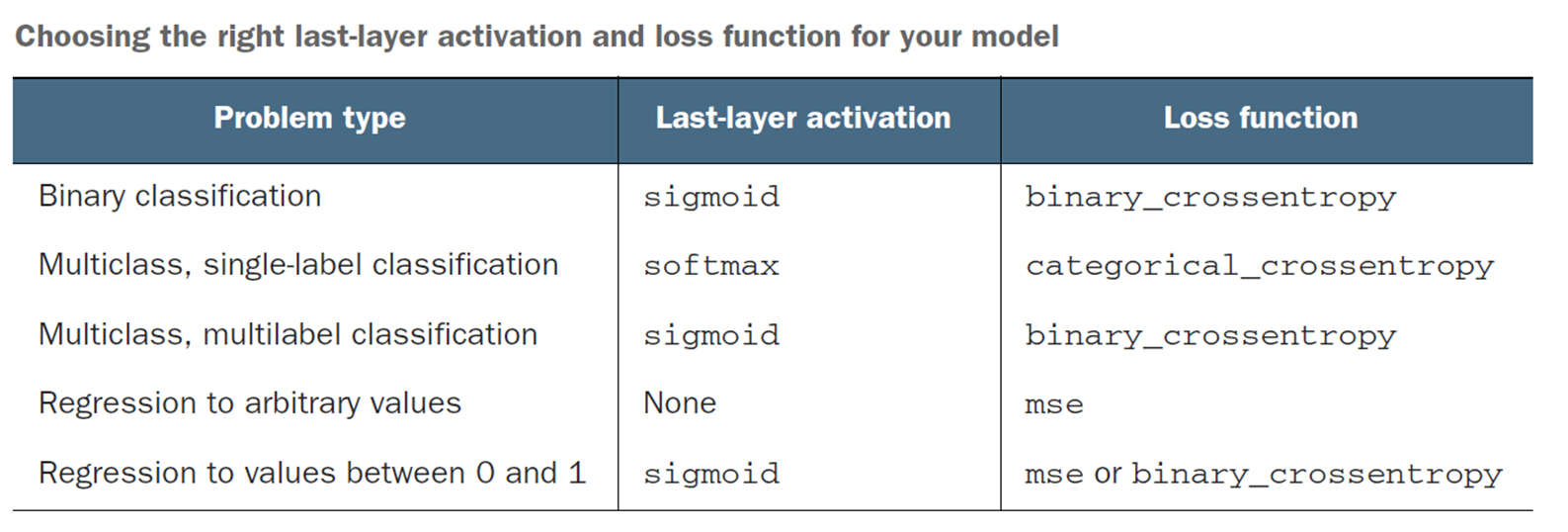

Which Activation Function to Use?

Mostly always RELU for hidden layers.

The last layer’s activation function must match your use case, and give you an answer in the shape you desire your output. So SIGMOID would not be a useful activation function for a regression problem (eg, home prices).

Source: Deep Learning with Python, François Chollet, Manning Publications

Compiling a Model

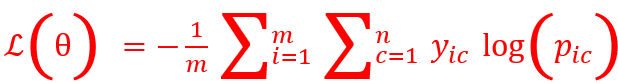

Compiling a model means configures it for training. As part of compiling the model, you specify the loss function, the metrics and the optimizer to use. These are provided as three parameters:

- Optimizer: Use rmsprop, and leave the default learning rate. (You can also try adam if rmsprop errors out.)

- Loss: Generally mse for regression problems, and binary_crossentropy/ categorical_crossentropy for binary and multiclass classification problems respectively.

- Categorical Cross Entropy loss is given by

where i=1 to m are m observations, c= 1 to n are n classes, and is the predicted probability.

- If you have only two classes, ie binary classification, you get the loss

- Metrics: This can be accuracy for classification, and MAE or MSE for regression problems.

The difference between Metrics and Loss is that metrics are for humans to interpret, and need to be intuitive. Loss functions may use similar measures that are mathematically elegant, eg differentiable, continuous etc. Often they can be the same (eg MSE), but sometimes they can be different (eg, cross entropy for classification, but accuracy for humans).

Backpropagation

How do we get w and b?

So far, we have understood how a neural net is constructed, but how do we get the values of weights and biases so that the final layer gives us our desired output?

At this point, calculus comes to our rescue.

If y is our label/target, we want y-hat to be the same as (or as close as possible) to y.

The loss function that we seek to minimize is a function of , where is our prediction of , and is the true value/label. Know that is also known as , using the convention for nodes.

The Setup for Understanding Backprop

Let us consider a simple example of binary classification, with two features x_1 and x_2 in our feature vector X. There are two weights, w_1 and w_2, and a bias term b. Our output is a. In other words:

Our goal is to calculate a, or

, where

We use the sigmoid function as our activation function, and use the log-loss as our Loss function to optimize.

-

Activation Function: . (Remember, )

-

Loss Function: )

We need to minimize the loss function. We can minimize a function by calculating its derivative (and setting it equal to zero, etc)

Our loss function is a function of and . (Look again at the equations on the prior slide to confirm.)

If we can calculate the partial derivatives , and (or the Jacobian vector of partial derivatives), we can get to the minimum for our Loss function.

For the loss function for our example, the derivative of the log-loss function is (stated without proof, but can be easily derived using the chain rule).

That is an elegant derivative, easily computed. Since backpropagation uses the chain rule for derivatives, which ends up pulling in the activation function into the mix together with the loss function, it is important that activation functions be differentiable.

How it works

1. We start with random values for , and .

2. We figure out the formulas for , and .

3. For each observation in our training set, we calculate the value of the derivative for each , etc.

4. We average the derivatives for the observations to get the derivative for our entire training population.

5. We use this average derivative value to get the next better value of , and .

-

-

-

6. Where is the learning rate as we don’t want to go too fast and miss the minima.

7. Once we have better values of , and , we repeat this again.

Backpropagation

-

Perform iterations – a full forward pass followed by a backward pass is a single iteration.

-

For every iteration, you can use all the observations, or only a sample to speed up learning. The number of observations in an iteration is called batch size.

-

When all observations have completed an iteration, an epoch is said to have been completed.

That, in short, is how back-propagation works. Because it uses derivatives to arrive at the optimum value, it is also called gradient descent.

We considered a very simple two variable case, but even with larger networks and thousands of variables, the concept is the same.

Batch Sizes & Epochs

BATCH GRADIENT DESCENT

If you have m examples and pass all of them through the forward and backward pass simultaneously, it would be called BATCH GRADIENT DESCENT.

If m is very large, say 5 million observations, then the gradient descent process can become very slow.

MINI-BATCH GRADIENT DESCENT

A better strategy may be to divide the m observations into mini-batches of 1000 each so that we can start getting the benefit from gradient descent quickly.

So we can divide m into ‘t’ mini-batches and loop through the t batches one by one, and keep improving network performance with each mini-batch.

Mini batch sizes are generally powers of 2, eg 64 (2^6), 128, 256, 512, 1024 etc.

So if m is 5 million, and mini-batch size is 1000, t will be from 1 to 5000.

STOCHASTIC GRADIENT DESCENT

When mini-batch size is 1, it is called stochastic gradient descent, or SGD.

To sum up:

- When mini batch size = m, it is called BATCH GRADIENT DESCENT.

- When mini batch size = 1, it is called STOCHASTIC GRADIENT DESCENT.

- When mini batch size is between 1 and m, it is called MINI-BATCH GRADIENT DESCENT.

What is an EPOCH

An epoch is when the entire training dataset has been worked through the backpropagation algorithm. That is when a complete pass of the data has been completed through the backpropagation algorithm.

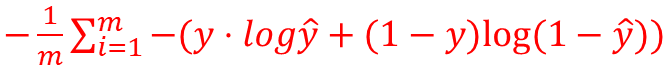

Learning Rate

We take small steps from our random starting point to the optimum value of the weights and biases. The step size is controlled by the learning rate (alpha). If the learning rate is too small, it will take very long for the training to complete. If the rate is large, we may miss the minima as we may step over it.

- Intuitively, what we want is large steps in the beginning, and slower steps as we get closer to the optimal point.

- We can do this by using a momentum term, which can make the move towards the op+timum faster.

- The momentum term is called beta, and it is in addition to the \alpha term.

- There are several optimization algorithms to choose from (eg ADAM, RMS Prop), and each may have its own implementation of beta.

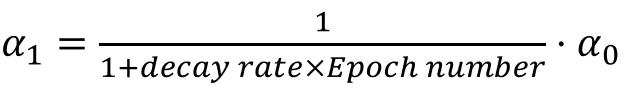

- We can also vary the learning rate by decaying it for each subsequent epoch, for example:

etc

Parameters and Hyperparameters

Parameters: weights and biases

Consider the network in the image. Each node’s output is , and represents a 'feature' for consumption by the next layer.

This feature is a new feature calculated as a synthetic combination of previous inputs using .

Each layer will have a weights vector , and a bias .

Let us pause a moment to think about how many weights and biases we need, and generally the ‘shape’ of our network.

- Layer 0 is the input layer. It will have m observations and n features.

- In the example network below, there are 2 features in our input layer.

- The 2 features join with 4 nodes in Layer 1. For each node in Layer 1, we need 2 weights and 1 bias term. Since there are 4 nodes in Layer 2, we will need 8 weights and 4 biases.

- For the output layer, each of the 2 nodes will have 4 weights, and 1 bias term, making for 8 weights and 2 bias parameters.

- For the entire network, we need to optimize 16 weights and 6 bias parameters. And this has to be done for every single observation in the training set for each epoch.

Hyperparameters

Hyperparameters for a network control several architectural aspects of a network.

The below are the key hyperparameters an analyst needs to think about:

- Learning rate (alpha)

- Mini-batch size

- Beta (momentum term)

- Number of hidden neurons in each layer

- Number of layers

There are other hyperparameters as well, and different hyperparameters for different network architectures.

All hyperparameters can be specified as part of the deep learning network.

Applied deep learning is a very empirical process, ie, requires a lot of trial and error.

Overfitting

The Problem of Overfitting

Optimization refers to the process of adjusting a model to get the best performance on training data.

Generalization refers to how well the model performs on data it has not seen before.

As part of optimization, the model will try to "memorize" the training data using the parameters it is allowed. If there is not a lot of data to train on, optimization may happen quickly, as patterns would be learned.

But such a model may have poor real world performance, while having exceptional training set performance. This problem is called ‘overfitting’.

Fighting Overfitting

There are several ways of dealing with overfitting:

- Get more training data: More training data means better generalization, and avoiding learning misleading patterns that only exist in the training data.

- Reduce the size of the network: By reducing layers and nodes in the network, we can reduce the ability of the network to overfit. Surprisingly, smaller networks can have better results than larger ones!

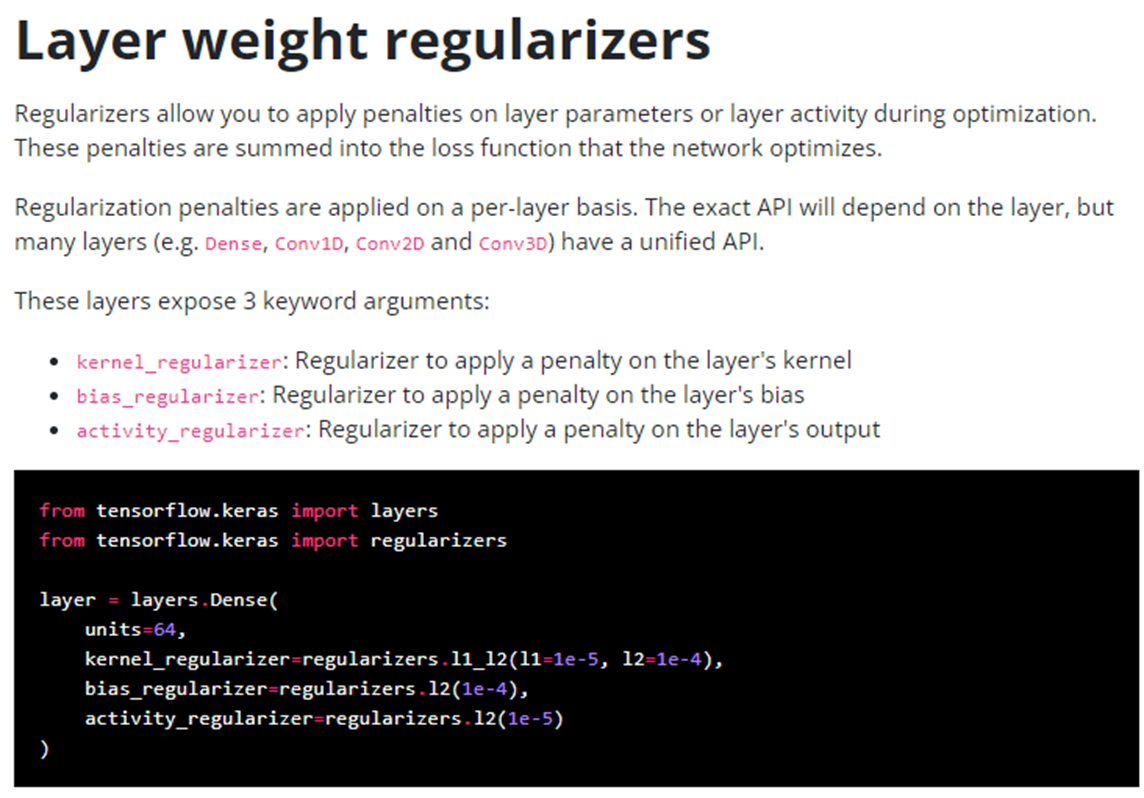

- Regularization: Penalize large weights, biases and activations.

Regularization of Networks

L2 Regularization

- In regularization, we add a term to our cost function as to create a penalty for large w vectors. This helps reduce variance (overfitting) by pushing w entries closer to zero.

- But regularization can increase bias, but often a balance can be struck.

- L2 regularization can cause ‘weight decay’, ie gradient descent shrinks the weights on each iteration.

In Keras, regularization can be specified as part of the layer parameters.

Source: https://keras.io/api/layers/regularizers/

Drop-out Regularization

With drop-out regularization, we drop, which means completely zero out many of a network’s nodes by setting their output to zero. We do this separately for each observation in the forward prop step. Which means in the same pass, different nodes would be deleted for each training example. This has the effect of reducing network size, hence reducing variance/overfitting.

Setting a node’s output to zero means eliminating an input into the next layer. Which means reducing features at random. Since inputs disappear at random, the weight gets spread out instead of relying upon one feature.

‘Keep-prob’ means how much of the network we keep. So 80% keep-prob means we drop 20%. You can have different keep-prob values for different layers.

One disadvantage of drop-out regularization is that the cost function becomes ill defined. And gradient descent does not work well.

So you can still optimize without drop-outs, and once all hyper-parameters have been optimized, switch to a drop-out version with the hope that the same hyper-parameters are still the best.

Drop-out regularization is implemented in Keras as a layer type.

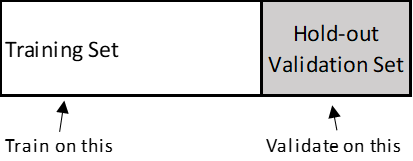

Training, Validation and Test Sets

In deep learning, data is split into 3 sets: training, validation and test.

- Train on training data, and evaluate on validation data.

- When ready for the real world, test it a final time on the test set

Why not just training and test sets? This is because developing a model always involves tuning its hyperparameters. This tuning happens on the validation set.

Doing it repeatedly can lead to overfitting to the validation set, even though the model never directly sees it. As you tweak the hyperparameters repeatedly, information leakage occurs where the algorithm starts to fit the model to do well on the validation set, with poor generalization.

Approaches to Validation

Two primary approaches:

- Simple hold-out validation: Useful if you have lots of data

- K-fold Validation: (in the illustration below, k is 4) – if you have less data

Data Pre-Processing for Neural Nets

All data must be expressed as tensors (another name for arrays) of floating point data. Not integers, not text. Neural networks:

- Do not like large numbers. Ideally, your data should be in the 0-1 range.

- Do not like heterogenous data. Data is heterogenous when one feature is in the range, say, 0-1, and another is in the range 0-100.

The above upset gradient updates, and the network may not converge or give you good results. Standard Scaling of the data can help avoid the above problems. As a default option – always standard scale your data.

Examples

California Housing - Deep Learning

Next, we try to predict home prices using the California Housing dataset

## California housing dataset. medv is the median value to predict

from sklearn import datasets

X = datasets.fetch_california_housing()['data']

y = datasets.fetch_california_housing()['target']

features = datasets.fetch_california_housing()['feature_names']

DESCR = datasets.fetch_california_housing()['DESCR']

cali_df = pd.DataFrame(X, columns = features)

cali_df.insert(0,'Value', y)

cali_df

| Value | MedInc | HouseAge | AveRooms | AveBedrms | Population | AveOccup | Latitude | Longitude | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.526 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |

| 1 | 3.585 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |

| 2 | 3.521 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |

| 3 | 3.413 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |

| 4 | 3.422 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 20635 | 0.781 | 1.5603 | 25.0 | 5.045455 | 1.133333 | 845.0 | 2.560606 | 39.48 | -121.09 |

| 20636 | 0.771 | 2.5568 | 18.0 | 6.114035 | 1.315789 | 356.0 | 3.122807 | 39.49 | -121.21 |

| 20637 | 0.923 | 1.7000 | 17.0 | 5.205543 | 1.120092 | 1007.0 | 2.325635 | 39.43 | -121.22 |

| 20638 | 0.847 | 1.8672 | 18.0 | 5.329513 | 1.171920 | 741.0 | 2.123209 | 39.43 | -121.32 |

| 20639 | 0.894 | 2.3886 | 16.0 | 5.254717 | 1.162264 | 1387.0 | 2.616981 | 39.37 | -121.24 |

20640 rows × 9 columns

cali_df.Value.describe()

count 20640.000000

mean 2.068558

std 1.153956

min 0.149990

25% 1.196000

50% 1.797000

75% 2.647250

max 5.000010

Name: Value, dtype: float64

cali_df = cali_df.query("Value<5")

X = cali_df.iloc[:, 1:]

y = cali_df.iloc[:, :1]

X = pd.DataFrame(preproc.StandardScaler().fit_transform(X))

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

X

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | |

|---|---|---|---|---|---|---|---|---|

| 0 | 2.959952 | 1.009853 | 0.707472 | -0.161044 | -0.978430 | -0.050851 | 1.036333 | -1.330014 |

| 1 | 2.944799 | -0.589669 | 0.382175 | -0.275899 | 0.838805 | -0.092746 | 1.027030 | -1.325029 |

| 2 | 2.280068 | 1.889591 | 1.276098 | -0.051258 | -0.826338 | -0.027663 | 1.022379 | -1.335000 |

| 3 | 1.252220 | 1.889591 | 0.198688 | -0.052114 | -0.772144 | -0.051567 | 1.022379 | -1.339986 |

| 4 | 0.108107 | 1.889591 | 0.401238 | -0.034372 | -0.766026 | -0.086014 | 1.022379 | -1.339986 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 19643 | -1.347359 | -0.269765 | -0.137906 | 0.081199 | -0.521280 | -0.050377 | 1.780515 | -0.761637 |

| 19644 | -0.712873 | -0.829598 | 0.328060 | 0.484752 | -0.948710 | 0.002467 | 1.785166 | -0.821466 |

| 19645 | -1.258410 | -0.909574 | -0.068098 | 0.051913 | -0.379677 | -0.072463 | 1.757259 | -0.826452 |

| 19646 | -1.151951 | -0.829598 | -0.014039 | 0.166543 | -0.612186 | -0.091490 | 1.757259 | -0.876310 |

| 19647 | -0.819968 | -0.989550 | -0.046655 | 0.145187 | -0.047523 | -0.045078 | 1.729352 | -0.836423 |

19648 rows × 8 columns

model = keras.Sequential()

model.add(Input(shape=(X_train.shape[1],))) ## INPUT layer 0

model.add(Dense(100, activation = 'relu')) ## Hidden layer 1

model.add(Dropout(0.2)) ## Hidden layer 2

model.add(Dense(200, activation = 'relu')) ## Hidden layer 3

model.add(Dense(1)) ## OUTPUT layer

X_train.shape[1]

8

model.summary()

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_5 (Dense) (None, 100) 900

dropout (Dropout) (None, 100) 0

dense_6 (Dense) (None, 200) 20200

dense_7 (Dense) (None, 1) 201

=================================================================

Total params: 21301 (83.21 KB)

Trainable params: 21301 (83.21 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

model.compile(loss= "mean_squared_error" ,

optimizer="adam",

metrics=["mean_squared_error"])

callback = tf.keras.callbacks.EarlyStopping(monitor='val_mean_squared_error', patience=4)

history = model.fit(X_train, y_train, epochs=100, batch_size=128, validation_split = 0.1, callbacks=[callback])

print('Done')

Epoch 1/100

111/111 [==============================] - 1s 3ms/step - loss: 0.8616 - mean_squared_error: 0.8616 - val_loss: 0.4140 - val_mean_squared_error: 0.4140

Epoch 2/100

111/111 [==============================] - 0s 2ms/step - loss: 0.4173 - mean_squared_error: 0.4173 - val_loss: 0.3466 - val_mean_squared_error: 0.3466

Epoch 3/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3650 - mean_squared_error: 0.3650 - val_loss: 0.3306 - val_mean_squared_error: 0.3306

Epoch 4/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3327 - mean_squared_error: 0.3327 - val_loss: 0.3113 - val_mean_squared_error: 0.3113

Epoch 5/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3244 - mean_squared_error: 0.3244 - val_loss: 0.3214 - val_mean_squared_error: 0.3214

Epoch 6/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3583 - mean_squared_error: 0.3583 - val_loss: 0.3136 - val_mean_squared_error: 0.3136

Epoch 7/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3115 - mean_squared_error: 0.3115 - val_loss: 0.3035 - val_mean_squared_error: 0.3035

Epoch 8/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3084 - mean_squared_error: 0.3084 - val_loss: 0.2995 - val_mean_squared_error: 0.2995

Epoch 9/100

111/111 [==============================] - 0s 2ms/step - loss: 0.3039 - mean_squared_error: 0.3039 - val_loss: 0.2998 - val_mean_squared_error: 0.2998

Epoch 10/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2893 - mean_squared_error: 0.2893 - val_loss: 0.2898 - val_mean_squared_error: 0.2898

Epoch 11/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2906 - mean_squared_error: 0.2906 - val_loss: 0.2893 - val_mean_squared_error: 0.2893

Epoch 12/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2838 - mean_squared_error: 0.2838 - val_loss: 0.2837 - val_mean_squared_error: 0.2837

Epoch 13/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2838 - mean_squared_error: 0.2838 - val_loss: 0.2882 - val_mean_squared_error: 0.2882

Epoch 14/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2748 - mean_squared_error: 0.2748 - val_loss: 0.2906 - val_mean_squared_error: 0.2906

Epoch 15/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2686 - mean_squared_error: 0.2686 - val_loss: 0.2779 - val_mean_squared_error: 0.2779

Epoch 16/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2899 - mean_squared_error: 0.2899 - val_loss: 0.2749 - val_mean_squared_error: 0.2749

Epoch 17/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2641 - mean_squared_error: 0.2641 - val_loss: 0.2742 - val_mean_squared_error: 0.2742

Epoch 18/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2642 - mean_squared_error: 0.2642 - val_loss: 0.2711 - val_mean_squared_error: 0.2711

Epoch 19/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2600 - mean_squared_error: 0.2600 - val_loss: 0.2696 - val_mean_squared_error: 0.2696

Epoch 20/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2546 - mean_squared_error: 0.2546 - val_loss: 0.2728 - val_mean_squared_error: 0.2728

Epoch 21/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2507 - mean_squared_error: 0.2507 - val_loss: 0.2722 - val_mean_squared_error: 0.2722

Epoch 22/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2475 - mean_squared_error: 0.2475 - val_loss: 0.2684 - val_mean_squared_error: 0.2684

Epoch 23/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2563 - mean_squared_error: 0.2563 - val_loss: 0.2663 - val_mean_squared_error: 0.2663

Epoch 24/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2449 - mean_squared_error: 0.2449 - val_loss: 0.2682 - val_mean_squared_error: 0.2682

Epoch 25/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2439 - mean_squared_error: 0.2439 - val_loss: 0.2696 - val_mean_squared_error: 0.2696

Epoch 26/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2468 - mean_squared_error: 0.2468 - val_loss: 0.2630 - val_mean_squared_error: 0.2630

Epoch 27/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2410 - mean_squared_error: 0.2410 - val_loss: 0.2633 - val_mean_squared_error: 0.2633

Epoch 28/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2407 - mean_squared_error: 0.2407 - val_loss: 0.2615 - val_mean_squared_error: 0.2615

Epoch 29/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2377 - mean_squared_error: 0.2377 - val_loss: 0.2610 - val_mean_squared_error: 0.2610

Epoch 30/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2391 - mean_squared_error: 0.2391 - val_loss: 0.2605 - val_mean_squared_error: 0.2605

Epoch 31/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2334 - mean_squared_error: 0.2334 - val_loss: 0.2612 - val_mean_squared_error: 0.2612

Epoch 32/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2345 - mean_squared_error: 0.2345 - val_loss: 0.2622 - val_mean_squared_error: 0.2622

Epoch 33/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2312 - mean_squared_error: 0.2312 - val_loss: 0.2577 - val_mean_squared_error: 0.2577

Epoch 34/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2319 - mean_squared_error: 0.2319 - val_loss: 0.2610 - val_mean_squared_error: 0.2610

Epoch 35/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2329 - mean_squared_error: 0.2329 - val_loss: 0.2548 - val_mean_squared_error: 0.2548

Epoch 36/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2339 - mean_squared_error: 0.2339 - val_loss: 0.2648 - val_mean_squared_error: 0.2648

Epoch 37/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2401 - mean_squared_error: 0.2401 - val_loss: 0.2620 - val_mean_squared_error: 0.2620

Epoch 38/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2264 - mean_squared_error: 0.2264 - val_loss: 0.2524 - val_mean_squared_error: 0.2524

Epoch 39/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2295 - mean_squared_error: 0.2295 - val_loss: 0.2530 - val_mean_squared_error: 0.2530

Epoch 40/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2325 - mean_squared_error: 0.2325 - val_loss: 0.2554 - val_mean_squared_error: 0.2554

Epoch 41/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2277 - mean_squared_error: 0.2277 - val_loss: 0.2542 - val_mean_squared_error: 0.2542

Epoch 42/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2319 - mean_squared_error: 0.2319 - val_loss: 0.2520 - val_mean_squared_error: 0.2520

Epoch 43/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2253 - mean_squared_error: 0.2253 - val_loss: 0.2557 - val_mean_squared_error: 0.2557

Epoch 44/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2277 - mean_squared_error: 0.2277 - val_loss: 0.2496 - val_mean_squared_error: 0.2496

Epoch 45/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2268 - mean_squared_error: 0.2268 - val_loss: 0.2554 - val_mean_squared_error: 0.2554

Epoch 46/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2245 - mean_squared_error: 0.2245 - val_loss: 0.2567 - val_mean_squared_error: 0.2567

Epoch 47/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2217 - mean_squared_error: 0.2217 - val_loss: 0.2502 - val_mean_squared_error: 0.2502

Epoch 48/100

111/111 [==============================] - 0s 2ms/step - loss: 0.2201 - mean_squared_error: 0.2201 - val_loss: 0.2516 - val_mean_squared_error: 0.2516

Done

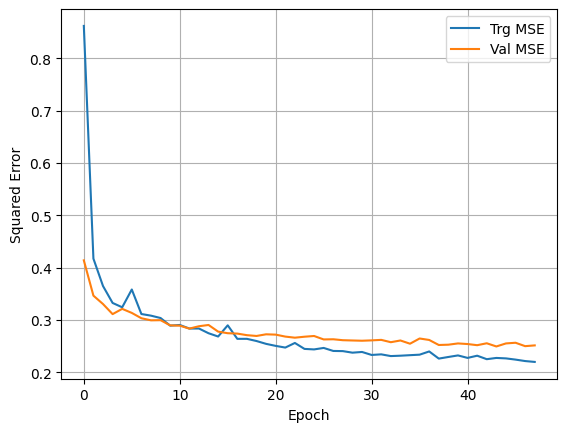

plt.plot(history.history['mean_squared_error'], label='Trg MSE')

plt.plot(history.history['val_mean_squared_error'], label='Val MSE')

plt.xlabel('Epoch')

plt.ylabel('Squared Error')

plt.legend()

plt.grid(True)

y_pred = model.predict(X_test)

123/123 [==============================] - 0s 791us/step

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 0.22933335851508532

RMSE = 0.47888762618706837

MAE = 0.3322660378818949

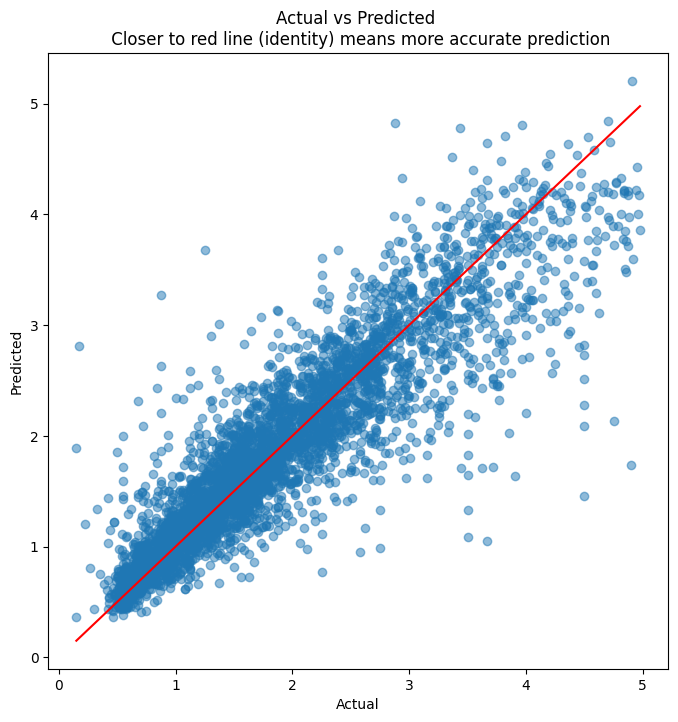

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted \n Closer to red line (identity) means more accurate prediction')

plt.plot( [y_test.min(),y_test.max()],[y_test.min(),y_test.max()], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

## R-squared calculation

pd.DataFrame({'actual':y_test.iloc[:,0].values, 'predicted':y_pred.ravel()}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.756613 |

| predicted | 0.756613 | 1.000000 |

California Housing - XGBoost

Just with a view to comparing the performance of our deep learning network above to XGBoost, we fit an XGBoost model.

## Fit model

from xgboost import XGBRegressor

model_xgb_regr = XGBRegressor()

model_xgb_regr.fit(X_train, y_train)

model_xgb_regr.predict(X_test)

array([0.8157365, 2.2790809, 1.1525728, ..., 2.6292877, 1.9455711,

1.3955337], dtype=float32)

## Evaluate model

y_pred = model_xgb_regr.predict(X_test)

from sklearn.metrics import mean_absolute_error, mean_squared_error

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 0.18441491976668073

RMSE = 0.4294355827905749

MAE = 0.2915835292178608

## Evaluate residuals

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted \n Closer to red line (identity) means more accurate prediction')

plt.plot( [y_test.min(),y_test.max()],[y_test.min(),y_test.max()], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted");

## R-squared calculation

pd.DataFrame({'actual':y_test.iloc[:,0].values, 'predicted':y_pred.ravel()}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.803717 |

| predicted | 0.803717 | 1.000000 |

Classification Example

df = pd.read_csv('diabetes.csv')

print(df.shape)

df.head()

(768, 9)

| Pregnancies | Glucose | BloodPressure | SkinThickness | Insulin | BMI | DiabetesPedigreeFunction | Age | Outcome | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 6 | 148 | 72 | 35 | 0 | 33.6 | 0.627 | 50 | 1 |

| 1 | 1 | 85 | 66 | 29 | 0 | 26.6 | 0.351 | 31 | 0 |

| 2 | 8 | 183 | 64 | 0 | 0 | 23.3 | 0.672 | 32 | 1 |

| 3 | 1 | 89 | 66 | 23 | 94 | 28.1 | 0.167 | 21 | 0 |

| 4 | 0 | 137 | 40 | 35 | 168 | 43.1 | 2.288 | 33 | 1 |

from sklearn.model_selection import train_test_split

X = df.iloc[:,:8]

X = pd.DataFrame(preproc.StandardScaler().fit_transform(X))

y = df.Outcome

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)

X_train.shape[1]

8

model = keras.Sequential()

model.add(Dense(100, input_dim=X_train.shape[1], activation='relu'))

## model.add(Dense(100, activation='relu'))

## model.add(Dense(200, activation='relu'))

## model.add(Dense(256, activation='relu'))

model.add(Dense(8, activation='relu'))

## model.add(Dense(200, activation='relu'))

model.add(Dense(1, activation='sigmoid'))

model.compile(loss= "binary_crossentropy" , optimizer="adam", metrics=["accuracy"])

callback = tf.keras.callbacks.EarlyStopping(monitor='val_accuracy', patience=4)

history = model.fit(X_train, y_train, epochs=100, batch_size=32, validation_split = 0.2, callbacks=[callback])

print('\nDone!!')

Epoch 1/100

15/15 [==============================] - 0s 8ms/step - loss: 0.6887 - accuracy: 0.5674 - val_loss: 0.6003 - val_accuracy: 0.7328

Epoch 2/100

15/15 [==============================] - 0s 3ms/step - loss: 0.5745 - accuracy: 0.7522 - val_loss: 0.5376 - val_accuracy: 0.7672

Epoch 3/100

15/15 [==============================] - 0s 3ms/step - loss: 0.5270 - accuracy: 0.7717 - val_loss: 0.4996 - val_accuracy: 0.7845

Epoch 4/100

15/15 [==============================] - 0s 3ms/step - loss: 0.4931 - accuracy: 0.7848 - val_loss: 0.4788 - val_accuracy: 0.7759

Epoch 5/100

15/15 [==============================] - 0s 3ms/step - loss: 0.4717 - accuracy: 0.7891 - val_loss: 0.4639 - val_accuracy: 0.7586

Epoch 6/100

15/15 [==============================] - 0s 3ms/step - loss: 0.4551 - accuracy: 0.7891 - val_loss: 0.4618 - val_accuracy: 0.7586

Epoch 7/100

15/15 [==============================] - 0s 3ms/step - loss: 0.4434 - accuracy: 0.8065 - val_loss: 0.4620 - val_accuracy: 0.7672

Done!!

model.evaluate(X_test, y_test)

6/6 [==============================] - 0s 1ms/step - loss: 0.4920 - accuracy: 0.7344

[0.4920426607131958, 0.734375]

## evaluate the keras model

ss, accuracy = model.evaluate(X_test, y_test)

print('Accuracy:', accuracy*100)

6/6 [==============================] - 0s 1ms/step - loss: 0.4920 - accuracy: 0.7344

Accuracy: 73.4375

## Training Accuracy

pred_prob = model.predict(X_train)

threshold = 0.50

## pred = list(map(round, pred_prob))

pred = (model.predict(X_train)>threshold) * 1

from sklearn.metrics import (confusion_matrix, accuracy_score)

## confusion matrix

cm = confusion_matrix(y_train, pred)

print ("Confusion Matrix : \n", cm)

## accuracy score of the model

print('Train accuracy = ', accuracy_score(y_train, pred))

18/18 [==============================] - 0s 885us/step

18/18 [==============================] - 0s 909us/step

Confusion Matrix :

[[346 31]

[ 83 116]]

Train accuracy = 0.8020833333333334

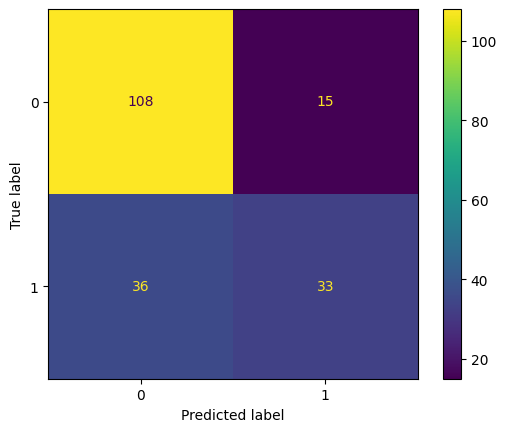

## Testing Accuracy

pred_prob = model.predict(X_test)

threshold = 0.50

## pred = list(map(round, pred_prob))

pred = (model.predict(X_test)>threshold) * 1

from sklearn.metrics import (confusion_matrix, accuracy_score)

## confusion matrix

cm = confusion_matrix(y_test, pred)

print ("Confusion Matrix : \n", cm)

## accuracy score of the model

print('Test accuracy = ', accuracy_score(y_test, pred))

6/6 [==============================] - 0s 1ms/step

6/6 [==============================] - 0s 1ms/step

Confusion Matrix :

[[108 15]

[ 36 33]]

Test accuracy = 0.734375

# Look at the first 10 probability scores

pred_prob[:10]

array([[0.721288 ],

[0.18479884],

[0.77215225],

[0.1881301 ],

[0.1023524 ],

[0.32380095],

[0.43650356],

[0.05792086],

[0.05308765],

[0.37288687]], dtype=float32)

pred = (model.predict(X_test)>threshold) * 1

6/6 [==============================] - 0s 1ms/step

pred.shape

(192, 1)

from sklearn.metrics import ConfusionMatrixDisplay

print(classification_report(y_true = y_test, y_pred = pred))

ConfusionMatrixDisplay(confusion_matrix=cm).plot()

## ConfusionMatrixDisplay.from_predictions(y_true = y_test, y_pred = pred, cmap='Greys')

precision recall f1-score support

0 0.75 0.88 0.81 123

1 0.69 0.48 0.56 69

accuracy 0.73 192

macro avg 0.72 0.68 0.69 192

weighted avg 0.73 0.73 0.72 192

<sklearn.metrics._plot.confusion_matrix.ConfusionMatrixDisplay at 0x188112d7d10>

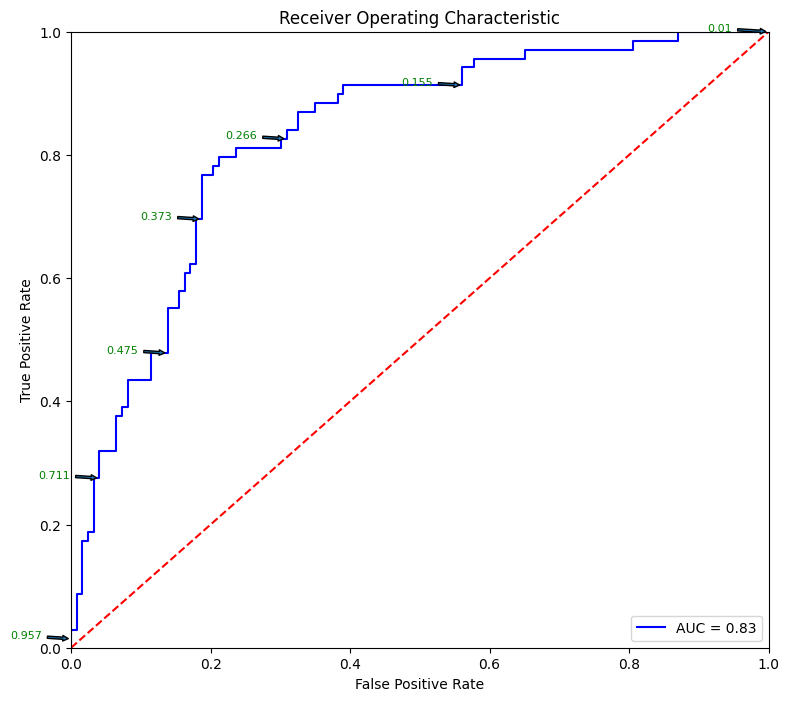

## AUC calculation

metrics.roc_auc_score(y_test, pred_prob)

0.829386119948156

# Source for code below: https://stackoverflow.com/questions/25009284/how-to-plot-roc-curve-in-python

fpr, tpr, thresholds = metrics.roc_curve(y_test, pred_prob)

roc_auc = metrics.auc(fpr, tpr)

plt.figure(figsize = (9,8))

plt.title('Receiver Operating Characteristic')

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.legend(loc = 'lower right')

plt.plot([0, 1], [0, 1],'r--')

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

for i, txt in enumerate(thresholds):

if i in np.arange(1, len(thresholds), 10): # print every 10th point to prevent overplotting:

plt.annotate(text = round(txt,3), xy = (fpr[i], tpr[i]),

xytext=(-44, 0), textcoords='offset points',

arrowprops={'arrowstyle':"simple"}, color='green',fontsize=8)

plt.show()

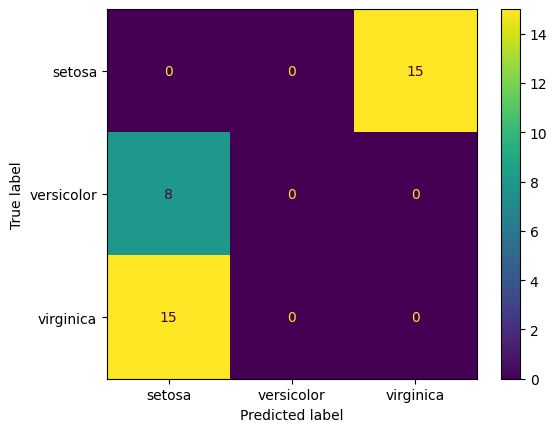

Multi-class Classification Example

df=sns.load_dataset('iris')

df

| sepal_length | sepal_width | petal_length | petal_width | species | |

|---|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 | setosa |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 3 | 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 4 | 5.0 | 3.6 | 1.4 | 0.2 | setosa |

| ... | ... | ... | ... | ... | ... |

| 145 | 6.7 | 3.0 | 5.2 | 2.3 | virginica |

| 146 | 6.3 | 2.5 | 5.0 | 1.9 | virginica |

| 147 | 6.5 | 3.0 | 5.2 | 2.0 | virginica |

| 148 | 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 149 | 5.9 | 3.0 | 5.1 | 1.8 | virginica |

150 rows × 5 columns

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

encoded_labels = le.fit_transform(df['species'].values.ravel()) ## This needs a 1D arrary

list(enumerate(le.classes_))

[(0, 'setosa'), (1, 'versicolor'), (2, 'virginica')]

from tensorflow.keras.utils import to_categorical

X = df.iloc[:,:4]

y = to_categorical(encoded_labels)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25)

model = keras.Sequential()

model.add(Dense(12, input_dim=X_train.shape[1], activation='relu'))

model.add(Dense(8, activation='relu'))

model.add(Dense(3, activation='softmax'))

## compile the keras model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

## fit the keras model on the dataset

callback = tf.keras.callbacks.EarlyStopping(monitor='accuracy', patience=4)

model.fit(X_train, y_train, epochs=150, batch_size=10, callbacks = [callback])

print('\nDone')

Epoch 1/150

12/12 [==============================] - 0s 1ms/step - loss: 2.8803 - accuracy: 0.0268

Epoch 2/150

12/12 [==============================] - 0s 1ms/step - loss: 2.3614 - accuracy: 0.0000e+00

Epoch 3/150

12/12 [==============================] - 0s 1ms/step - loss: 1.9783 - accuracy: 0.0000e+00

Epoch 4/150

12/12 [==============================] - 0s 1ms/step - loss: 1.6714 - accuracy: 0.0000e+00

Epoch 5/150

12/12 [==============================] - 0s 1ms/step - loss: 1.4442 - accuracy: 0.0000e+00

Done

model.evaluate(X_test, y_test)

2/2 [==============================] - 0s 2ms/step - loss: 1.3997 - accuracy: 0.0000e+00

[1.3997128009796143, 0.0]

y_test

array([[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[0., 0., 1.],

[0., 1., 0.],

[0., 1., 0.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 1., 0.],

[1., 0., 0.],

[1., 0., 0.],

[0., 0., 1.],

[1., 0., 0.],

[0., 1., 0.],

[1., 0., 0.],

[0., 1., 0.],

[1., 0., 0.],

[0., 0., 1.],

[1., 0., 0.],

[0., 1., 0.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[1., 0., 0.],

[0., 1., 0.],

[0., 0., 1.],

[1., 0., 0.],

[1., 0., 0.],

[0., 0., 1.],

[0., 1., 0.],

[1., 0., 0.],

[1., 0., 0.]], dtype=float32)

pred = model.predict(X_test)

pred

2/2 [==============================] - 0s 2ms/step

array([[0.2822613 , 0.05553382, 0.66220486],

[0.2793297 , 0.06595141, 0.6547189 ],

[0.29135096, 0.07464809, 0.63400096],

[0.28353864, 0.07236306, 0.6440983 ],

[0.43382686, 0.34968728, 0.21648583],

[0.45698914, 0.31611806, 0.22689272],

[0.41666666, 0.32700518, 0.2563282 ],

[0.44071954, 0.37179634, 0.18748417],

[0.40844283, 0.37726644, 0.21429074],

[0.4495495 , 0.35323077, 0.19721965],

[0.49933362, 0.31062174, 0.1900446 ],

[0.24345762, 0.03898294, 0.7175594 ],

[0.28153655, 0.06344951, 0.655014 ],

[0.45637476, 0.3687389 , 0.17488642],

[0.3104543 , 0.07258127, 0.61696446],

[0.4537215 , 0.31842723, 0.22785126],

[0.271435 , 0.05542643, 0.67313856],

[0.4581745 , 0.3283537 , 0.21347174],

[0.25978264, 0.05289331, 0.68732405],

[0.4725002 , 0.35439146, 0.17310826],

[0.33035162, 0.07470612, 0.59494233],

[0.43799627, 0.34311345, 0.21889023],

[0.47776258, 0.35668057, 0.1655568 ],

[0.45363948, 0.3735682 , 0.17279233],

[0.43064523, 0.36924413, 0.20011069],

[0.42152312, 0.38463953, 0.19383734],

[0.42944792, 0.3875062 , 0.18304592],

[0.44620407, 0.37442878, 0.17936715],

[0.4499338 , 0.34822026, 0.201846 ],

[0.26532286, 0.05515821, 0.67951894],

[0.44138023, 0.32050413, 0.23811558],

[0.4528861 , 0.34326744, 0.2038465 ],

[0.2731558 , 0.06118189, 0.6656623 ],

[0.19635768, 0.02701073, 0.77663165],

[0.43125 , 0.35458365, 0.21416634],

[0.4739512 , 0.31133884, 0.21471 ],

[0.33960223, 0.11830997, 0.5420878 ],

[0.26582348, 0.05316177, 0.6810147 ]], dtype=float32)

pred.shape

(38, 3)

np.array(le.classes_)

array(['setosa', 'versicolor', 'virginica'], dtype=object)

print(classification_report(y_true = [le.classes_[np.argmax(x)] for x in y_test], y_pred = [le.classes_[np.argmax(x)] for x in pred]))

precision recall f1-score support

setosa 0.00 0.00 0.00 15.0

versicolor 0.00 0.00 0.00 8.0

virginica 0.00 0.00 0.00 15.0

accuracy 0.00 38.0

macro avg 0.00 0.00 0.00 38.0

weighted avg 0.00 0.00 0.00 38.0

C:\Users\user\AppData\Local\Programs\Python\Python311\Lib\site-packages\sklearn\metrics\_classification.py:1469: UndefinedMetricWarning: Precision and F-score are ill-defined and being set to 0.0 in labels with no predicted samples. Use `zero_division` parameter to control this behavior.

_warn_prf(average, modifier, msg_start, len(result))

C:\Users\user\AppData\Local\Programs\Python\Python311\Lib\site-packages\sklearn\metrics\_classification.py:1469: UndefinedMetricWarning: Precision and F-score are ill-defined and being set to 0.0 in labels with no predicted samples. Use `zero_division` parameter to control this behavior.

_warn_prf(average, modifier, msg_start, len(result))

C:\Users\user\AppData\Local\Programs\Python\Python311\Lib\site-packages\sklearn\metrics\_classification.py:1469: UndefinedMetricWarning: Precision and F-score are ill-defined and being set to 0.0 in labels with no predicted samples. Use `zero_division` parameter to control this behavior.

_warn_prf(average, modifier, msg_start, len(result))

ConfusionMatrixDisplay.from_predictions([np.argmax(x) for x in y_test], [np.argmax(x) for x in pred], display_labels=le.classes_)

<sklearn.metrics._plot.confusion_matrix.ConfusionMatrixDisplay at 0x18812252a10>

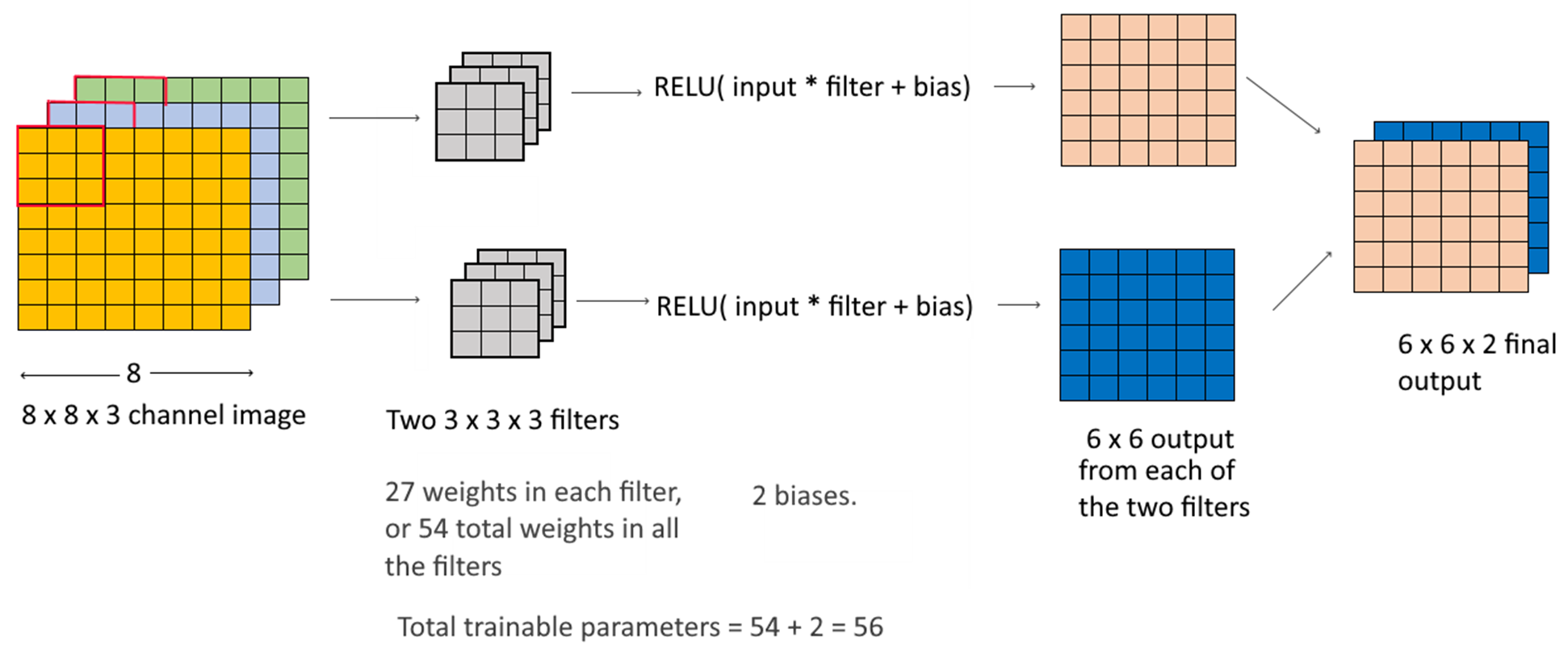

Image Recognition with CNNs

CNNs are used for image related predictions and analytics. Uses include image classification, image detection (identify multiple objects in an image), classification with localization (draw a bounding box around an object of interest).

CNNs also use weights and biases, but the approach and calculations are different from those done in a dense layer. A convolutional layer applies to images, which are 3-dimensional arrays – height, width and channel. Color images have 3 channels (one for each color RGB), while greyscale images have only 1 channel.

Consider a 3 x 3 filter applied to a 3-channel 8 x 8 image:

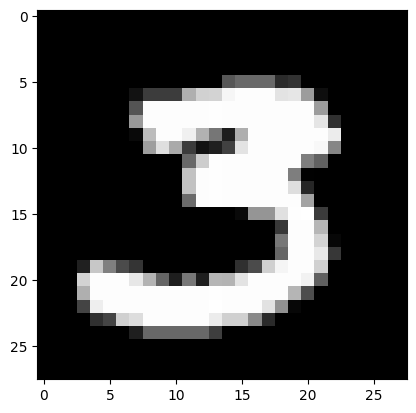

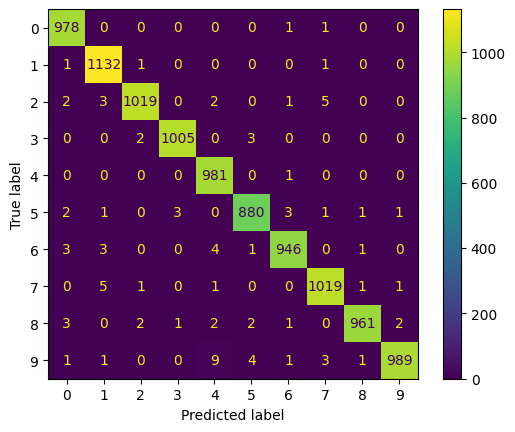

We classify the MNIST dataset, which is built-in into keras. This is a set of 60,000 training images, plus 10,000 test images, assembled by the National Institute of Standards and Technology (the NIST in MNIST) in the 1980s.

Modeling the MNIST image dataset is akin to the ‘Hello World’ of image based deep learning. Every image is a 28 x 28 array, with numbers between 1 and 255 ()

Example images:

Next, we will try to build a network to identify the digits in the MNIST dataset

from tensorflow.keras.datasets import mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

train_images.shape

(60000, 28, 28)

image_number = 1847 -4

plt.imshow(train_images[image_number], cmap='gray')

print('Labeled as:', train_labels[image_number])

Labeled as: 3

train_labels[image_number]

3

## We reshape the image arrays in a form that can be fed to the CNN

train_images = train_images.reshape((60000, 28, 28, 1))

train_images = train_images.astype("float32") / 255

test_images = test_images.reshape((10000, 28, 28, 1))

test_images = test_images.astype("float32") / 255

from tensorflow import keras

from tensorflow.keras.layers import Flatten, MaxPooling2D, Conv2D, Input

model = keras.Sequential()

model.add(Input(shape=(28, 28, 1)))

model.add(Conv2D(filters=32, kernel_size=3, activation="relu"))

model.add(MaxPooling2D(pool_size=2))

model.add(Conv2D(filters=64, kernel_size=3, activation="relu"))

model.add(MaxPooling2D(pool_size=2))

model.add(Conv2D(filters=128, kernel_size=3, activation="relu"))

model.add(Flatten())

model.add(Dense(10, activation='softmax'))

## compile the keras model

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

model.summary()

Model: "sequential_6"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_6 (Conv2D) (None, 26, 26, 32) 320

max_pooling2d_4 (MaxPoolin (None, 13, 13, 32) 0

g2D)

conv2d_7 (Conv2D) (None, 11, 11, 64) 18496

max_pooling2d_5 (MaxPoolin (None, 5, 5, 64) 0

g2D)

conv2d_8 (Conv2D) (None, 3, 3, 128) 73856

flatten_2 (Flatten) (None, 1152) 0

dense_16 (Dense) (None, 10) 11530

=================================================================

Total params: 104202 (407.04 KB)

Trainable params: 104202 (407.04 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

model.compile(optimizer="rmsprop", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

model.fit(train_images, train_labels, epochs=5, batch_size=64)

Epoch 1/5

938/938 [==============================] - 21s 22ms/step - loss: 0.1591 - accuracy: 0.9510

Epoch 2/5

938/938 [==============================] - 20s 22ms/step - loss: 0.0434 - accuracy: 0.9865

Epoch 3/5

938/938 [==============================] - 21s 23ms/step - loss: 0.0300 - accuracy: 0.9913

Epoch 4/5

938/938 [==============================] - 22s 23ms/step - loss: 0.0221 - accuracy: 0.9933

Epoch 5/5

938/938 [==============================] - 22s 23ms/step - loss: 0.0175 - accuracy: 0.9947

<keras.src.callbacks.History at 0x1881333c350>

test_loss, test_acc = model.evaluate(test_images, test_labels)

print("Test accuracy:", test_acc)

313/313 [==============================] - 2s 6ms/step - loss: 0.0268 - accuracy: 0.9910

Test accuracy: 0.9909999966621399

pred = model.predict(test_images)

pred

313/313 [==============================] - 2s 6ms/step

array([[2.26517693e-09, 5.06499775e-09, 5.41575895e-09, ...,

9.99999881e-01, 2.45444859e-10, 4.99686914e-09],

[1.38298981e-06, 1.13962898e-07, 9.99997616e-01, ...,

1.18933270e-12, 5.49632805e-11, 4.08483706e-14],

[8.74673223e-09, 9.99998808e-01, 1.18670798e-08, ...,

2.46586637e-07, 4.74215556e-09, 2.20593410e-09],

...,

[1.74903860e-16, 2.70464449e-11, 2.62210590e-15, ...,

1.32708133e-11, 8.26101167e-12, 8.61862394e-14],

[8.24222894e-08, 8.11386403e-10, 1.62018628e-11, ...,

1.12429285e-11, 1.36881863e-05, 2.13236234e-10],

[1.15086030e-08, 4.16140927e-10, 2.80235701e-09, ...,

1.33498078e-15, 8.03823452e-10, 2.84819829e-13]], dtype=float32)

pred.shape

(10000, 10)

test_labels

array([7, 2, 1, ..., 4, 5, 6], dtype=uint8)

print(classification_report(y_true = [np.argmax(x) for x in pred], y_pred = test_labels))

precision recall f1-score support

0 1.00 0.99 0.99 990

1 1.00 0.99 0.99 1145

2 0.99 0.99 0.99 1025

3 1.00 1.00 1.00 1009

4 1.00 0.98 0.99 999

5 0.99 0.99 0.99 890

6 0.99 0.99 0.99 954

7 0.99 0.99 0.99 1030

8 0.99 1.00 0.99 965

9 0.98 1.00 0.99 993

accuracy 0.99 10000

macro avg 0.99 0.99 0.99 10000

weighted avg 0.99 0.99 0.99 10000

ConfusionMatrixDisplay.from_predictions(test_labels, [np.argmax(x) for x in pred])

<sklearn.metrics._plot.confusion_matrix.ConfusionMatrixDisplay at 0x18811de5350>

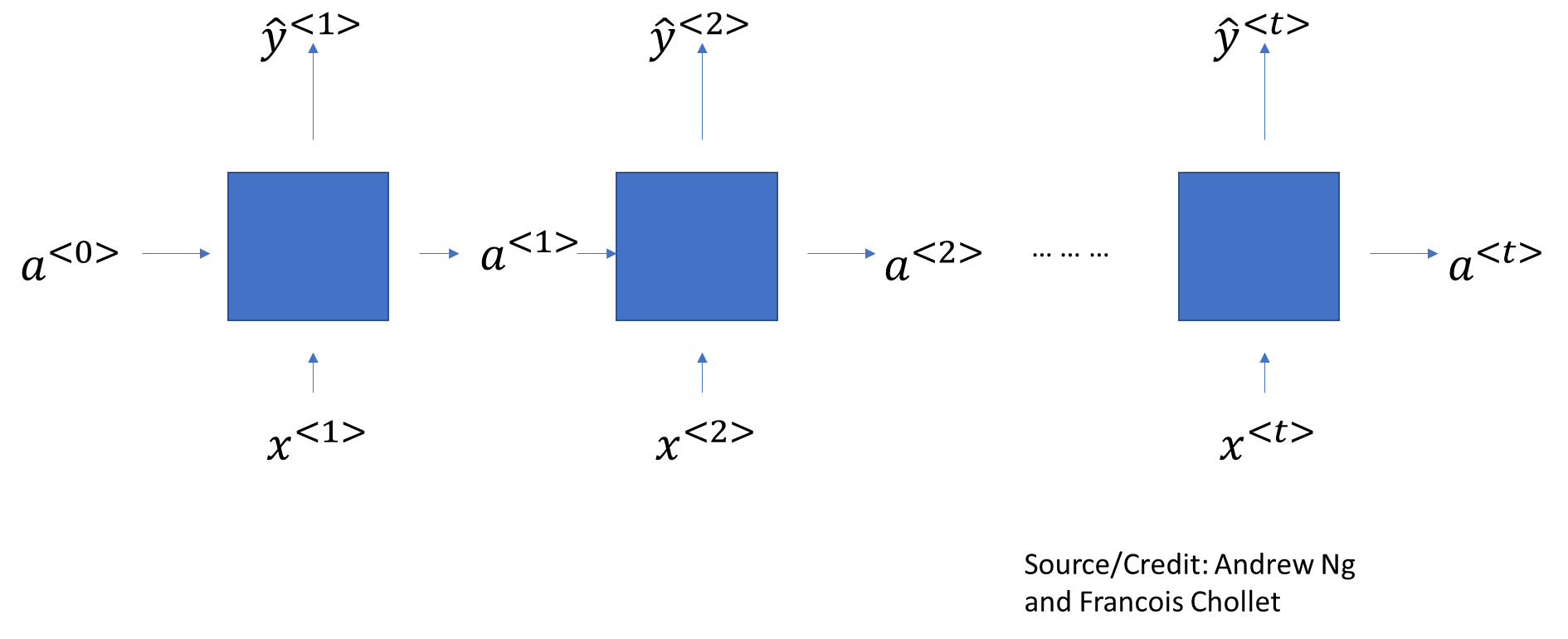

Recurrent Neural Networks

One issue with Dense layers is they have no ‘memory’. Every input is different, and processed separately, with no knowledge of what was processed before.

In such networks, sequenced data is generally arranged back-to-back as a single vector, and fed into the network. Such networks are called feedforward networks.

While this works for structured/tabular data, it does not work too well for sequenced, or temporal data (eg, a time series, or a sentence, where words follow each other in a sequence).

Recurrent Neural Networks try to solve for this problem by maintaining a memory, or state, of what it has seen so far. The memory carries from cell to cell, gradually diminishing over time.

A SimpleRNN cell processes batches of sequences. It takes an input of shape (batch_size, timesteps, input_features).

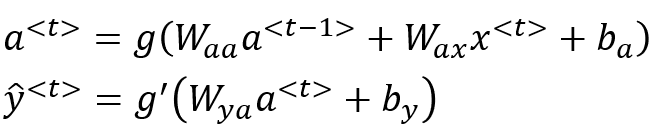

How the network calculates is:

So for each element of the sequence, it calculates an , and then it also calculates the output as a function of both and . State information from previous steps is carried forward in the form of a.

However SimpleRNNs suffer from the problem of exploding or vanishing gradients, and they don’t carry forward information into subsequent cells as well as they should.

In practice, we use LSTM and GRU layers, which are also recurrent layers.

The GRU Layer

GRU = Gated Recurrent Unit

The purpose of GRU is to retain memory of older layers, and persist old data in subsequent layers. In GRU, an additional ‘memory cell’ is also output that is carried forward.

The way it works is: find a ‘candidate value’ for called . Then find a ‘gate’, which is a 0 or 1 value, to decide whether to carry forward the value from the prior layer, or update it.

where

- is the UPDATE GATE,

- is the RESET GATE,

- are the various weight vectors, are the biases

- are the inputs, are the activations

- is the activation function

Source/Credit: Andrew Ng

The LSTM Layer

LSTM = Long Short Term Memory

LSTM is a generalization of GRU. The way it differs from a GRU is that in GRUs, and are the same, but in an LSTM they are different.

where

- is the UPDATE GATE,

- is the FORGET GATE,

- is the OUTPUT GATE

- are the various weight vectors, are the biases

- are the inputs, are the activations

- is the activation function

Finally...

Deep Learning is a rapidly evolving field, and most state-of-the-art modeling tends to be fairly complex than the simple models explained in this brief class.

Network architectures are difficult to optimize, there is no easy answer to the question of the number and types of layers, their size and order in which they are arranged.

Data scientists spend a lot of time optimizing architecture and hyperparameters.

Network architectures can be made arbitrarily complex. While we only looked at ‘sequential’ models, models that accept multiple inputs, split processing in the network, and produce multiple outcomes are common.

END

STOP HERE

Example of the same model built using the Keras Functional API

from tensorflow import keras

from tensorflow.keras import layers

inputs = keras.Input(shape=(28, 28, 1))

x = layers.Conv2D(filters=32, kernel_size=3, activation="relu")(inputs)

x = layers.MaxPooling2D(pool_size=2)(x)

x = layers.Conv2D(filters=64, kernel_size=3, activation="relu")(x)

x = layers.MaxPooling2D(pool_size=2)(x)

x = layers.Conv2D(filters=128, kernel_size=3, activation="relu")(x)

x = layers.Flatten()(x)

outputs = layers.Dense(10, activation="softmax")(x)

model = keras.Model(inputs=inputs, outputs=outputs)