Machine Learning and Modeling

What we will cover

In the previous chapters, we looked at the larger field of artificial intelligence which relates to automating intellectual tasks performed by humans. At one time, it was thought that all human decision making could be coded as a set of rules, which, if followed, would mimic intelligence. The idea was that while these rules could be extremely complex in terms of their length and count, and in the way these were nested with each other, but in the end a set of properly structured if-else rules held the key to creating an artificial mind.

Of course, we know now that is not accurate. Rule based systems cannot generalize from patterns like the human mind does, and tend to be brittle to the point that they can be practically unusable.

Machine learning algorithms attempt to identify patterns in the data with which they create a solution to solve problems that haven't been seen before. That is the topic for the discussion in this chapter.

Deep learning is a special case (or a subset) of machine learning where layers of data abstractions (called neural networks) are used. However, you may hear of a distinction being sometimes made between machine learning, also sometimes called 'shallow learning', from deep learning that we will cover in the next chapter. Machine learning is sometimes called 'shallow learning' because it is based on a single layer of data transformations. It is called so to distinguish it from 'deep learning' that relies upon multiple layers of data transformations, with each layer extracting a different elements of useful information from the input.

This is not to suggest that machine learning is less useful or less powerful than deep learning - on the contrary simpler algorithms regularly beat deep learning algorithms for certain kinds of tasks. The type of learning to use is driven by the use case, performance obtained, and the desired explainability.

Next, we will cover the key machine learning algorithms that are used for classification, regression and clustering. We will cover deep learning in the next chapter.

Agenda:

- Decision Trees

- Random Forest

- XGBoost

- Linear Discriminant Analysis

- Support Vector Machines

- Naïve Bayes

- K-Nearest Neighbors

- K-Means Clustering

- Hierarchical Clustering

All our work will follow the ML workflow discussed earlier, and repeated below:

- Prepare your data – cleanse, convert to numbers, etc

- Split the data into training and test sets

- Training sets are what algorithms learn from

- Test sets are the ‘hold-out’ data on which model effectiveness is measured

- No set rules, often a 80:20 split between train and test data suffices. If there is a lot of training data, you may keep a smaller number as the test set.

- Fit a model.

- Check model accuracy based on the test set.

- Use for predictions.

What you need to think about

As we cover the algorithms, think about the below ideas for each.

- What is the conceptual basis for the algorithm?

- This will help you think about the problems the algorithm can be applied to,

- You should also think about the parameters you can control in the model,

- You should think about model explainability, how essential is it to your use case, and who your audience is.

- Do you need to scale/standardize the data?

- Or can you use the raw data as is?

- Whether it can perform regression, classification or clustering

- Regression models help forecast numeric quantities, while classification algorithms help determine class membership.

- Some algorithms can only perform either regression or classification, while others can do both.

- If it is a classification algorithm, does it provide just the class membership, or probability estimates

- If reliable probability estimates are available from the model, you can perform more advanced model evaluations, and tweak the probability cut-off to obtain your desired True Positive/False Positive rates.

Some library imports first...

import numpy as np

import pandas as pd

import statsmodels.api as sm

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

import seaborn as sns

from sklearn import tree

from sklearn.metrics import confusion_matrix, accuracy_score, classification_report

from sklearn.metrics import mean_absolute_error, mean_squared_error, ConfusionMatrixDisplay

from sklearn import metrics

# from sklearn.metrics import mean_absolute_percentage_error

from sklearn.preprocessing import StandardScaler, LabelEncoder

from sklearn.model_selection import train_test_split

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.ensemble import RandomForestClassifier

from sklearn import svm

import sklearn.preprocessing as preproc

Decision Trees

Decision Trees (DTs) are a non-parametric supervised learning method used for classification and regression. The goal is to create a model that predicts the value of a target variable by learning simple decision rules inferred from the data features. In simple words, decision trees are a collection of if/else conditions that are applied to data till a prediction is reached. Trees are constructed by splitting data by a variable, and then doing the same again and again till the desired level of accuracy is reached (or we run out of data). Trees can be visualized, and are therefore easier to interpret – and in that sense they are a ‘white box model’.

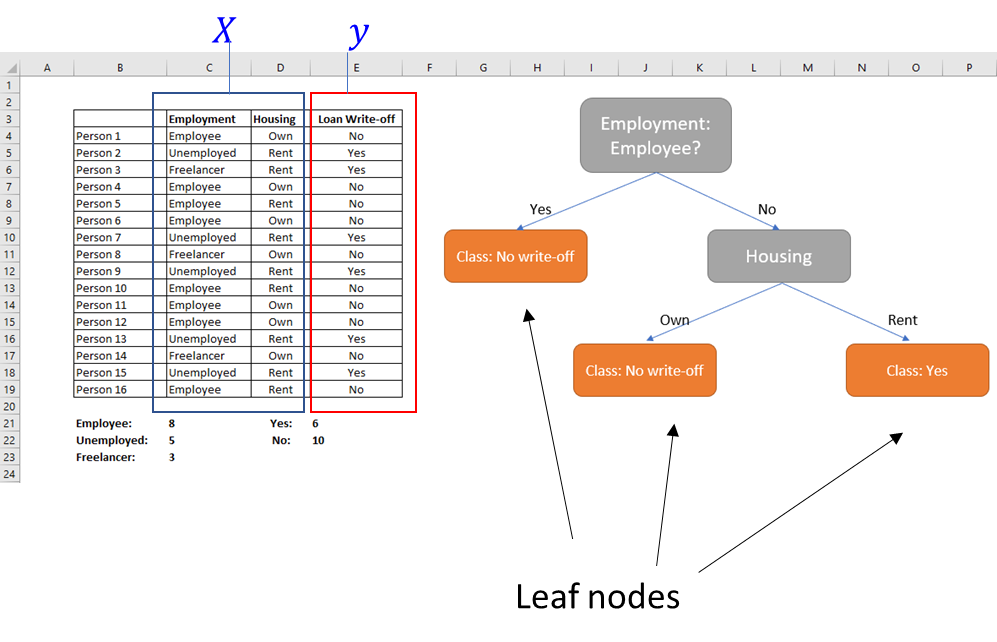

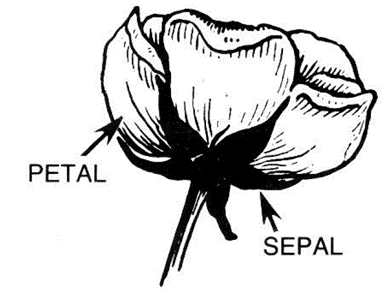

Trivial Example

Consider a made-up dataset where we know the employment and housing status of our customers, and whether they have paid back or defaulted on their loans. When a new customer requests a loan, can we use this data to decide the whether there is likely to be a default?

We would like the ‘leaf nodes’ to be pure, ie contain instances that tend to belong to the same class.

Several practical issues arise in the above example:

- Attributes rarely neatly split a group. In the made up example, everything lined up neatly but rarely will in reality.

- How does one select what order to select attributes in? We could have started with housing instead of looking at whether a person was an employee or not.

- Many attributes will not be binary, may have multiple unique values.

- Some attributes may be numeric. For example, we may know their credit scores. In such a case, how do we split the nodes?

- Finally, how do we decide we are done? Should we keep going till we run out of variables, or till all leaf nodes are pure?

Measuring Purity - Entropy and Gini Impurity

Entropy

The most common splitting criterion is called information gain, and is based on a measure called entropy.

Entropy is a measure of disorder that can be applied to a collection.

Disorder corresponds to how mixed (impure) the group is with respect to the properties of interest.

A node is pure when entropy = 0. So we are looking for ways to minimize entropy.

Gini Impurity

Another measure of impurity is the Gini Impurity.

Like entropy, the Gini Impurity has a minimum of 0. In a two class problem, the maximum value for the Gini Impurity will be 0.5. Both Entropy and the Gini Impurity behave similarly, the Gini Impurity is supposedly less computationally intensive.

With entropy as the measure of disorder, we calculate Information Gain offered by each attribute when used as the basis of segmentation.

Information gain is the reduction in entropy by splitting our data on the basis of a single attribute.

For our toy example, the entropy for the top parent node was 0.95. This was reduced to 0.41 at the next child node, calculated as . We start our segmentation with the attribute that provides the most information gain.

Fortunately, automated algorithms do this for us, so we do not have to calculate any of this. But the concept of information gain and how regression tree algorithms decide to split the data is important to be aware of.

Toy Example Continued

Let us continue the example introduced earlier.

# Entropy before the first split

entropy1 = -((6/16) * np.log2(6/16))-((10/16) * np.log2(10/16))

entropy1

0.954434002924965

# Entroy after the split

entropy2 = \

(8/16) * (-(8/8) * np.log2(8/8)) \

+ \

((8/16) * (- (2/8) * np.log2(2/8) - (6/8) * np.log2(6/8) ))

entropy2

0.4056390622295664

#Information Gain

entropy1 - entropy2

0.5487949406953987

Another simple example

We look at another example where we try to build a decision tree to predict whether a debt was written-off for a customer given other attributes.

df = pd.read_excel('write-off.xlsx')

df

| Name | Balance | Age | Employed | Write-off | |

|---|---|---|---|---|---|

| 0 | Mike | 200000 | 42 | no | yes |

| 1 | Mary | 35000 | 33 | yes | no |

| 2 | Claudio | 115000 | 40 | no | no |

| 3 | Robert | 29000 | 23 | yes | yes |

| 4 | Dora | 72000 | 31 | no | no |

Balance, Age and Employed are independent variables, and Write-off is the predicted variable. Of these, the Write-off and Employed columns are strings and have to be converted to numerical variables so they can be used in algorithms.

df['Write-off'] = df['Write-off'].astype('category') #convert to category

df['write-off-label'] = df['Write-off'].cat.codes #use category codes as labels

df = pd.get_dummies(df, columns=["Employed"]) #one hot encoding using pandas

df

| Name | Balance | Age | Write-off | write-off-label | Employed_no | Employed_yes | |

|---|---|---|---|---|---|---|---|

| 0 | Mike | 200000 | 42 | yes | 1 | True | False |

| 1 | Mary | 35000 | 33 | no | 0 | False | True |

| 2 | Claudio | 115000 | 40 | no | 0 | True | False |

| 3 | Robert | 29000 | 23 | yes | 1 | False | True |

| 4 | Dora | 72000 | 31 | no | 0 | True | False |

type(df['Write-off'])

pandas.core.series.Series

df = df.iloc[:,[0,3,4,1,2,5,6]]

df

| Name | Write-off | write-off-label | Balance | Age | Employed_no | Employed_yes | |

|---|---|---|---|---|---|---|---|

| 0 | Mike | yes | 1 | 200000 | 42 | True | False |

| 1 | Mary | no | 0 | 35000 | 33 | False | True |

| 2 | Claudio | no | 0 | 115000 | 40 | True | False |

| 3 | Robert | yes | 1 | 29000 | 23 | False | True |

| 4 | Dora | no | 0 | 72000 | 31 | True | False |

df.iloc[:, 2:]

| write-off-label | Balance | Age | Employed_no | Employed_yes | |

|---|---|---|---|---|---|

| 0 | 1 | 200000 | 42 | True | False |

| 1 | 0 | 35000 | 33 | False | True |

| 2 | 0 | 115000 | 40 | True | False |

| 3 | 1 | 29000 | 23 | False | True |

| 4 | 0 | 72000 | 31 | True | False |

# This below command is required only to get back to the home folder if you aren't there already

# import os

# os.chdir('/home/jovyan')

X = df.iloc[:,3:]

y = df.iloc[:,2]

clf = tree.DecisionTreeClassifier()

clf = clf.fit(X, y)

import graphviz

dot_data = tree.export_graphviz(clf, out_file=None)

graph = graphviz.Source(dot_data)

# graph.render("df")

dot_data = tree.export_graphviz(clf, out_file=None,

feature_names=X.columns,

class_names=['yes', 'no'], # Plain English names for classes_

filled=True, rounded=True,

special_characters=True)

graph = graphviz.Source(dot_data)

graph

clf.classes_

array([0, 1], dtype=int8)

y

0 1

1 0

2 0

3 1

4 0

Name: write-off-label, dtype: int8

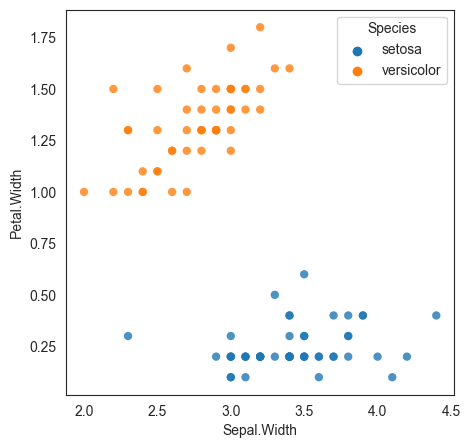

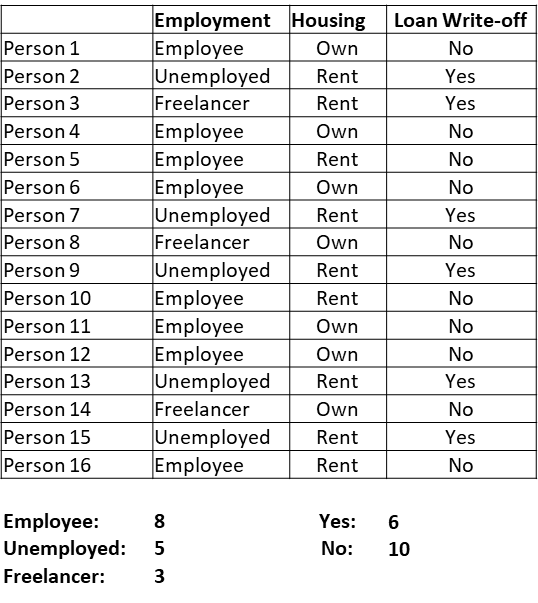

Iris Flower Dataset

We consider the Iris dataset, a multivariate data set introduced by the British statistician and biologist Ronald Fisher in a 1936 paper. The data set consists of 50 samples from each of three species of Iris (Iris setosa, Iris virginica and Iris versicolor).

Four features were measured from each sample: the length and the width of the sepals and petals, in centimeters.

Source: Wikipedia

Image Source/Attribution: https://commons.wikimedia.org/w/index.php?curid=248095

Difference between a petal and a sepal:

Scikit Learn’s decision tree classifier algorithm, combined with another package called graphviz, can provide decision trees together with good graphing capabilities.

Unfortunately, sklearn requires all data to be numeric and as numpy arrays. This creates practical problems for the data analyst – categorical variables have to be labeled or one-hot encoded, and their plain English meanings have to be tracked separately.

# Load the data

iris = sm.datasets.get_rdataset('iris').data

iris.sample(6)

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species | |

|---|---|---|---|---|---|

| 77 | 6.7 | 3.0 | 5.0 | 1.7 | versicolor |

| 103 | 6.3 | 2.9 | 5.6 | 1.8 | virginica |

| 25 | 5.0 | 3.0 | 1.6 | 0.2 | setosa |

| 126 | 6.2 | 2.8 | 4.8 | 1.8 | virginica |

| 55 | 5.7 | 2.8 | 4.5 | 1.3 | versicolor |

| 131 | 7.9 | 3.8 | 6.4 | 2.0 | virginica |

Our task is: Based on these features, can we create a decision tree to distinguish between the three species of the Iris flower?

# Let us look at some basic descriptive stats for each of the flower species.

iris.pivot_table(columns = ['Species'], aggfunc = [np.mean, min, max]).transpose()

| Petal.Length | Petal.Width | Sepal.Length | Sepal.Width | ||

|---|---|---|---|---|---|

| Species | |||||

| mean | setosa | 1.462 | 0.246 | 5.006 | 3.428 |

| versicolor | 4.260 | 1.326 | 5.936 | 2.770 | |

| virginica | 5.552 | 2.026 | 6.588 | 2.974 | |

| min | setosa | 1.000 | 0.100 | 4.300 | 2.300 |

| versicolor | 3.000 | 1.000 | 4.900 | 2.000 | |

| virginica | 4.500 | 1.400 | 4.900 | 2.200 | |

| max | setosa | 1.900 | 0.600 | 5.800 | 4.400 |

| versicolor | 5.100 | 1.800 | 7.000 | 3.400 | |

| virginica | 6.900 | 2.500 | 7.900 | 3.800 |

# Next, we build the decision tree

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

from sklearn import tree

iris = load_iris()

X, y = iris.data, iris.target

# Train-test split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

# Create the classifier and visualize the decision tree

clf = tree.DecisionTreeClassifier()

clf = clf.fit(X_train, y_train)

import graphviz

dot_data = tree.export_graphviz(clf, out_file=None)

graph = graphviz.Source(dot_data)

graph.render("iris")

dot_data = tree.export_graphviz(clf, out_file=None,

feature_names=iris.feature_names,

class_names=iris.target_names,

filled=True, rounded=True,

special_characters=True)

graph = graphviz.Source(dot_data)

graph

# Another way to build the tree is something as simple as

# typing `tree.plot_tree(clf)` but the above code gives

# us much better results.

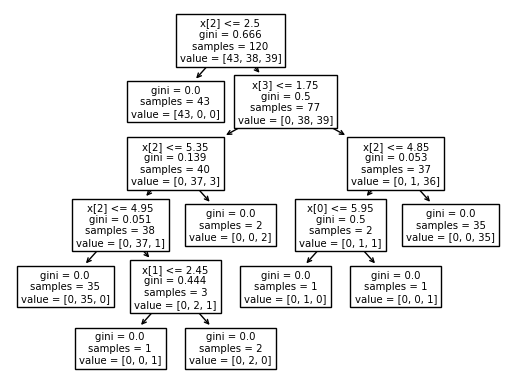

print(tree.plot_tree(clf))

[Text(0.4444444444444444, 0.9166666666666666, 'x[2] <= 2.5\ngini = 0.666\nsamples = 120\nvalue = [43, 38, 39]'), Text(0.3333333333333333, 0.75, 'gini = 0.0\nsamples = 43\nvalue = [43, 0, 0]'), Text(0.5555555555555556, 0.75, 'x[3] <= 1.75\ngini = 0.5\nsamples = 77\nvalue = [0, 38, 39]'), Text(0.3333333333333333, 0.5833333333333334, 'x[2] <= 5.35\ngini = 0.139\nsamples = 40\nvalue = [0, 37, 3]'), Text(0.2222222222222222, 0.4166666666666667, 'x[2] <= 4.95\ngini = 0.051\nsamples = 38\nvalue = [0, 37, 1]'), Text(0.1111111111111111, 0.25, 'gini = 0.0\nsamples = 35\nvalue = [0, 35, 0]'), Text(0.3333333333333333, 0.25, 'x[1] <= 2.45\ngini = 0.444\nsamples = 3\nvalue = [0, 2, 1]'), Text(0.2222222222222222, 0.08333333333333333, 'gini = 0.0\nsamples = 1\nvalue = [0, 0, 1]'), Text(0.4444444444444444, 0.08333333333333333, 'gini = 0.0\nsamples = 2\nvalue = [0, 2, 0]'), Text(0.4444444444444444, 0.4166666666666667, 'gini = 0.0\nsamples = 2\nvalue = [0, 0, 2]'), Text(0.7777777777777778, 0.5833333333333334, 'x[2] <= 4.85\ngini = 0.053\nsamples = 37\nvalue = [0, 1, 36]'), Text(0.6666666666666666, 0.4166666666666667, 'x[0] <= 5.95\ngini = 0.5\nsamples = 2\nvalue = [0, 1, 1]'), Text(0.5555555555555556, 0.25, 'gini = 0.0\nsamples = 1\nvalue = [0, 1, 0]'), Text(0.7777777777777778, 0.25, 'gini = 0.0\nsamples = 1\nvalue = [0, 0, 1]'), Text(0.8888888888888888, 0.4166666666666667, 'gini = 0.0\nsamples = 35\nvalue = [0, 0, 35]')]

# List categories in the classifier

iris.target_names

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

# Perform predictions

clf.predict(X_test)

array([1, 1, 1, 2, 1, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 2, 1, 2, 2, 1, 2, 2,

1, 0, 2, 0, 2, 0, 2, 0])

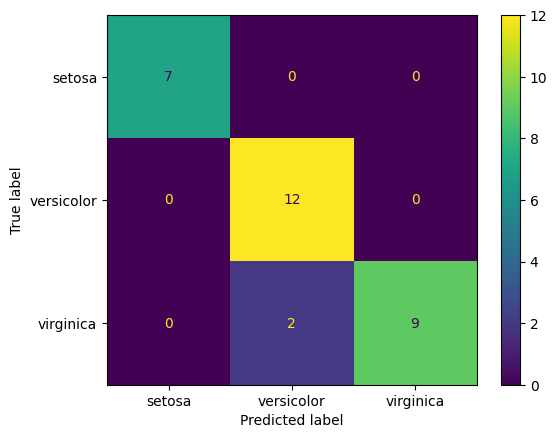

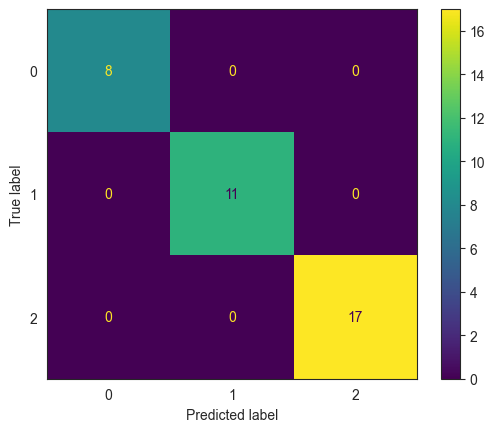

Confusion Matrix and Classification Report

We did not split the data into train/test sets. For now, we will evaluate the model based on the entire data set (ie, on the training set).

For this trivial example, the decision tree has done a perfect job of predicting flower species.

confusion_matrix(y_true = y_test, y_pred = clf.predict(X_test))

array([[ 7, 0, 0],

[ 0, 12, 0],

[ 0, 2, 9]], dtype=int64)

print(classification_report(y_true = y_test, y_pred = clf.predict(X_test)))

precision recall f1-score support

0 1.00 1.00 1.00 7

1 0.86 1.00 0.92 12

2 1.00 0.82 0.90 11

accuracy 0.93 30

macro avg 0.95 0.94 0.94 30

weighted avg 0.94 0.93 0.93 30

ConfusionMatrixDisplay.from_estimator(clf, X_test, y_test, display_labels=iris.target_names);

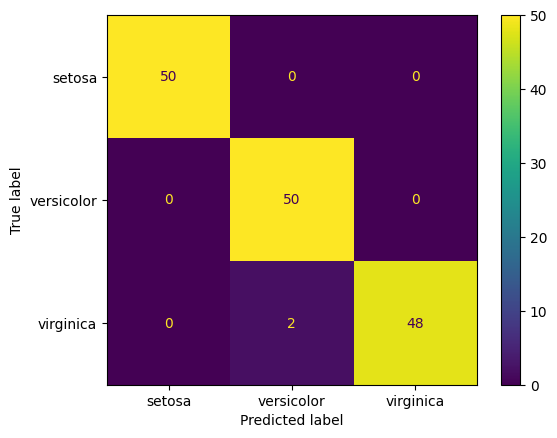

Confusion Matrix for All Data

# Just for the heck of it, let us predict the entire dataset using our model,

# and check the results

print(classification_report(y_true = y, y_pred = clf.predict(X)))

precision recall f1-score support

0 1.00 1.00 1.00 50

1 0.96 1.00 0.98 50

2 1.00 0.96 0.98 50

accuracy 0.99 150

macro avg 0.99 0.99 0.99 150

weighted avg 0.99 0.99 0.99 150

ConfusionMatrixDisplay.from_estimator(clf, X, y, display_labels=iris.target_names);

Class probabilities with decision trees

Decision Trees do not do a great job of predicting the probability of belonging to a particular class, for example, when compared to Logistic Regression.

Probabilities for class membership are just the proportion of observations in a particular class in the appropriate leaf node. For a tree with unlimited nodes, we will always mostly have p=100% for most predictions.

Scikit Learn provides a method to predict probabilities, clf.predict_proba(). If we apply this to our decision tree (first five observations only), we get as below:

# As can be seen below, the model does not give class probabilities

clf.predict(X)

array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2])

# Get class probabilities

clf.predict_proba(X[:5])

array([[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.]])

Predictions with decision trees

With this model, how do I predict if I have the measurements for a new flower?

Once a Decision Tree Classifier is built, new predictions can be obtained using the predict(X) method.

Imagine we have a new flower with dimensions 5, 3, 1 and 2 and need to predict its species. Since we have the featureset, we feed this information to the model and obtain the prediction.

Refer below for the steps in Python

# let us remind ourselves of what features need to predict a flower's species

iris.feature_names

['sepal length (cm)',

'sepal width (cm)',

'petal length (cm)',

'petal width (cm)']

# let us also look at existing feature set

X[:4]

array([[5.1, 3.5, 1.4, 0.2],

[4.9, 3. , 1.4, 0.2],

[4.7, 3.2, 1.3, 0.2],

[4.6, 3.1, 1.5, 0.2]])

# Next, the measurements for the new flower

new_flower = [[5,3,1,2]]

# Now the prediction

clf.predict(new_flower)

array([0])

# The above means it is the category at index 1 in the target

# Let us look at what the target names are.

# We see that the 'versicolor' is at index 1, so that is the prediction for the new flower

iris.target_names

array(['setosa', 'versicolor', 'virginica'], dtype='<U10')

# or, all of the above in one line

print(iris.target_names[clf.predict(new_flower)])

['setosa']

Decision Tree Regression

Decision trees can also be applied to estimating continuous values for the target variable.

They work in the same way as decision trees for classification, except that information gain is measured differently, eg by a reduction in standard deviation at the node level.

So splits for a node would be performed based on a variable/value that creates the maximum reduction in the standard deviation of the y values in the node.

The prediction is then the average of the observations in the leaf node.

As an example, let us consider the Boston House Price dataset that is built into sklearn. There are 506 rows × 14 variables

# Load the data

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

X = housing['data']

y = housing['target']

features = housing['feature_names']

DESCR = housing['DESCR']

cali_df = pd.DataFrame(X, columns = features)

cali_df.insert(0,'medv', y)

cali_df

| medv | MedInc | HouseAge | AveRooms | AveBedrms | Population | AveOccup | Latitude | Longitude | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.526 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |

| 1 | 3.585 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |

| 2 | 3.521 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |

| 3 | 3.413 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |

| 4 | 3.422 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 20635 | 0.781 | 1.5603 | 25.0 | 5.045455 | 1.133333 | 845.0 | 2.560606 | 39.48 | -121.09 |

| 20636 | 0.771 | 2.5568 | 18.0 | 6.114035 | 1.315789 | 356.0 | 3.122807 | 39.49 | -121.21 |

| 20637 | 0.923 | 1.7000 | 17.0 | 5.205543 | 1.120092 | 1007.0 | 2.325635 | 39.43 | -121.22 |

| 20638 | 0.847 | 1.8672 | 18.0 | 5.329513 | 1.171920 | 741.0 | 2.123209 | 39.43 | -121.32 |

| 20639 | 0.894 | 2.3886 | 16.0 | 5.254717 | 1.162264 | 1387.0 | 2.616981 | 39.37 | -121.24 |

20640 rows × 9 columns

# Let us look at the data dictionary

print(DESCR)

.. _california_housing_dataset:

California Housing dataset

--------------------------

**Data Set Characteristics:**

:Number of Instances: 20640

:Number of Attributes: 8 numeric, predictive attributes and the target

:Attribute Information:

- MedInc median income in block group

- HouseAge median house age in block group

- AveRooms average number of rooms per household

- AveBedrms average number of bedrooms per household

- Population block group population

- AveOccup average number of household members

- Latitude block group latitude

- Longitude block group longitude

:Missing Attribute Values: None

This dataset was obtained from the StatLib repository.

https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.html

The target variable is the median house value for California districts,

expressed in hundreds of thousands of dollars ($100,000).

This dataset was derived from the 1990 U.S. census, using one row per census

block group. A block group is the smallest geographical unit for which the U.S.

Census Bureau publishes sample data (a block group typically has a population

of 600 to 3,000 people).

A household is a group of people residing within a home. Since the average

number of rooms and bedrooms in this dataset are provided per household, these

columns may take surprisingly large values for block groups with few households

and many empty houses, such as vacation resorts.

It can be downloaded/loaded using the

:func:`sklearn.datasets.fetch_california_housing` function.

.. topic:: References

- Pace, R. Kelley and Ronald Barry, Sparse Spatial Autoregressions,

Statistics and Probability Letters, 33 (1997) 291-297

We can fit a decision tree regressor to the data.

1. First, we load the data.

1. Next, we split the data into train and test sets, keeping 20% for the test set.

1. Then we fit a model to the training data, and store the model object in the variable model.

1. Next we use the model to predict the test cases.

1. Finally, we evaluate the results.

# Train-test split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

# model = tree.DecisionTreeRegressor()

model = tree.DecisionTreeRegressor(max_depth=9)

model = model.fit(X_train, y_train)

model.predict(X_test)

array([3.0852 , 0.96175 , 0.96001341, ..., 2.26529224, 2.15065625,

2.02124038])

print(model.tree_.max_depth)

9

y_pred = model.predict(X_test)

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 0.38773905612449

RMSE = 0.622686964794101

MAE = 0.4204310076086696

# Just checking to see if we have everything working right

print('Count of predictions:', len(y_pred))

print('Count of ground truth labels:', len(y_test))

Count of predictions: 4128

Count of ground truth labels: 4128

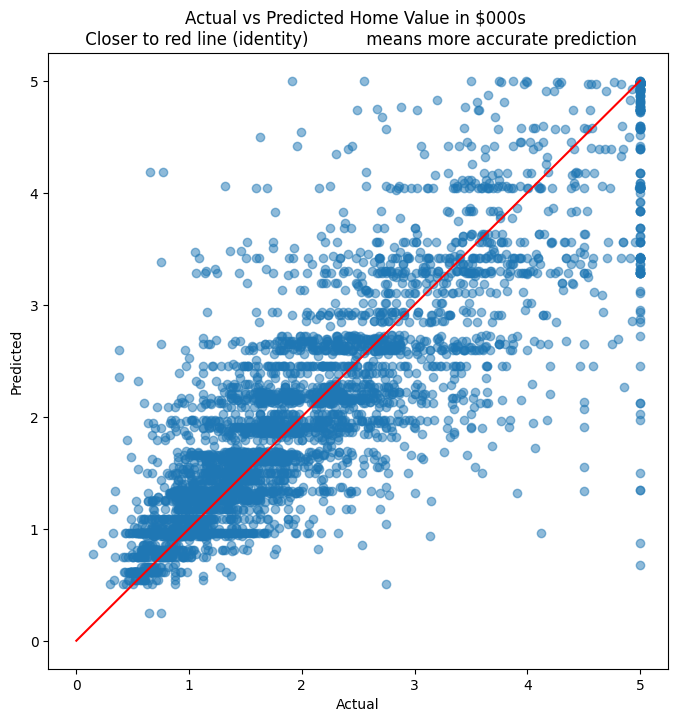

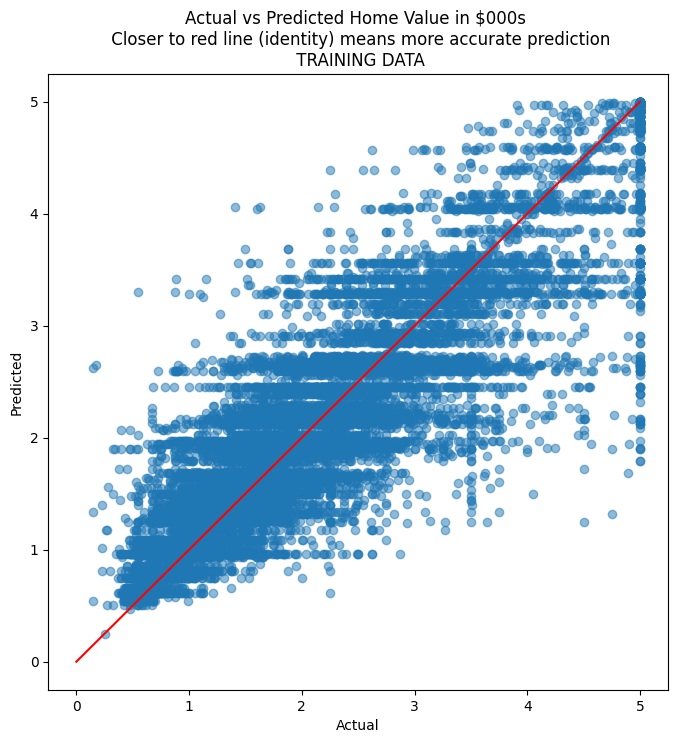

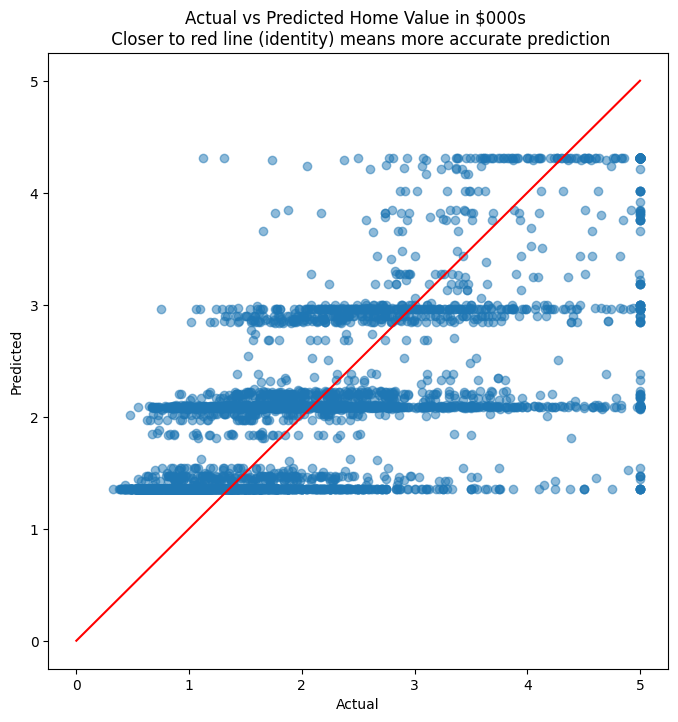

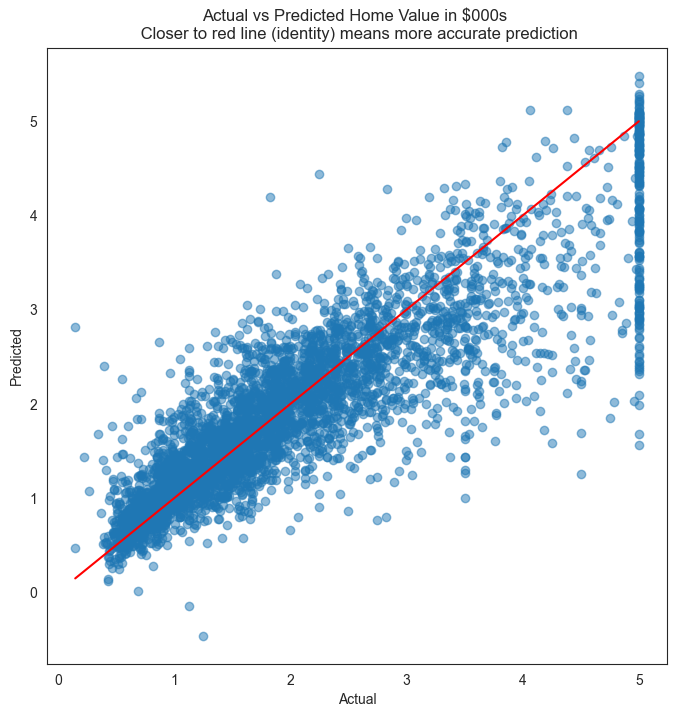

# We plot the actual home prices vs the predictions in a scatterplot

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted Home Value in $000s \n Closer to red line (identity) \

means more accurate prediction')

plt.plot( [0,5],[0,5], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

# Context for the RMSE. What is the mean, min and max?

cali_df.medv.describe()

count 20640.000000

mean 2.068558

std 1.153956

min 0.149990

25% 1.196000

50% 1.797000

75% 2.647250

max 5.000010

Name: medv, dtype: float64

# R-squared calculation

pd.DataFrame({'actual':y_test, 'predicted':y_pred}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.720348 |

| predicted | 0.720348 | 1.000000 |

How well did my model generalize?

Let us see how my model did on the training data

# R-squared

pd.DataFrame({'actual':y_train, 'predicted':model.predict(X_train)}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.794566 |

| predicted | 0.794566 | 1.000000 |

# Calculate MSE, RMSE and MAE

y_pred = model.predict(X_train)

print('MSE = ', mean_squared_error(y_train,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_train,y_pred)))

print('MAE = ', mean_absolute_error(y_train,y_pred))

MSE = 0.27139484003226105

RMSE = 0.5209556987232802

MAE = 0.3574534397649355

# Scatterplot for actual vs predicted on TRAINING data

plt.figure(figsize = (8,8))

plt.scatter(y_train, model.predict(X_train), alpha=0.5)

plt.title('Actual vs Predicted Home Value in $000s \n Closer to red line (identity) means more accurate prediction\n TRAINING DATA')

plt.plot( [0,5],[0,5], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

Addressing Overfitting in Decision Trees

The simplest way to address overfitting in decision trees is to limit the depth of the trees using the max_depth parameter when fitting the model. The depth of a decision tree is the length of the longest path from a root to a leaf.

Find out the current value of the max tree depth in the example (print(model.tree_.max_depth)), and change the max_depth parameter to see if you can reduce the RMSE for the test set.

You can also change the minimum count of samples required to be present in a leaf node (min_samples_leaf), and the minimum number of observations required before a node is allowed to split (min_samples_split).

Random Forest

A random forest fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting.

Random Forest almost always gives results superior to decision trees, and is therefore preferred over decision trees. However, because the results provided by random forest are the result of averaging multiple trees, explainability can become an issue.

Therefore decision trees may still be preferred over random forest in the interest of explainability.

At this point, it is important to introduce two new concepts: bootstrapping, and bagging.

Bootstrapping

In bootstrapping, you treat the sample as if it were the population, and draw repeated samples of equal size from it. The samples are drawn with replacement. Now think that for each of these new samples you calculate a population characteristic, say the median. Because you potentially have a very large number of samples (theoretically infinite), you can get a distribution of the median of the population from our original single sample.

If we hadn’t done bootstrapping (ie resample from the sample with replacement), we would have only one point estimate for the median.

Bootstrapping improves the estimation process and reduces variance.

Bagging (Bootstrap + Aggregation)

Bagging is a type of ensemble learning. Ensemble learning is where we combine multiple models to produce a better prediction or classification.

In bagging, we produce multiple different training sets (called bootstrap samples), by sampling with replacement from the original dataset. Then, for each bootstrap sample, we build a model.

The results in an ensemble of models, where each model votes with the equal weight. Typically, the goal of this procedure is to reduce the variance of the model of interest (e.g. decision trees).

The Random Forest algorithm is when the above technique is applied to decision trees.

Random Forests

Random forests are an example of ensemble learning, where multiple models are combined to produce a better prediction or classification.

Random forests are collections of trees. Predictions are equivalent to the average prediction of component trees.

Multiple decision trees are created from the source data using a technique called bagging. Multiple different training sets (called bootstrap samples) are created by sampling with replacement from the original dataset.

Then, for each bootstrap sample, we build a model. The results in an ensemble of models, where each model votes with the equal weight. Typically, the goal of this procedure is to reduce the variance of the model of interest.

When applied to decision trees, this becomes random forest.

Random Forest for Classification

# load the data

college = pd.read_csv('collegePlace.csv')

college.shape

(2966, 8)

college

| Age | Gender | Stream | Internships | CGPA | Hostel | HistoryOfBacklogs | PlacedOrNot | |

|---|---|---|---|---|---|---|---|---|

| 0 | 22 | Male | Electronics And Communication | 1 | 8 | 1 | 1 | 1 |

| 1 | 21 | Female | Computer Science | 0 | 7 | 1 | 1 | 1 |

| 2 | 22 | Female | Information Technology | 1 | 6 | 0 | 0 | 1 |

| 3 | 21 | Male | Information Technology | 0 | 8 | 0 | 1 | 1 |

| 4 | 22 | Male | Mechanical | 0 | 8 | 1 | 0 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2961 | 23 | Male | Information Technology | 0 | 7 | 0 | 0 | 0 |

| 2962 | 23 | Male | Mechanical | 1 | 7 | 1 | 0 | 0 |

| 2963 | 22 | Male | Information Technology | 1 | 7 | 0 | 0 | 0 |

| 2964 | 22 | Male | Computer Science | 1 | 7 | 0 | 0 | 0 |

| 2965 | 23 | Male | Civil | 0 | 8 | 0 | 0 | 1 |

2966 rows × 8 columns

# divide the dataset into train and test sets, separating the features and target variable

X = college[['Age', 'Internships', 'CGPA', 'Hostel', 'HistoryOfBacklogs']].values

y = college['PlacedOrNot'].values

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# classify using random forest classifier

RandomForest = RandomForestClassifier()

model_rf = RandomForest.fit(X_train, y_train)

pred = model_rf.predict(X_test)

print(classification_report(y_true = y_test, y_pred = pred))

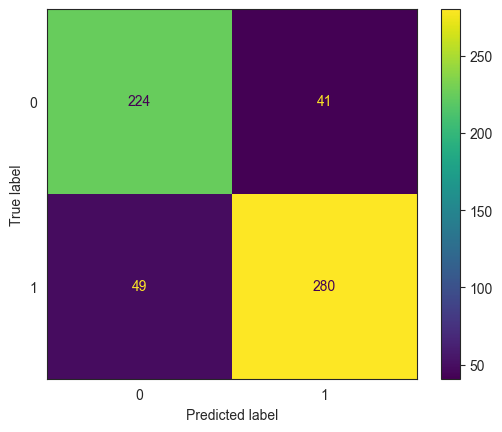

ConfusionMatrixDisplay.from_estimator(model_rf, X_test, y_test);

precision recall f1-score support

0 0.79 0.96 0.87 279

1 0.96 0.77 0.86 315

accuracy 0.86 594

macro avg 0.87 0.87 0.86 594

weighted avg 0.88 0.86 0.86 594

# get probabilities for each observation in the test set

model_rf.predict_proba(X_test)

array([[0.92120988, 0.07879012],

[0.79331614, 0.20668386],

[0. , 1. ],

...,

[0.01571429, 0.98428571],

[0. , 1. ],

[0.73565005, 0.26434995]])

y_test

array([0, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 0,

0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0,

0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 0,

0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 1, 0,

1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1,

1, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1,

1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0,

0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0,

0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0,

0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0,

1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1,

0, 0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,

1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0,

0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1,

0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1,

1, 1, 0, 1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 1,

0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0,

1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1,

1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1,

1, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0,

0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1,

1, 1, 0, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1, 1, 1, 0,

0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 1,

1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0,

0, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0,

1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1,

1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1],

dtype=int64)

# get probabilities for each observation in the test set

pred_prob = model_rf.predict_proba(X_test)[:,1]

model_rf.classes_

array([0, 1], dtype=int64)

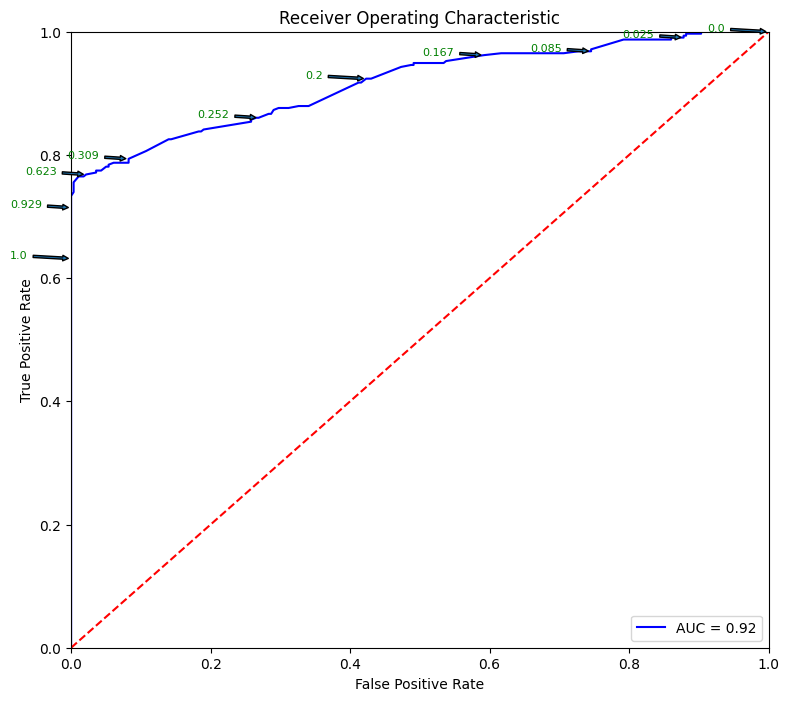

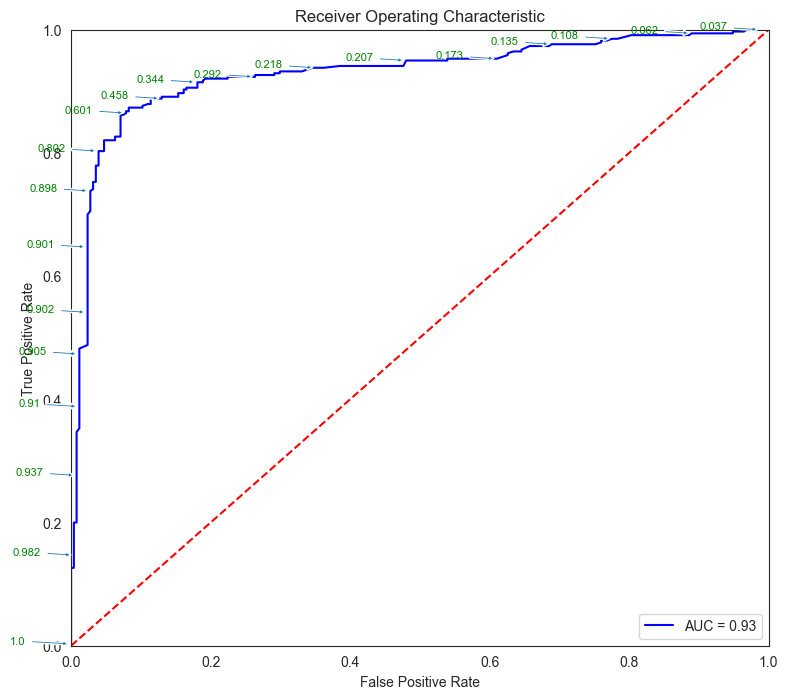

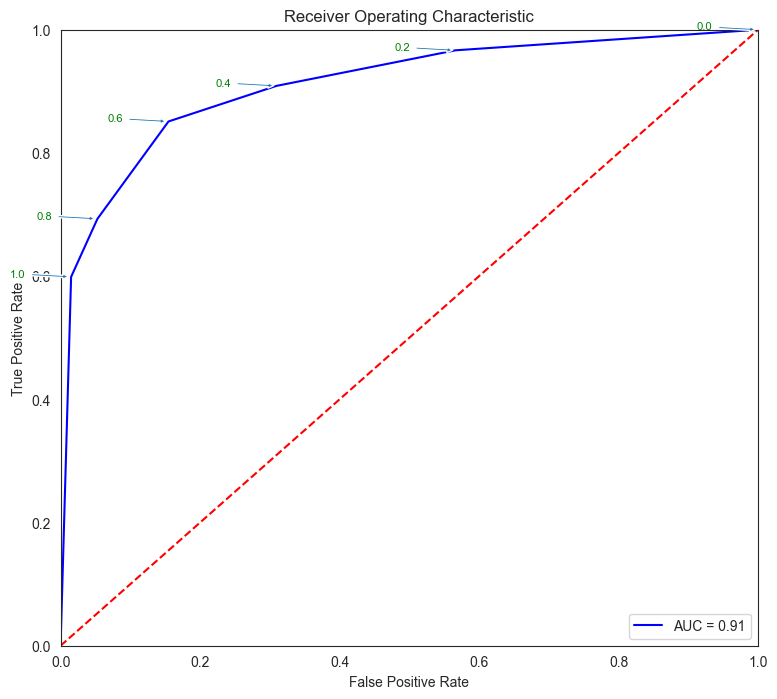

# Source for code below: https://stackoverflow.com/questions/25009284/how-to-plot-roc-curve-in-python

fpr, tpr, thresholds = metrics.roc_curve(y_test, pred_prob)

roc_auc = metrics.auc(fpr, tpr)

plt.figure(figsize = (9,8))

plt.title('Receiver Operating Characteristic')

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.legend(loc = 'lower right')

plt.plot([0, 1], [0, 1],'r--')

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

for i, txt in enumerate(thresholds):

if i in np.arange(1, len(thresholds), 10): # print every 10th point to prevent overplotting:

plt.annotate(text = round(txt,3), xy = (fpr[i], tpr[i]),

xytext=(-44, 0), textcoords='offset points',

arrowprops={'arrowstyle':"simple"}, color='green',fontsize=8)

plt.show()

threshold_dataframe = pd.DataFrame({'fpr':fpr, 'tpr': tpr, 'threshold':thresholds}).sort_values(by='threshold')

threshold_dataframe.head()

| fpr | tpr | threshold | |

|---|---|---|---|

| 91 | 1.000000 | 1.000000 | 0.000000 |

| 90 | 0.921147 | 1.000000 | 0.001429 |

| 89 | 0.906810 | 1.000000 | 0.002843 |

| 88 | 0.903226 | 1.000000 | 0.003333 |

| 87 | 0.903226 | 0.996825 | 0.006190 |

Random Forest for Regression

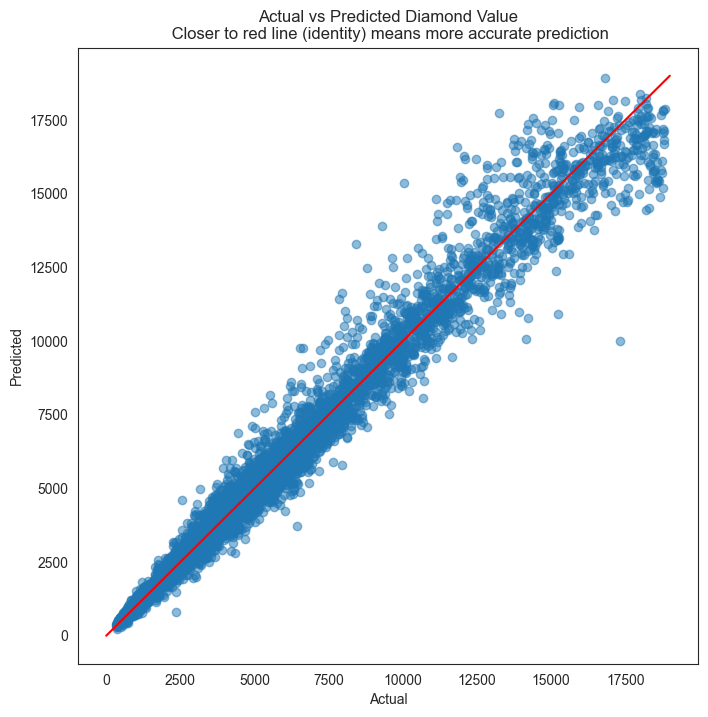

The Random Forest algorithm can also be used effectively for regression problems. Let us try a larger dataset this time.

We will try to predict diamond prices based on all the other attributes we know about the diamonds.

However, our data contains a number of categorical variables. We will need to convert these into numerical using one-hot encoding. Let us do that next!

from sklearn.ensemble import RandomForestRegressor

diamonds = sns.load_dataset("diamonds")

diamonds.head()

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | Ideal | E | SI2 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 |

| 1 | 0.21 | Premium | E | SI1 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 |

| 2 | 0.23 | Good | E | VS1 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 |

| 3 | 0.29 | Premium | I | VS2 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 |

| 4 | 0.31 | Good | J | SI2 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 |

diamonds = pd.get_dummies(diamonds)

diamonds.head()

| carat | depth | table | price | x | y | z | cut_Ideal | cut_Premium | cut_Very Good | ... | color_I | color_J | clarity_IF | clarity_VVS1 | clarity_VVS2 | clarity_VS1 | clarity_VS2 | clarity_SI1 | clarity_SI2 | clarity_I1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 | True | False | False | ... | False | False | False | False | False | False | False | False | True | False |

| 1 | 0.21 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 | False | True | False | ... | False | False | False | False | False | False | False | True | False | False |

| 2 | 0.23 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 | False | False | False | ... | False | False | False | False | False | True | False | False | False | False |

| 3 | 0.29 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 | False | True | False | ... | True | False | False | False | False | False | True | False | False | False |

| 4 | 0.31 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 | False | False | False | ... | False | True | False | False | False | False | False | False | True | False |

5 rows × 27 columns

# Define X and y as arrays. y is the price column, X is everything else

X = diamonds.loc[:, diamonds.columns != 'price'].values

y = diamonds.price.values

# Train test split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

# Fit model

model_rf_regr = RandomForestRegressor(max_depth=2, random_state=0)

model_rf_regr.fit(X_train, y_train)

model_rf_regr.predict(X_test)

array([1054.29089419, 1054.29089419, 1054.29089419, ..., 6145.62603236,

1054.29089419, 1054.29089419])

# Evaluate model

y_pred = model_rf_regr.predict(X_test)

from sklearn.metrics import mean_absolute_error, mean_squared_error

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 2757832.1354701095

RMSE = 1660.6721938631083

MAE = 1036.4110791707412

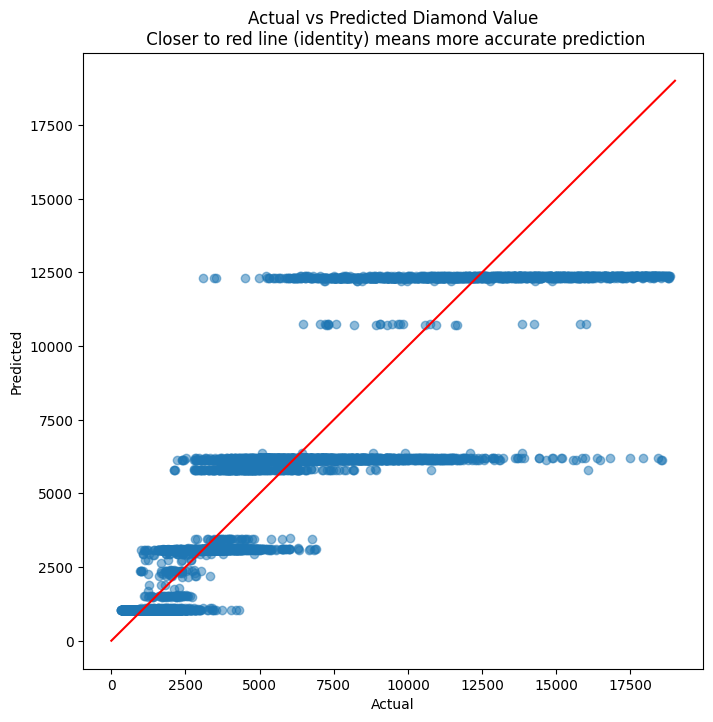

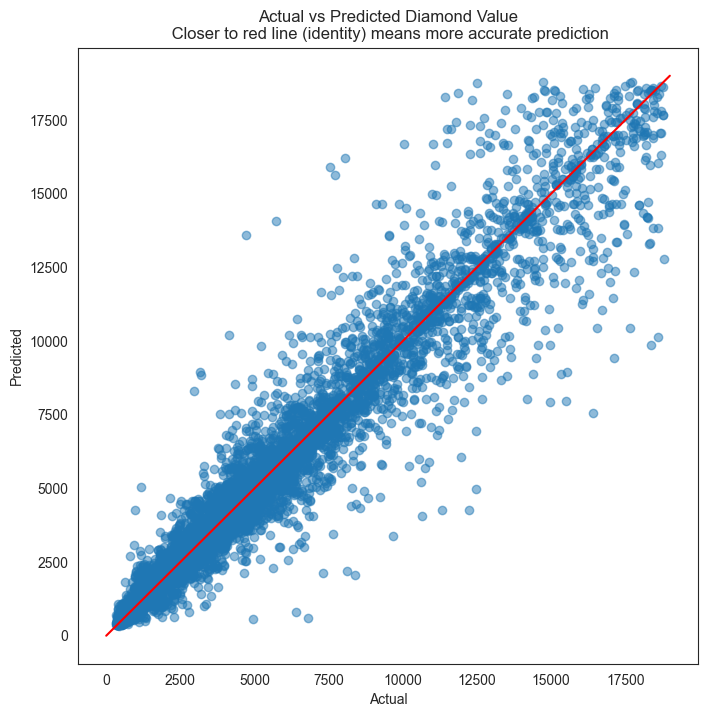

# Evaluate residuals

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted Diamond Value\n Closer to red line (identity) means more accurate prediction')

plt.plot( [0,19000],[0,19000], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

diamonds.price.describe()

count 53940.000000

mean 3932.799722

std 3989.439738

min 326.000000

25% 950.000000

50% 2401.000000

75% 5324.250000

max 18823.000000

Name: price, dtype: float64

# R-squared calculation

pd.DataFrame({'actual':y_test, 'predicted':y_pred}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.827564 |

| predicted | 0.827564 | 1.000000 |

importance = model_rf_regr.feature_importances_

feature_names = diamonds.loc[:, diamonds.columns != 'price'].columns

pd.DataFrame({'Feature':feature_names, 'Importance':importance}).sort_values(by='Importance', ascending=False)

| Feature | Importance | |

|---|---|---|

| 0 | carat | 0.668208 |

| 4 | y | 0.331792 |

| 14 | color_G | 0.000000 |

| 24 | clarity_SI2 | 0.000000 |

| 23 | clarity_SI1 | 0.000000 |

| 22 | clarity_VS2 | 0.000000 |

| 21 | clarity_VS1 | 0.000000 |

| 20 | clarity_VVS2 | 0.000000 |

| 19 | clarity_VVS1 | 0.000000 |

| 18 | clarity_IF | 0.000000 |

| 17 | color_J | 0.000000 |

| 16 | color_I | 0.000000 |

| 15 | color_H | 0.000000 |

| 13 | color_F | 0.000000 |

| 1 | depth | 0.000000 |

| 12 | color_E | 0.000000 |

| 11 | color_D | 0.000000 |

| 10 | cut_Fair | 0.000000 |

| 9 | cut_Good | 0.000000 |

| 8 | cut_Very Good | 0.000000 |

| 7 | cut_Premium | 0.000000 |

| 6 | cut_Ideal | 0.000000 |

| 5 | z | 0.000000 |

| 3 | x | 0.000000 |

| 2 | table | 0.000000 |

| 25 | clarity_I1 | 0.000000 |

Random Forest Regression - Another Example

Let us look at our California Housing Dataset that we examined before to predict home prices.

# Load the data

from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()

X = housing['data']

y = housing['target']

features = housing['feature_names']

DESCR = housing['DESCR']

cali_df = pd.DataFrame(X, columns = features)

cali_df.insert(0,'medv', y)

cali_df

| medv | MedInc | HouseAge | AveRooms | AveBedrms | Population | AveOccup | Latitude | Longitude | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 4.526 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |

| 1 | 3.585 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |

| 2 | 3.521 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |

| 3 | 3.413 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |

| 4 | 3.422 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 20635 | 0.781 | 1.5603 | 25.0 | 5.045455 | 1.133333 | 845.0 | 2.560606 | 39.48 | -121.09 |

| 20636 | 0.771 | 2.5568 | 18.0 | 6.114035 | 1.315789 | 356.0 | 3.122807 | 39.49 | -121.21 |

| 20637 | 0.923 | 1.7000 | 17.0 | 5.205543 | 1.120092 | 1007.0 | 2.325635 | 39.43 | -121.22 |

| 20638 | 0.847 | 1.8672 | 18.0 | 5.329513 | 1.171920 | 741.0 | 2.123209 | 39.43 | -121.32 |

| 20639 | 0.894 | 2.3886 | 16.0 | 5.254717 | 1.162264 | 1387.0 | 2.616981 | 39.37 | -121.24 |

20640 rows × 9 columns

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

from sklearn.ensemble import RandomForestRegressor

model = RandomForestRegressor(max_depth=2, random_state=0)

model.fit(X_train, y_train)

RandomForestRegressor(max_depth=2, random_state=0)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

RandomForestRegressor(max_depth=2, random_state=0)

y_pred = model.predict(X_test)

from sklearn.metrics import mean_absolute_error, mean_squared_error

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 0.7109737317347218

RMSE = 0.8431925828271509

MAE = 0.6387472402358885

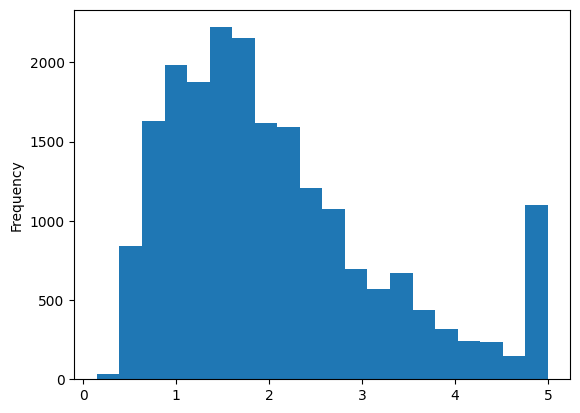

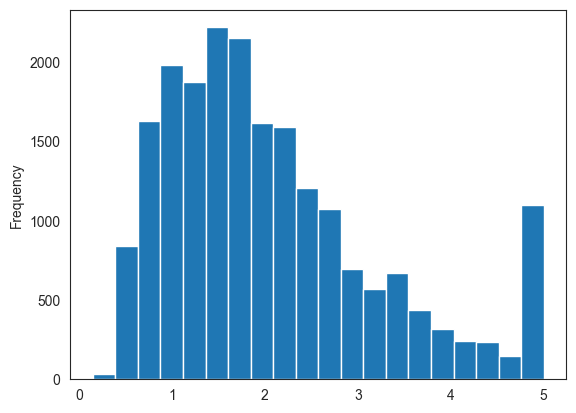

print(cali_df.medv.describe())

cali_df.medv.plot.hist(bins=20)

count 20640.000000

mean 2.068558

std 1.153956

min 0.149990

25% 1.196000

50% 1.797000

75% 2.647250

max 5.000010

Name: medv, dtype: float64

<Axes: ylabel='Frequency'>

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted Home Value in $000s \n Closer to red line (identity) means more accurate prediction')

plt.plot( [0,5],[0,5], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted")

Text(0, 0.5, 'Predicted')

XGBoost

Like Random Forest, XGBoost is a tree based algorithm. In Random Forest, multiple trees are built in parallel, and averaged. In XGBoost, trees are built sequentially, with each tree correcting the errors of the previous one.

Trees are built in sequence, with each next tree in the sequence targeting the errors of the previous one. The trees are then added, with a multiplicative constant ‘learning rate’ between 0 and 1 applied to each tree.

XGBoost has by far exceeded the performance of other algorithms, and is one of the most used algorithms on Kaggle. In many cases, it outperforms Neural Nets.

Extensive documentation is available at https://xgboost.readthedocs.io/en/latest

Example

Let us consider our college placement dataset, and check if we are able to predict the ‘PlacedOrNot’ variable correctly.

We will convert the categorical variables (stream of study, gender, etc) into numerical using one-hot encoding.

We will keep 20% of the data as the test set, and fit a model using the XGBoost algorithm.

XGBoost - Classification

# load the data

college = pd.read_csv('collegePlace.csv')

college = pd.get_dummies(college)

college

| Age | Internships | CGPA | Hostel | HistoryOfBacklogs | PlacedOrNot | Gender_Female | Gender_Male | Stream_Civil | Stream_Computer Science | Stream_Electrical | Stream_Electronics And Communication | Stream_Information Technology | Stream_Mechanical | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 22 | 1 | 8 | 1 | 1 | 1 | False | True | False | False | False | True | False | False |

| 1 | 21 | 0 | 7 | 1 | 1 | 1 | True | False | False | True | False | False | False | False |

| 2 | 22 | 1 | 6 | 0 | 0 | 1 | True | False | False | False | False | False | True | False |

| 3 | 21 | 0 | 8 | 0 | 1 | 1 | False | True | False | False | False | False | True | False |

| 4 | 22 | 0 | 8 | 1 | 0 | 1 | False | True | False | False | False | False | False | True |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2961 | 23 | 0 | 7 | 0 | 0 | 0 | False | True | False | False | False | False | True | False |

| 2962 | 23 | 1 | 7 | 1 | 0 | 0 | False | True | False | False | False | False | False | True |

| 2963 | 22 | 1 | 7 | 0 | 0 | 0 | False | True | False | False | False | False | True | False |

| 2964 | 22 | 1 | 7 | 0 | 0 | 0 | False | True | False | True | False | False | False | False |

| 2965 | 23 | 0 | 8 | 0 | 0 | 1 | False | True | True | False | False | False | False | False |

2966 rows × 14 columns

# Test train split

X = college.loc[:, college.columns != 'PlacedOrNot']

y = college['PlacedOrNot']

feature_names = college.loc[:, college.columns != 'PlacedOrNot'].columns

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Fit the model

from xgboost import XGBClassifier

model_xgb = XGBClassifier(use_label_encoder=False, objective= 'binary:logistic')

model_xgb.fit(X_train, y_train)

XGBClassifier(base_score=None, booster=None, callbacks=None,

colsample_bylevel=None, colsample_bynode=None,

colsample_bytree=None, device=None, early_stopping_rounds=None,

enable_categorical=False, eval_metric=None, feature_types=None,

gamma=None, grow_policy=None, importance_type=None,

interaction_constraints=None, learning_rate=None, max_bin=None,

max_cat_threshold=None, max_cat_to_onehot=None,

max_delta_step=None, max_depth=None, max_leaves=None,

min_child_weight=None, missing=nan, monotone_constraints=None,

multi_strategy=None, n_estimators=None, n_jobs=None,

num_parallel_tree=None, random_state=None, ...)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

XGBClassifier(base_score=None, booster=None, callbacks=None,

colsample_bylevel=None, colsample_bynode=None,

colsample_bytree=None, device=None, early_stopping_rounds=None,

enable_categorical=False, eval_metric=None, feature_types=None,

gamma=None, grow_policy=None, importance_type=None,

interaction_constraints=None, learning_rate=None, max_bin=None,

max_cat_threshold=None, max_cat_to_onehot=None,

max_delta_step=None, max_depth=None, max_leaves=None,

min_child_weight=None, missing=nan, monotone_constraints=None,

multi_strategy=None, n_estimators=None, n_jobs=None,

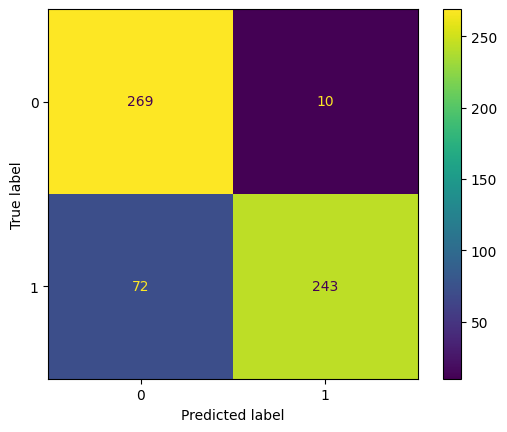

num_parallel_tree=None, random_state=None, ...)# Perform predictions, and store the results in a variable called 'pred'

pred = model_xgb.predict(X_test)

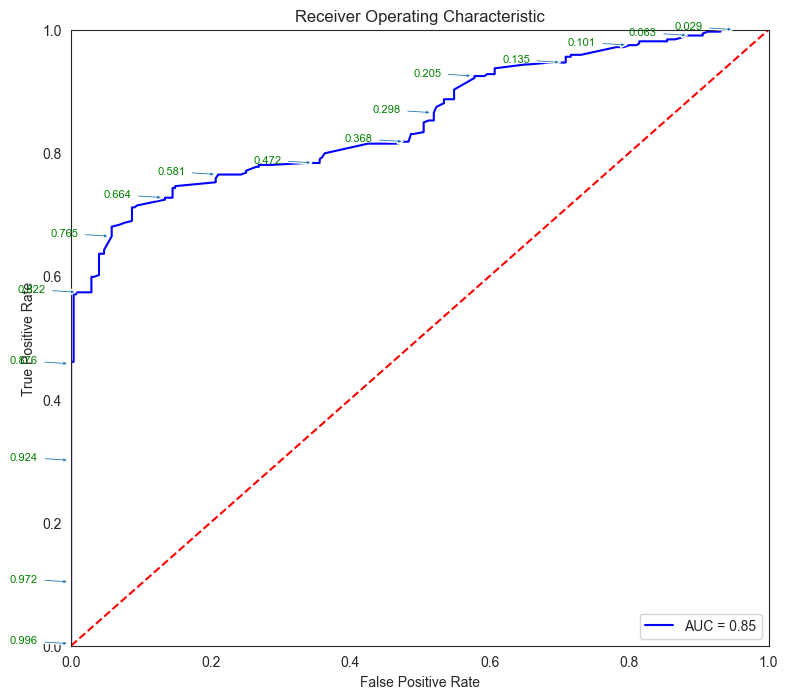

# Check the classification report and the confusion matrix

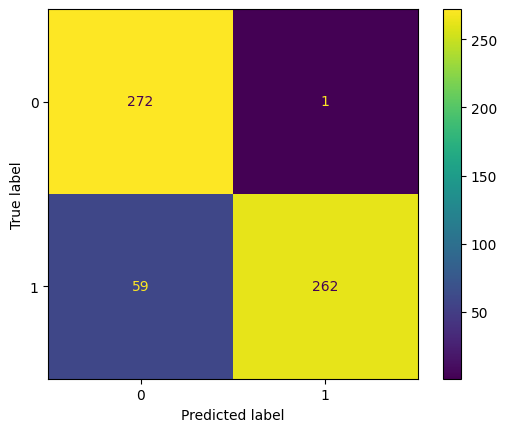

print(classification_report(y_true = y_test, y_pred = pred))

ConfusionMatrixDisplay.from_estimator(model_xgb, X_test, y_test);

precision recall f1-score support

0 0.82 0.92 0.87 269

1 0.93 0.84 0.88 325

accuracy 0.88 594

macro avg 0.88 0.88 0.88 594

weighted avg 0.88 0.88 0.88 594

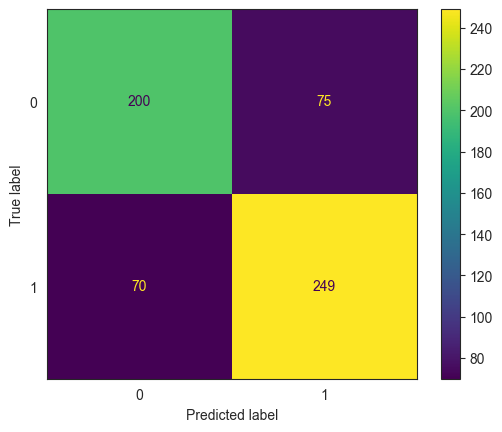

Class Probabilities

We can obtain class probabilities from an XGBoost model. These can help us use different thresholds for cutoff and decide on the error rates we are comfortable with.

model_xgb.classes_

array([0, 1])

y_test

1435 0

1899 1

1475 1

1978 1

100 1

..

1614 1

1717 0

556 0

1773 0

1294 0

Name: PlacedOrNot, Length: 594, dtype: int64

pred_prob = model_xgb.predict_proba(X_test).round(3)

pred_prob

array([[0.314, 0.686],

[0.004, 0.996],

[0.002, 0.998],

...,

[0.984, 0.016],

[0.758, 0.242],

[0.773, 0.227]], dtype=float32)

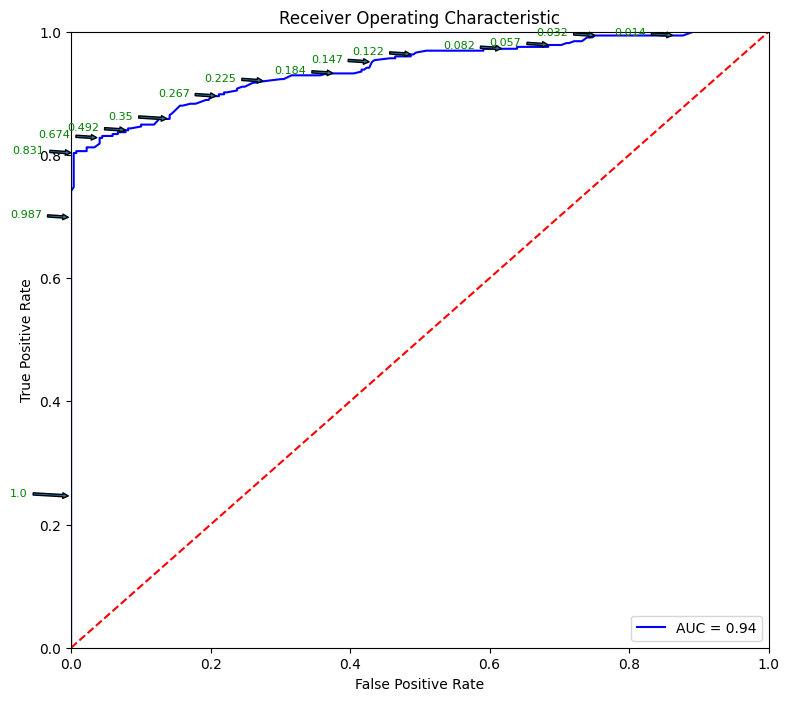

# Source for code below: https://stackoverflow.com/questions/25009284/how-to-plot-roc-curve-in-python

fpr, tpr, thresholds = metrics.roc_curve(y_test, pred_prob[:,1])

roc_auc = metrics.auc(fpr, tpr)

plt.figure(figsize = (9,8))

plt.title('Receiver Operating Characteristic')

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.legend(loc = 'lower right')

plt.plot([0, 1], [0, 1],'r--')

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

for i, txt in enumerate(thresholds):

if i in np.arange(1, len(thresholds), 10): # print every 10th point to prevent overplotting:

plt.annotate(text = round(txt,3), xy = (fpr[i], tpr[i]),

xytext=(-44, 0), textcoords='offset points',

arrowprops={'arrowstyle':"simple"}, color='green',fontsize=8)

plt.show()

threshold_dataframe = pd.DataFrame({'fpr':fpr, 'tpr': tpr, 'threshold':thresholds}).sort_values(by='threshold')

threshold_dataframe.head()

| fpr | tpr | threshold | |

|---|---|---|---|

| 150 | 1.000000 | 1.0 | 0.000 |

| 149 | 0.985130 | 1.0 | 0.002 |

| 148 | 0.981413 | 1.0 | 0.003 |

| 147 | 0.947955 | 1.0 | 0.006 |

| 146 | 0.929368 | 1.0 | 0.007 |

Change results by varying threshold

# Look at how the probabilities look for the first 10 observations

# The first column is class 0, and the second column is class 1

model_xgb.predict_proba(X_test)[:10]

array([[3.143e-01, 6.857e-01],

[4.300e-03, 9.957e-01],

[2.000e-03, 9.980e-01],

[8.000e-04, 9.992e-01],

[8.798e-01, 1.202e-01],

[5.881e-01, 4.119e-01],

[8.866e-01, 1.134e-01],

[3.000e-04, 9.997e-01],

[9.956e-01, 4.400e-03],

[1.463e-01, 8.537e-01]], dtype=float32)

# Let us round the above as to make it a bit easier to read...

# same thing as prior cell, just presentation

np.round(model_xgb.predict_proba(X_test)[:10], 3)

array([[0.314, 0.686],

[0.004, 0.996],

[0.002, 0.998],

[0.001, 0.999],

[0.88 , 0.12 ],

[0.588, 0.412],

[0.887, 0.113],

[0. , 1. ],

[0.996, 0.004],

[0.146, 0.854]], dtype=float32)

# Now see what the actual prediction is for the first 10 items

# You can see the model has picked the most probable item

# for identifying which category it should be assigned.

#

# We can vary the threshold to change the predictions.

# We do this next

model_xgb.predict(X_test)[:10]

array([1, 1, 1, 1, 0, 0, 0, 1, 0, 1])

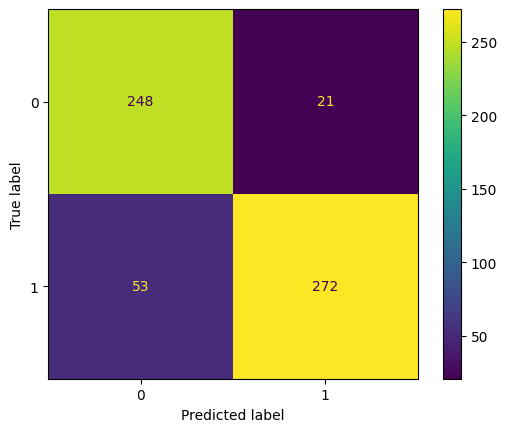

# Set threshold for identifying class 1

threshold = 0.9

# Create predictions. Note that predictions give us probabilities, not classes!

pred_prob = model_xgb.predict_proba(X_test)

# We drop the probabilities for class 0, and keep just the second column

pred_prob = pred_prob[:,1]

# Convert probabilities to 1s and 0s based on threshold

pred = (pred_prob>threshold).astype(int)

# confusion matrix

cm = confusion_matrix(y_test, pred)

print ("Confusion Matrix : \n", cm)

ConfusionMatrixDisplay(confusion_matrix=cm).plot();

# accuracy score of the model

print('Test accuracy = ', accuracy_score(y_test, pred))

print(classification_report(y_true = y_test, y_pred = pred,))

Confusion Matrix :

[[272 1]

[ 59 262]]

Test accuracy = 0.898989898989899

precision recall f1-score support

0 0.82 1.00 0.90 273

1 1.00 0.82 0.90 321

accuracy 0.90 594

macro avg 0.91 0.91 0.90 594

weighted avg 0.92 0.90 0.90 594

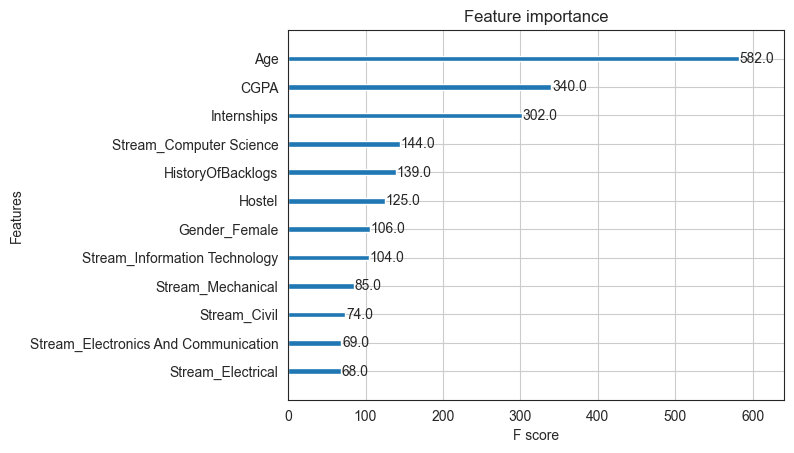

Feature Importance

Using the method feature_importances_, we can get a sense for what the model considers more important than others. However, feature importance identified in this way should be reviewed in the context of domain knowledge. Refer article at https://explained.ai/rf-importance/

# Check feature importance

# This can be misleading though - check out https://explained.ai/rf-importance/

importance = model_xgb.feature_importances_

pd.DataFrame({'Feature':feature_names, 'Importance':importance}).sort_values(by='Importance', ascending=False)

| Feature | Importance | |

|---|---|---|

| 2 | CGPA | 0.525521 |

| 9 | Stream_Electrical | 0.086142 |

| 1 | Internships | 0.073115 |

| 10 | Stream_Electronics And Communication | 0.059893 |

| 7 | Stream_Civil | 0.049865 |

| 0 | Age | 0.047633 |

| 12 | Stream_Mechanical | 0.042866 |

| 4 | HistoryOfBacklogs | 0.041009 |

| 11 | Stream_Information Technology | 0.019961 |

| 3 | Hostel | 0.018885 |

| 5 | Gender_Female | 0.017859 |

| 8 | Stream_Computer Science | 0.017251 |

| 6 | Gender_Male | 0.000000 |

from xgboost import plot_importance

# plot feature importance

plot_importance(model_xgb)

plt.show()

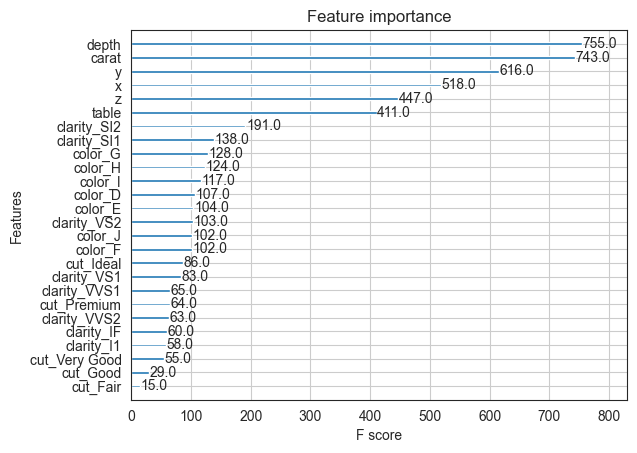

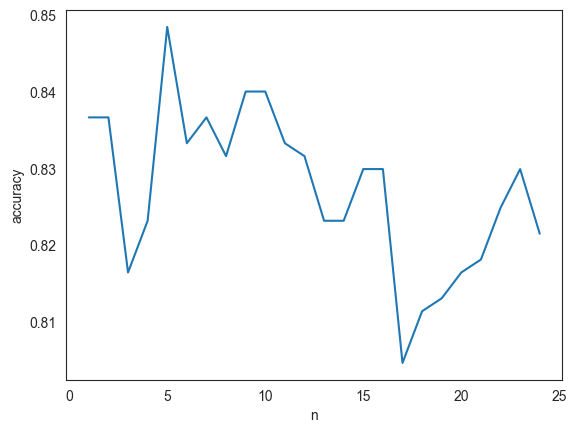

XGBoost for Regression

Let us try to predict diamond prices again, this time using XGBoost. As we can see below, RMSE is half of what we had with Random Forest.

# Load data

diamonds = sns.load_dataset("diamonds")

diamonds.head()

| carat | cut | color | clarity | depth | table | price | x | y | z | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | Ideal | E | SI2 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 |

| 1 | 0.21 | Premium | E | SI1 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 |

| 2 | 0.23 | Good | E | VS1 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 |

| 3 | 0.29 | Premium | I | VS2 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 |

| 4 | 0.31 | Good | J | SI2 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 |

# Get dummy variables

diamonds = pd.get_dummies(diamonds)

diamonds.head()

| carat | depth | table | price | x | y | z | cut_Ideal | cut_Premium | cut_Very Good | cut_Good | cut_Fair | color_D | color_E | color_F | color_G | color_H | color_I | color_J | clarity_IF | clarity_VVS1 | clarity_VVS2 | clarity_VS1 | clarity_VS2 | clarity_SI1 | clarity_SI2 | clarity_I1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.23 | 61.5 | 55.0 | 326 | 3.95 | 3.98 | 2.43 | True | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | True | False |

| 1 | 0.21 | 59.8 | 61.0 | 326 | 3.89 | 3.84 | 2.31 | False | True | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | True | False | False |

| 2 | 0.23 | 56.9 | 65.0 | 327 | 4.05 | 4.07 | 2.31 | False | False | False | True | False | False | True | False | False | False | False | False | False | False | False | True | False | False | False | False |

| 3 | 0.29 | 62.4 | 58.0 | 334 | 4.20 | 4.23 | 2.63 | False | True | False | False | False | False | False | False | False | False | True | False | False | False | False | False | True | False | False | False |

| 4 | 0.31 | 63.3 | 58.0 | 335 | 4.34 | 4.35 | 2.75 | False | False | False | True | False | False | False | False | False | False | False | True | False | False | False | False | False | False | True | False |

# Define X and y as arrays. y is the price column, X is everything else

X = diamonds.loc[:, diamonds.columns != 'price'].values

y = diamonds.price.values

# Define X and y as arrays. y is the price column, X is everything else

X = diamonds.loc[:, diamonds.columns != 'price']

y = diamonds.price

# Train test split

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)

# Fit model

from xgboost import XGBRegressor

model_xgb_regr = XGBRegressor()

model_xgb_regr.fit(X_train, y_train)

model_xgb_regr.predict(X_test)

array([ 7206.3213, 3110.482 , 5646.054 , ..., 13976.481 , 5555.7554,

11428.439 ], dtype=float32)

# Evaluate model

y_pred = model_xgb_regr.predict(X_test)

from sklearn.metrics import mean_absolute_error, mean_squared_error

print('MSE = ', mean_squared_error(y_test,y_pred))

print('RMSE = ', np.sqrt(mean_squared_error(y_test,y_pred)))

print('MAE = ', mean_absolute_error(y_test,y_pred))

MSE = 280824.1563066477

RMSE = 529.9284445155287

MAE = 276.8015830181774

# Evaluate residuals

plt.figure(figsize = (8,8))

plt.scatter(y_test, y_pred, alpha=0.5)

plt.title('Actual vs Predicted Diamond Value\n Closer to red line (identity) means more accurate prediction')

plt.plot( [0,19000],[0,19000], color='red' )

plt.xlabel("Actual")

plt.ylabel("Predicted");

# R-squared calculation

pd.DataFrame({'actual':y_test, 'predicted':y_pred}).corr()**2

| actual | predicted | |

|---|---|---|

| actual | 1.000000 | 0.982202 |

| predicted | 0.982202 | 1.000000 |

from xgboost import plot_importance

# plot feature importance

plot_importance(model_xgb_regr);

As we can see, XGBoost has vastly improved the prediction results. R-squared is 0.98, and the residual plot looks much better than with Random Forest.

diamonds.price.describe()

count 53940.000000

mean 3932.799722

std 3989.439738

min 326.000000

25% 950.000000

50% 2401.000000

75% 5324.250000

max 18823.000000

Name: price, dtype: float64

Linear Methods

Linear methods are different from tree based methods that we looked at earlier. They approach the problem from the perspective of plotting the points and drawing a line (or a plane) that separates the categories.

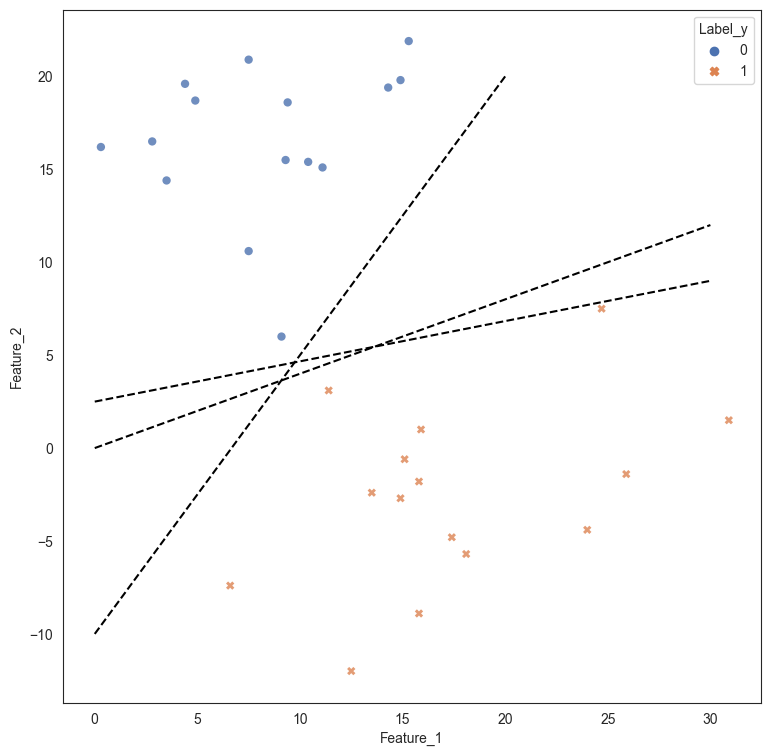

Let us consider a toy dataset that we create at random. The dataset has two features (Feature_1 and Feature_2), that help us distinguish between two classes - 0 and 1.

The data is graphed in the scatterplot below. The point to note here is that it is pretty easy to distinguish between the two classes by drawing a straight line between the two classes. The question though is which line is the best possible line for classification, given an infinite number of such lines can be drawn?

# Import libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.datasets import make_blobs, make_classification

# Generate random data

X, y, centers = make_blobs(n_samples=30, centers=2,

n_features=2, random_state=14,

return_centers=True,

center_box=(0,20), cluster_std = 5)

# Round to one place of decimal

X = np.round_(X,1)

y = np.round_(y,1)

# Create a dataframe with the features and the y variable

df = pd.DataFrame(dict(Feature_1=X[:,0], Feature_2=X[:,1], Label_y=y))

df = round(df,ndigits=2)

# Plot the data

plt.figure(figsize=(9,9))

sns.scatterplot(data = df, x = 'Feature_1', y = 'Feature_2', style = 'Label_y', hue = 'Label_y',

alpha = .8, palette="deep",edgecolor = 'None')

# Plot possible lines to discriminate between classes

plt.plot([0,30],[2.5,9], 'k--')

plt.plot([0,30], [0,12], 'k--')

plt.plot([0,20], [-10,20], 'k--');

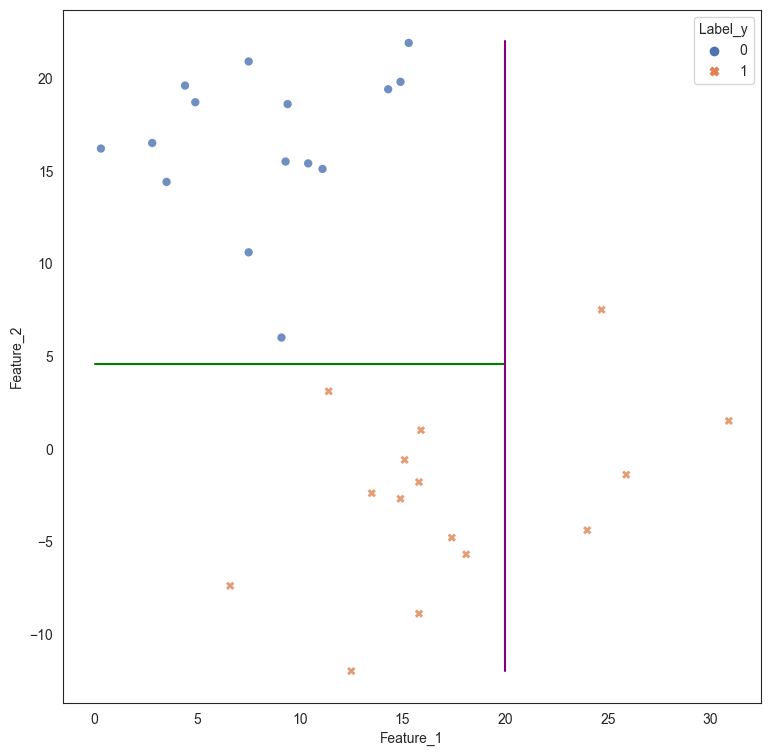

If we were to create a decision tree, the problem is solved as the decision tree draws two straight line boundaries - first at Feature_2 > 4.55, and the second at Feature_1 > 20. While this works for the current data, we can obviously see that a more robust and simpler solution would be to draw a straight line between the data that is at an angle separating the two classes.

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

from sklearn import tree

# iris = load_iris()

clf = tree.DecisionTreeClassifier()

clf = clf.fit(X, y)

import graphviz

dot_data = tree.export_graphviz(clf, out_file=None)

graph = graphviz.Source(dot_data)

# graph.render("iris")

dot_data = tree.export_graphviz(clf, out_file=None,

feature_names=['Feature_1', 'Feature_2'],

class_names=['Class_0', 'Class_1'],

filled=True, rounded=True,

special_characters=True)

graph = graphviz.Source(dot_data)

graph

# Plot the data

plt.figure(figsize=(9,9))

sns.scatterplot(data = df, x = 'Feature_1', y = 'Feature_2', style = 'Label_y', hue = 'Label_y',

alpha = .8, palette="deep",edgecolor = 'None')

# Plot possible lines to discriminate between classes

plt.plot([0,20],[4.55,4.55], color='green')

plt.plot([20,20], [-12,22], color = 'purple');

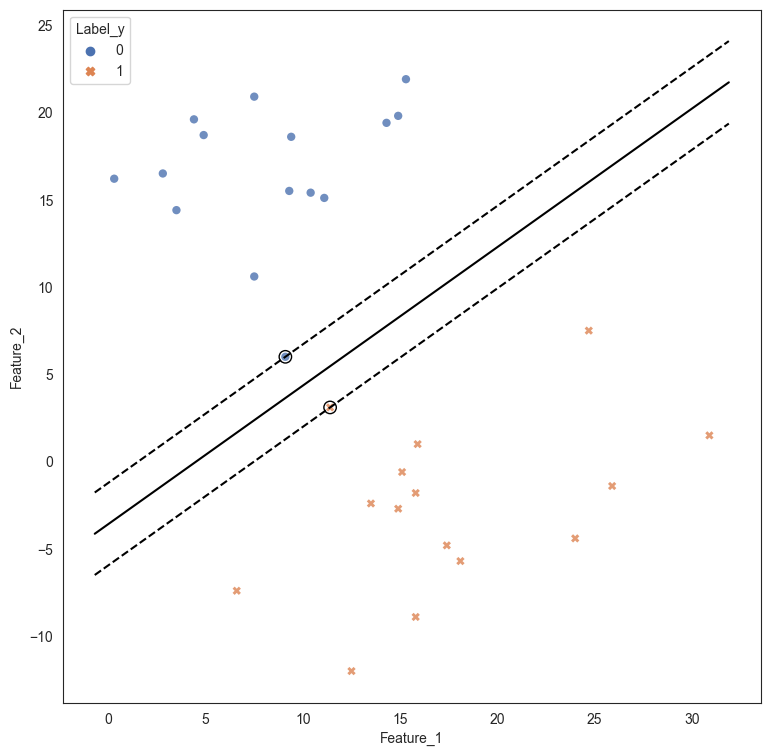

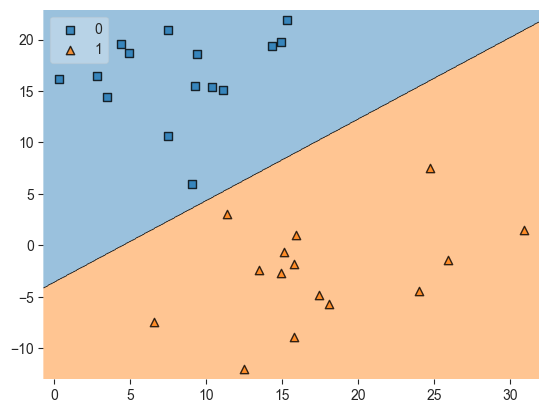

However, we can see that a single linear split provides better results.

This is an example of a Linear Classifier. The decision boundary is essentially a line represented as the weighted sum of the two axes. This is called a linear discriminant because it discriminates between the two classes using a linear combination of the independent attributes.

A general linear model would look as follows:

For our example, the linear classifier line is defined by the following example:

The coefficients, or weights, are often loosely interpreted as the importance of the features, assuming all feature values have been normalized.

The question is: How do we identify the correct line as many different lines are possible.

There are many methods to determine the line that serves as our linear discriminant. Each method differs in the ‘objective function’ that is optimized to arrive at the solution.

Two of the common methods used are:

- Linear Discriminant Analysis, and

- Support Vector Machines

# Fit linear model

from sklearn.svm import SVC

model_svc = SVC(kernel="linear")

model_svc.fit(X, y)

SVC(kernel='linear')In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

SVC(kernel='linear')

# Plot the data, and line dividing the classification

plt.figure(figsize=(9,9))

sns.scatterplot(data = df, x = 'Feature_1', y = 'Feature_2', style = 'Label_y', hue = 'Label_y',

alpha = .8, palette="deep",edgecolor = 'None');

# Plot the equation of the linear discriminant

w = model_svc.coef_[0]

a = -w[0] / w[1]

xx = np.linspace(df.Feature_1.min()-1, df.Feature_1.max()+1)

yy = a * xx - (model_svc.intercept_[0]) / w[1]

plt.plot(xx, yy, 'k-')

# Identify the support vectors, ie the points that decide the decision boundary

plt.scatter(

model_svc.support_vectors_[:, 0],

model_svc.support_vectors_[:, 1],

s=80,

facecolors="none",

zorder=10,

edgecolors="k",

)

# Plot the margin lines

margin = 1 / np.sqrt(np.sum(model_svc.coef_**2))

yy_down = yy - np.sqrt(1 + a**2) * margin

yy_up = yy + np.sqrt(1 + a**2) * margin

plt.plot(xx, yy_down, "k--")

plt.plot(xx, yy_up, "k--");

# Another way to plot the decision boundary for SVM models

# Source: https://stackoverflow.com/questions/51297423/plot-scikit-learn-sklearn-svm-decision-boundary-surface

from sklearn.svm import SVC

import matplotlib.pyplot as plt

from mlxtend.plotting import plot_decision_regions

svm_graph = SVC(kernel='linear')

svm_graph.fit(X, y)

plot_decision_regions(X, y, clf=svm_graph, legend=2)

plt.show()

Linear Discriminant Analysis

LDA assumes a normal distribution for the data points for the different categories, and attempts to create a 1D projection in a way that separates classes well.

Fortunately, there are libraries available that do all the tough math for us.

LDA expects predictor variables to be continuous due to its distributional assumption of independent variables being multivariate normal. This limits its use in situations where the predictor variables are categorical.

You do not need to standardize the feature set prior to using linear discriminant analysis. You should rule out logistic regression as a better alternative before using linear discriminant analysis.

LDA in Action

We revisit the collegePlace.csv data.

About the data:

A University Announced Its On-Campus Placement Records For The Engineering Course. The Data Is From The Years 2013 And 2014.

Data Fields:

- Age: Age At The Time Of Final Year

- Gender: Gender Of Candidate

- Stream: Engineering Stream That The Candidate Belongs To

- Internships: Number Of Internships Undertaken During The Course Of Studies, Not Necessarily Related To College Studies Or Stream

- CGPA: CGPA Till 6th Semester

- Hostel: Whether Student Lives In College Accomodation

- HistoryOfBacklogs: Whether Student Ever Had Any Backlogs In Any Subjects

- PlacedOrNot: Target Variable

# load the data

college = pd.read_csv('collegePlace.csv')

college

| Age | Gender | Stream | Internships | CGPA | Hostel | HistoryOfBacklogs | PlacedOrNot | |

|---|---|---|---|---|---|---|---|---|

| 0 | 22 | Male | Electronics And Communication | 1 | 8 | 1 | 1 | 1 |

| 1 | 21 | Female | Computer Science | 0 | 7 | 1 | 1 | 1 |

| 2 | 22 | Female | Information Technology | 1 | 6 | 0 | 0 | 1 |

| 3 | 21 | Male | Information Technology | 0 | 8 | 0 | 1 | 1 |

| 4 | 22 | Male | Mechanical | 0 | 8 | 1 | 0 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2961 | 23 | Male | Information Technology | 0 | 7 | 0 | 0 | 0 |

| 2962 | 23 | Male | Mechanical | 1 | 7 | 1 | 0 | 0 |

| 2963 | 22 | Male | Information Technology | 1 | 7 | 0 | 0 | 0 |

| 2964 | 22 | Male | Computer Science | 1 | 7 | 0 | 0 | 0 |

| 2965 | 23 | Male | Civil | 0 | 8 | 0 | 0 | 1 |

2966 rows × 8 columns

college.columns

Index(['Age', 'Gender', 'Stream', 'Internships', 'CGPA', 'Hostel', 'HistoryOfBacklogs', 'PlacedOrNot'], dtype='object')

# divide the dataset into train and test sets, separating the features and target variable

X = college[['Age', 'Internships', 'CGPA', 'Hostel', 'HistoryOfBacklogs']].values

y = college['PlacedOrNot'].values

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# apply Linear Discriminant Analysis

LDA = LinearDiscriminantAnalysis()

model_lda = LDA.fit(X = X_train, y = y_train)

pred = model_lda.predict(X_test)

college.PlacedOrNot.value_counts()

PlacedOrNot

1 1639

0 1327

Name: count, dtype: int64

1639/(1639+1327)

0.552596089008766

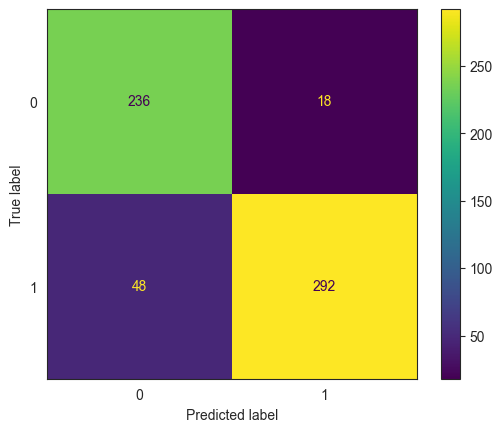

# evaluate performance

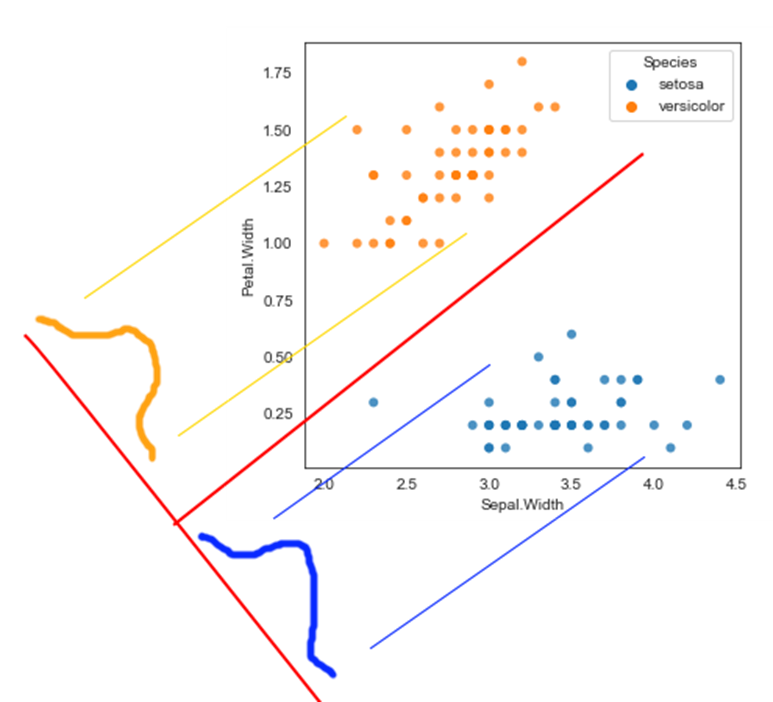

ConfusionMatrixDisplay.from_estimator(model_lda, X_test, y_test);

print(classification_report(y_true = y_test, y_pred = pred))

precision recall f1-score support

0 0.74 0.73 0.73 275

1 0.77 0.78 0.77 319

accuracy 0.76 594

macro avg 0.75 0.75 0.75 594

weighted avg 0.76 0.76 0.76 594

confusion_matrix(y_true = y_test, y_pred = pred)

array([[200, 75],

[ 70, 249]], dtype=int64)

# Get predictions

model_lda.predict(X_test)

array([0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1,

1, 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1,

0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1,

1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 1,

0, 0, 1, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0,

0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0,

0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1,

1, 0, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0,

1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1,

1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 0,

1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1,

0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1,

1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1,

0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0, 0, 1,

0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1,

1, 0, 1, 1, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1,

0, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 0,

0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0,

1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 0,

1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1,

0, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1,

1, 0, 0, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 1, 1, 1, 1,

1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0,

0, 1, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 1, 1, 0, 0, 0, 1,

0, 1, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0,

1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1,

0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1],

dtype=int64)

# Get probability of class membership

pred_prob = model_lda.predict_proba(X_test)

pred_prob

array([[0.876743 , 0.123257 ],

[0.0832324 , 0.9167676 ],

[0.23516243, 0.76483757],

...,

[0.05691089, 0.94308911],

[0.11393595, 0.88606405],

[0.06217806, 0.93782194]])

y_test

array([0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1,

1, 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0,

0, 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1,

1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1,

1, 0, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0,

0, 1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1,

1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 1, 1, 1, 1, 1, 1,

1, 0, 1, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 0,

1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1,

1, 1, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1,

1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1,

0, 1, 1, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 1, 1, 0, 1,

1, 1, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1,

1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 1,

1, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 1, 1, 1,

0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1,

1, 1, 0, 1, 0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 1,

0, 1, 1, 0, 0, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0,

1, 0, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1,

0, 0, 1, 1, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1, 1, 0, 0, 1, 1,

0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 0, 0, 1,

0, 1, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1,

0, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 1, 0,

1, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1,

0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0, 1,

0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1,

1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1],

dtype=int64)

# Source for code below: https://stackoverflow.com/questions/25009284/how-to-plot-roc-curve-in-python

fpr, tpr, thresholds = metrics.roc_curve(y_test, pred_prob[:,1])

roc_auc = metrics.auc(fpr, tpr)

plt.figure(figsize = (9,8))

plt.title('Receiver Operating Characteristic')

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.legend(loc = 'lower right')

plt.plot([0, 1], [0, 1],'r--')

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

for i, txt in enumerate(thresholds):

if i in np.arange(1, len(thresholds), 10): # print every 10th point to prevent overplotting:

plt.annotate(text = round(txt,3), xy = (fpr[i], tpr[i]),

xytext=(-44, 0), textcoords='offset points',

arrowprops={'arrowstyle':"simple"}, color='green',fontsize=8)

plt.show()

threshold_dataframe = pd.DataFrame({'fpr':fpr, 'tpr': tpr, 'threshold':thresholds}).sort_values(by='threshold')

threshold_dataframe.head()

| fpr | tpr | threshold | |

|---|---|---|---|

| 155 | 1.000000 | 1.0 | 0.006040 |

| 154 | 0.985455 | 1.0 | 0.015364 |

| 153 | 0.974545 | 1.0 | 0.015584 |

| 152 | 0.960000 | 1.0 | 0.028427 |

| 151 | 0.952727 | 1.0 | 0.028829 |

Closing remarks on LDA: - LDA can not be applied to regression problems, it is useful only for classification. - LDA does provide class membership probabilities, using the predict_proba() method. - There are additional variations to LDA, eg Quadratic Discriminant Analysis, and those may yield better results by allowing a non-linear decision boundary.

Support Vector Machines

Classification with SVM

SVMs use linear classification techniques, ie, they classify instances based on a linear function of the features. The idea behind SVMs is simple: instead of thinking about separating with a line, fit the fattest possible bar between the two classes.

The objective function for SVM incorporates the idea that a wider bar is better.

Once the widest bar is found, the linear discriminant will be the center line through the bar.

The distance between the dashed parallel lines is called the margin around the linear discriminant, and the objective function attempts to maximize the margin.

SVMs require data to be standardized for best results

SVM Example

We will use the same data as before – collegePlace.csv. However this time we will include all the variables, including the categorical variables. We convert the categorical variables to numerical using dummy variables with pd.get_dummies().

# load the data & convert categoricals into numerical variables

college = pd.read_csv('collegePlace.csv')

college = pd.get_dummies(college)

college

| Age | Internships | CGPA | Hostel | HistoryOfBacklogs | PlacedOrNot | Gender_Female | Gender_Male | Stream_Civil | Stream_Computer Science | Stream_Electrical | Stream_Electronics And Communication | Stream_Information Technology | Stream_Mechanical | |